DanceDJ: A 3D Dance Animation Authoring System for Live Performance

Naoya Iwamoto, Takuya Kato, Hubert P. H. Shum, Ryo Kakitsuka, Kenta Hara and Shigeo Morishima

Proceedings of the 2017 International Conference on Advances in Computer Entertainment Technology (ACE), 2017

Best Paper Award

Abstract

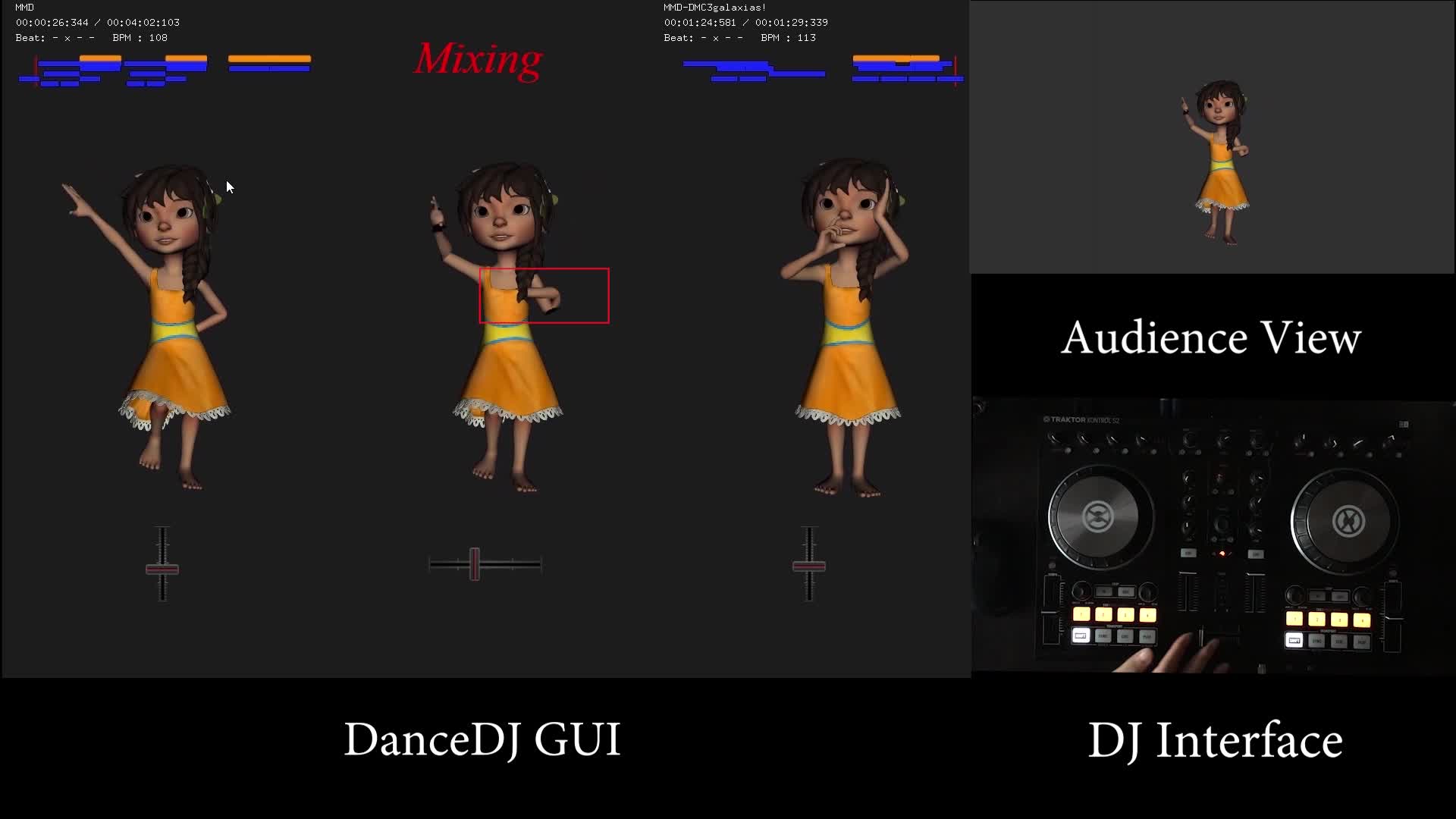

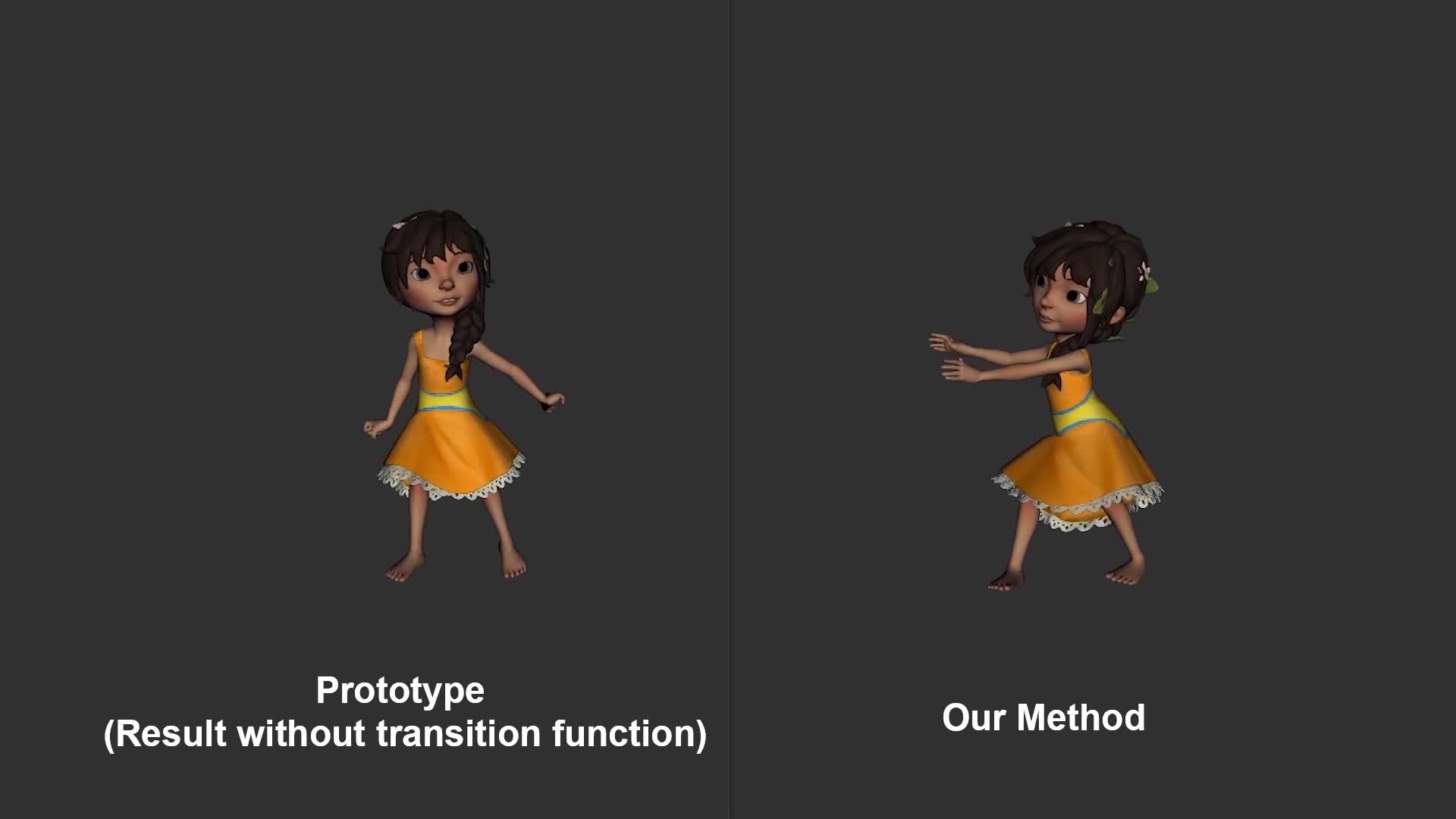

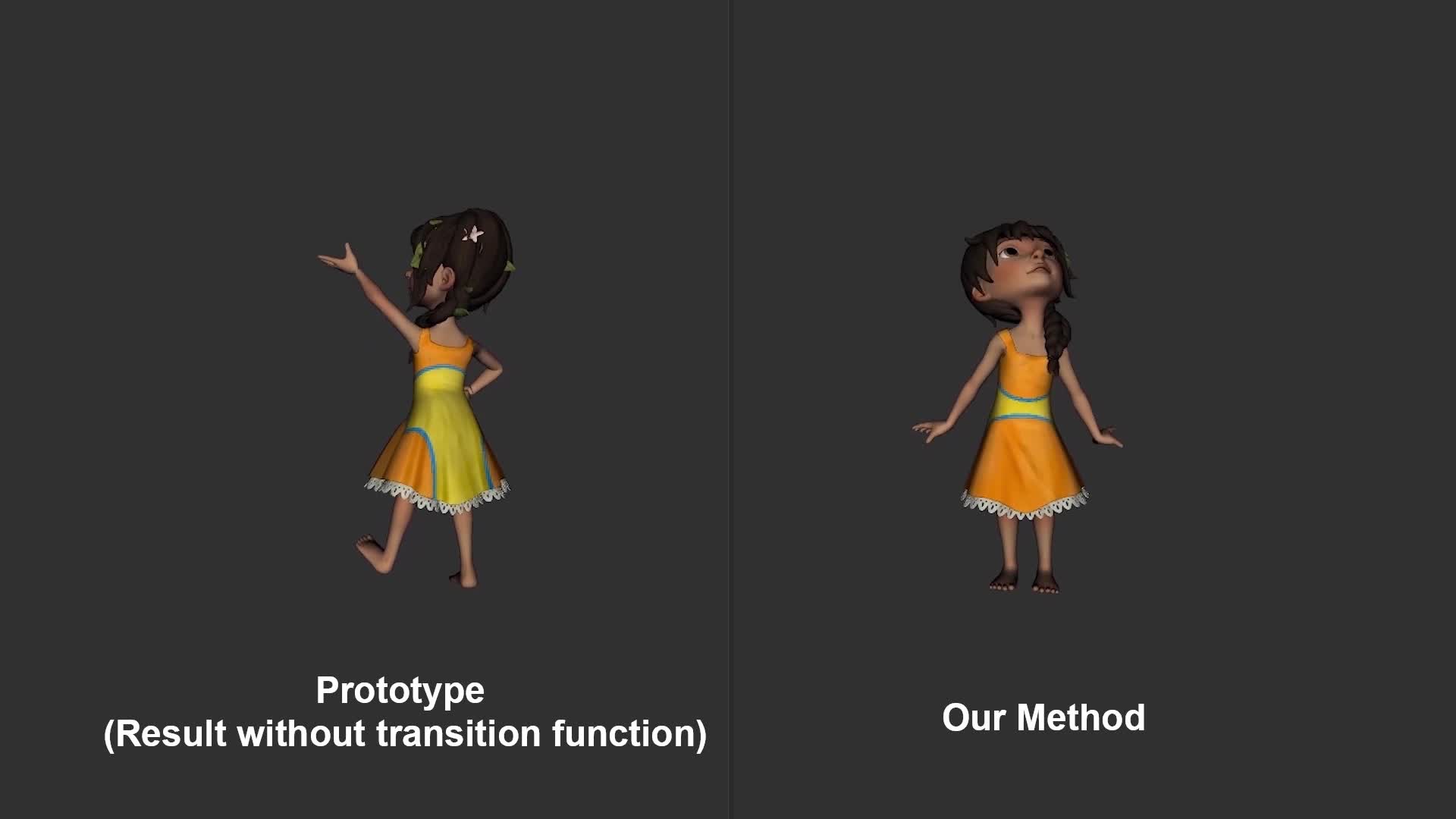

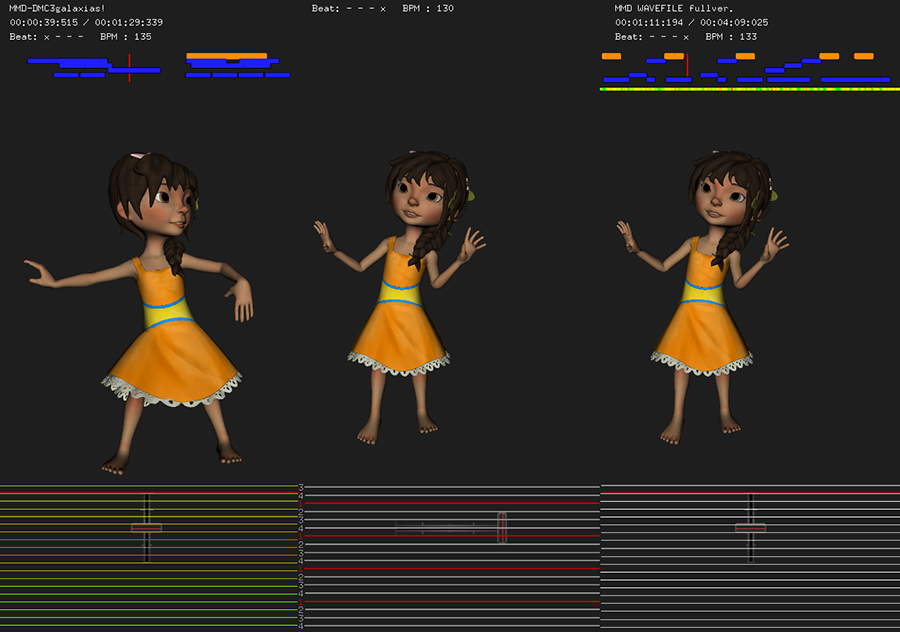

Dance is an important component of live performance for expressing emotion and presenting visual context. Human dance performances typically require expert knowledge of choreography and professional rehearsal, which are too costly for casual entertainment venues and clubs. Recent advances in character animation and motion synthesis have made it possible to synthesize virtual 3D dance characters in real time. The major problem with existing systems is the lack of an intuitive interface for controlling the dance animation in real time. We propose a new system called DanceDJ to solve this problem. Our system consists of two parts. The first part is an underlying motion analysis system that evaluates dance features, such as postures and movement tempo, as well as audio features, such as music tempo and structure. As a pre-process, given a dance motion database, our system evaluates the quality of possible timings for connecting and switching between different dance motions. The second part is a run-time control interface that provides visual guidance. We observe that disk jockeys (DJs) effectively control the mixing of music using a DJ controller, and therefore propose using a DJ controller to control dancing characters. This allows DJs to transfer their skills from music control to dance control using a similar hardware setup. We map different motion control functions onto the DJ controller and visualize the timing of natural connection points, such that the DJ can effectively govern the synthesized dance motion. We conducted two user experiments to evaluate the user experience and the quality of the dance character. Quantitative analysis shows that our system performs well in both motion control and simulation quality.
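To make the pre-processing idea concrete, the following is a minimal sketch of how candidate connection timings between two dance clips might be scored. It is not the metric used in the paper, which the abstract does not specify. The flattened pose representation, the beat-frame lists, the `velocity_weight` parameter, and the function names `pose_distance` and `transition_costs` are all assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical representation: one pose = flattened joint coordinates of a frame.
Pose = Sequence[float]


def pose_distance(a: Pose, b: Pose) -> float:
    """Euclidean distance between two flattened poses."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def transition_costs(
    src: Sequence[Pose],
    dst: Sequence[Pose],
    src_beats: Sequence[int],
    dst_beats: Sequence[int],
    velocity_weight: float = 0.5,  # assumed weighting, not taken from the paper
) -> List[Tuple[float, int, int]]:
    """Score every (source beat frame, target beat frame) pair.

    The cost combines posture difference and frame-to-frame velocity
    difference; a lower cost suggests a more natural switch point.
    Returns (cost, src_frame, dst_frame) tuples sorted by ascending cost.
    """
    costs = []
    for i in src_beats:
        for j in dst_beats:
            if i < 1 or j < 1:
                continue  # a previous frame is needed to estimate velocity
            posture = pose_distance(src[i], dst[j])
            v_src = [x - y for x, y in zip(src[i], src[i - 1])]
            v_dst = [x - y for x, y in zip(dst[j], dst[j - 1])]
            velocity = pose_distance(v_src, v_dst)
            costs.append((posture + velocity_weight * velocity, i, j))
    return sorted(costs)


# Toy one-dimensional "motions" with beats marked at chosen frames.
src_motion = [[0.0], [1.0], [2.0], [3.0]]
dst_motion = [[5.0], [2.0], [3.0], [3.0]]
ranked = transition_costs(src_motion, dst_motion, src_beats=[2], dst_beats=[1, 2])
best_cost, best_src_frame, best_dst_frame = ranked[0]
```

Restricting candidates to beat frames keeps transitions aligned with the music tempo, and because the ranking can be computed offline, a run-time interface would only need to look up and display the low-cost connection points.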

Supporting Grants

Received from The Ministry of Education, Culture, Sports, Science and Technology, 2015-2016