KD360-VoxelBEV: LiDAR and 360-Degree Camera Cross Modality Knowledge Distillation for Bird’s-Eye-View Segmentation

Wenke E, Yixin Sun, Jiaxu Liu, Hubert P. H. Shum, Amir Atapour-Abarghouei and Toby P. Breckon

Proceedings of the 2026 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2026

H5-Index: 131; Core A Conference

Abstract

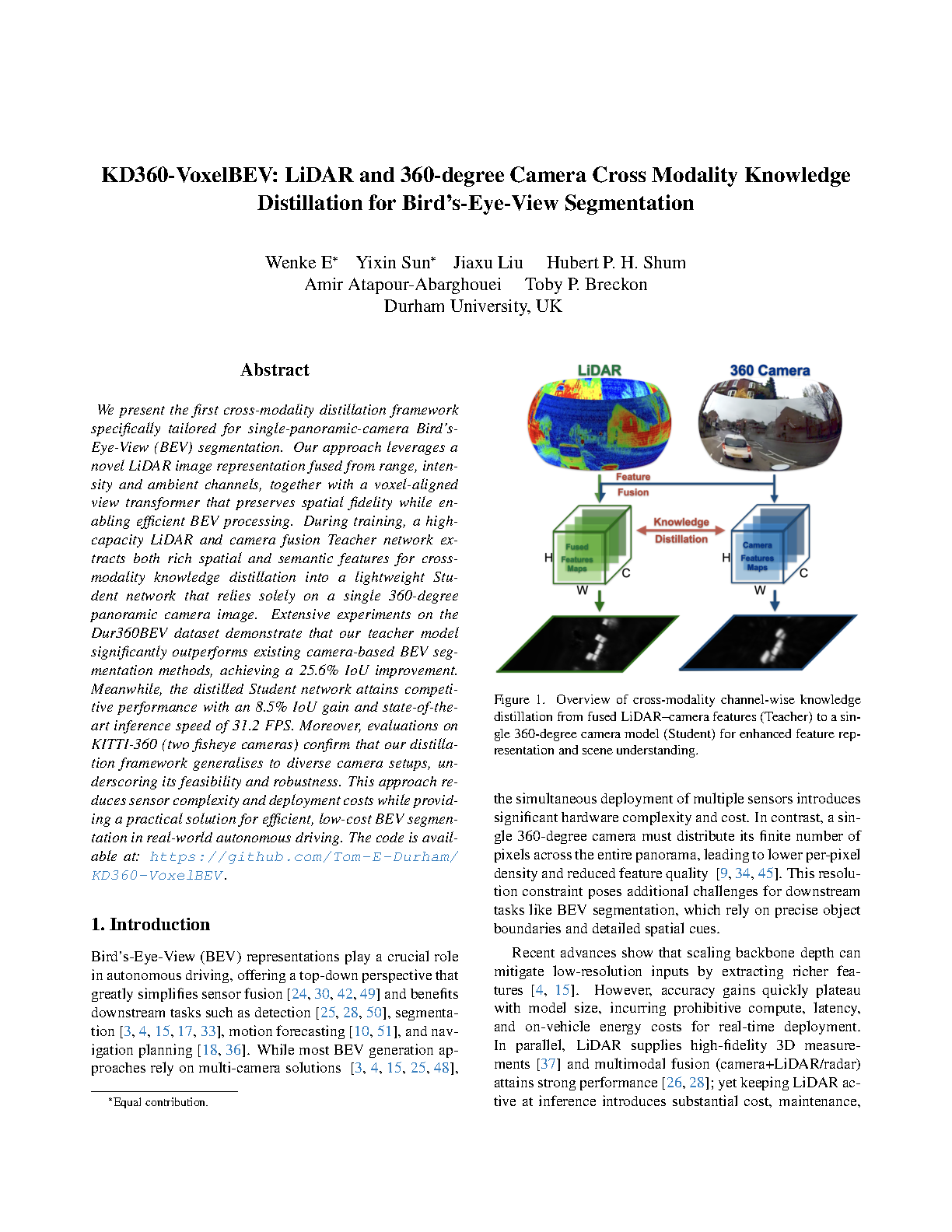

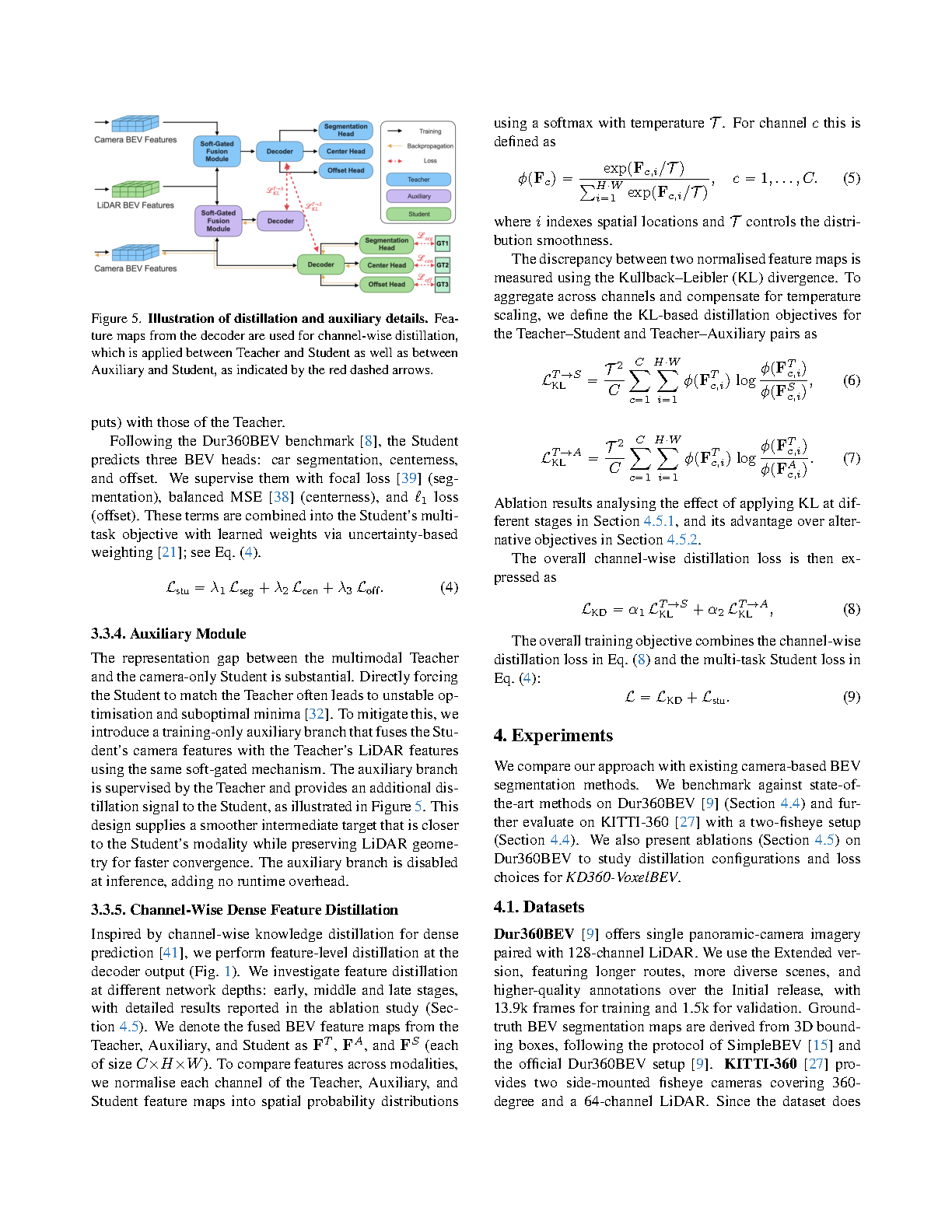

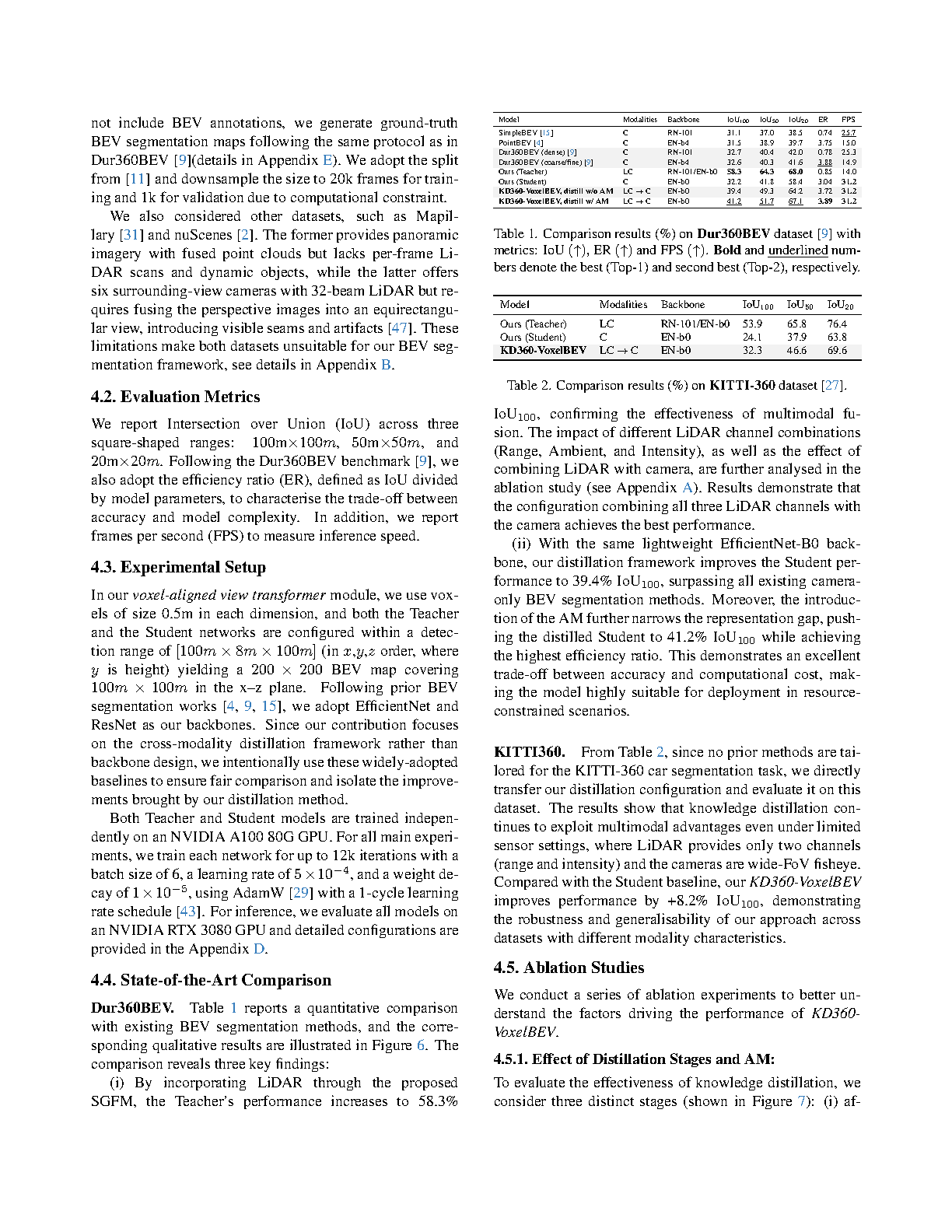

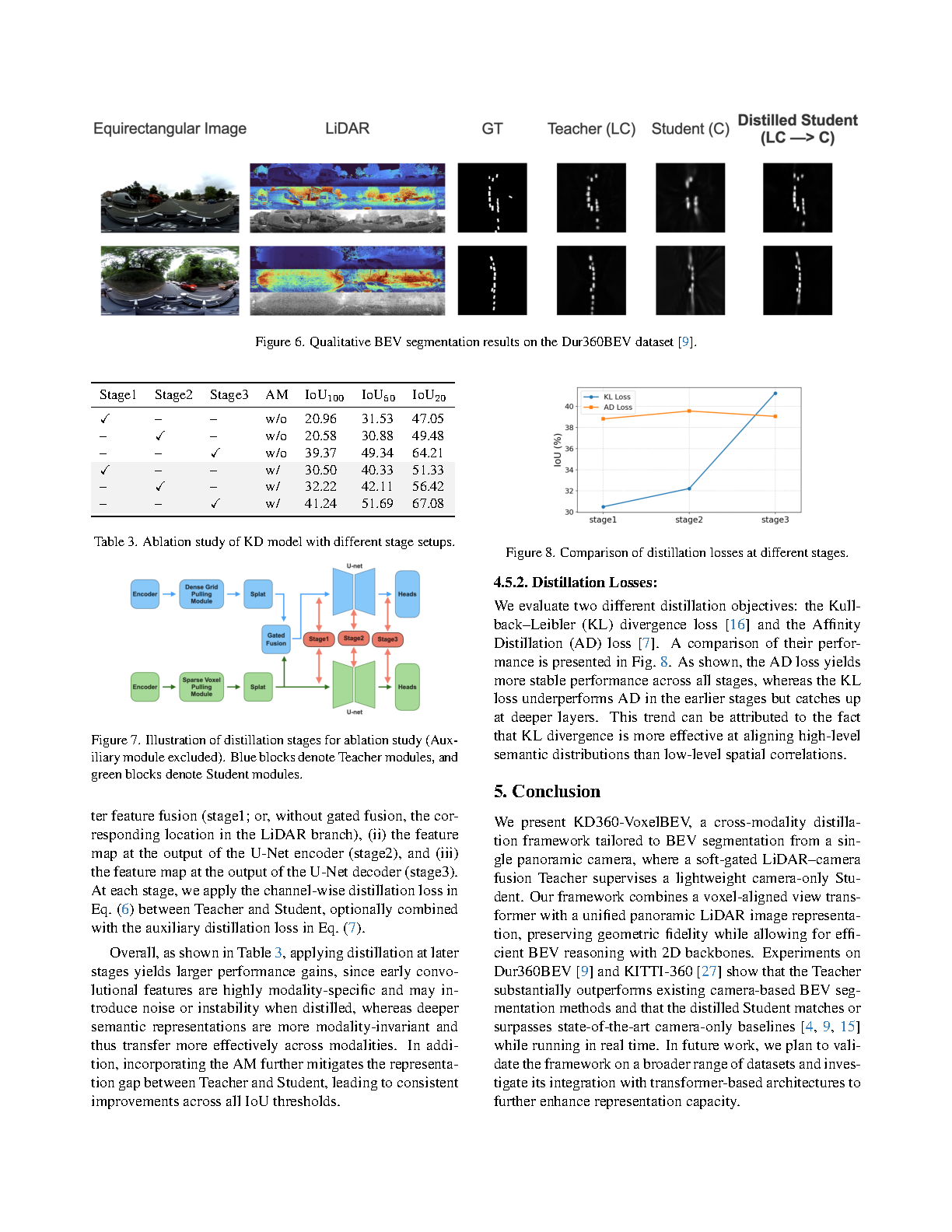

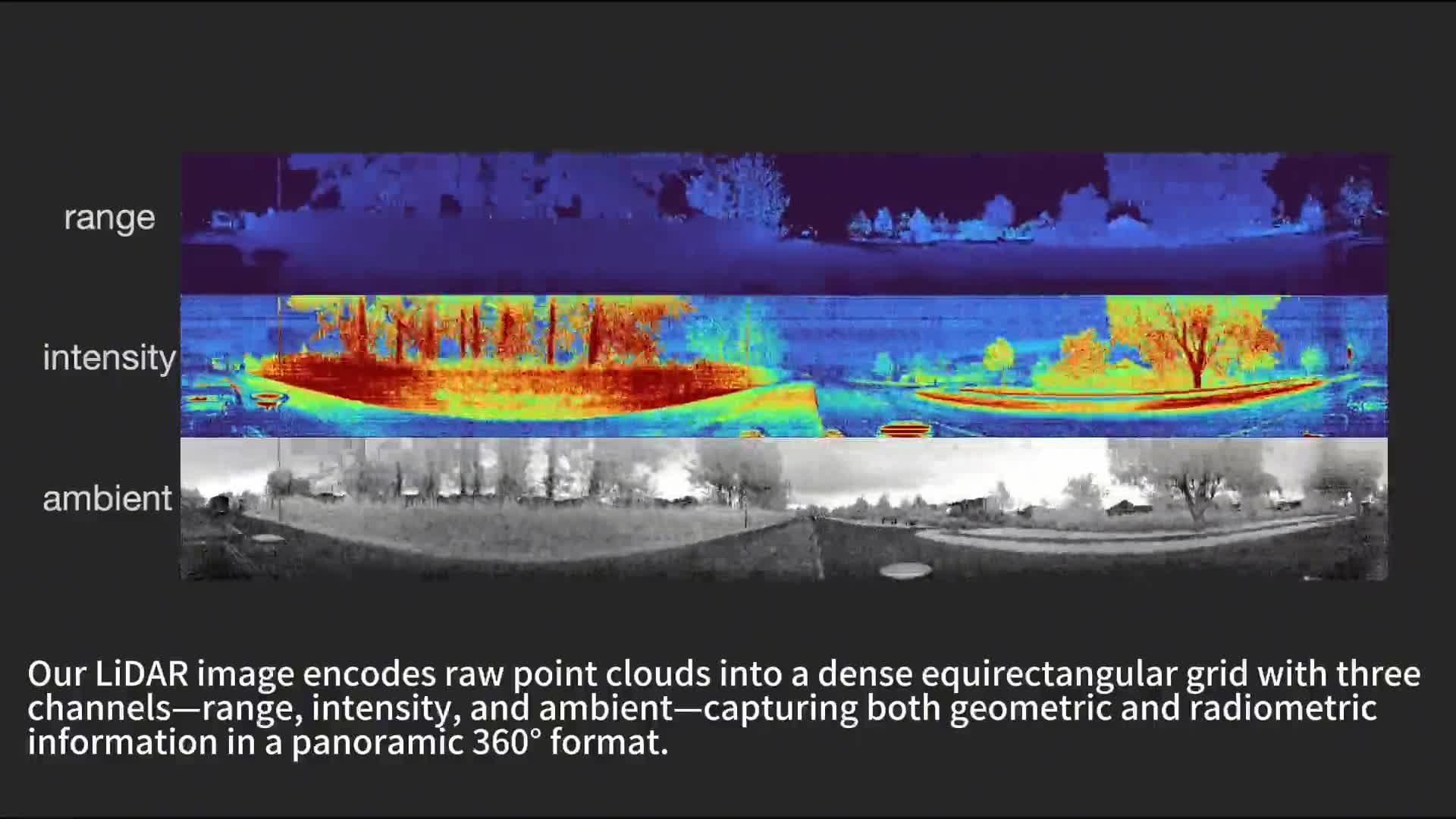

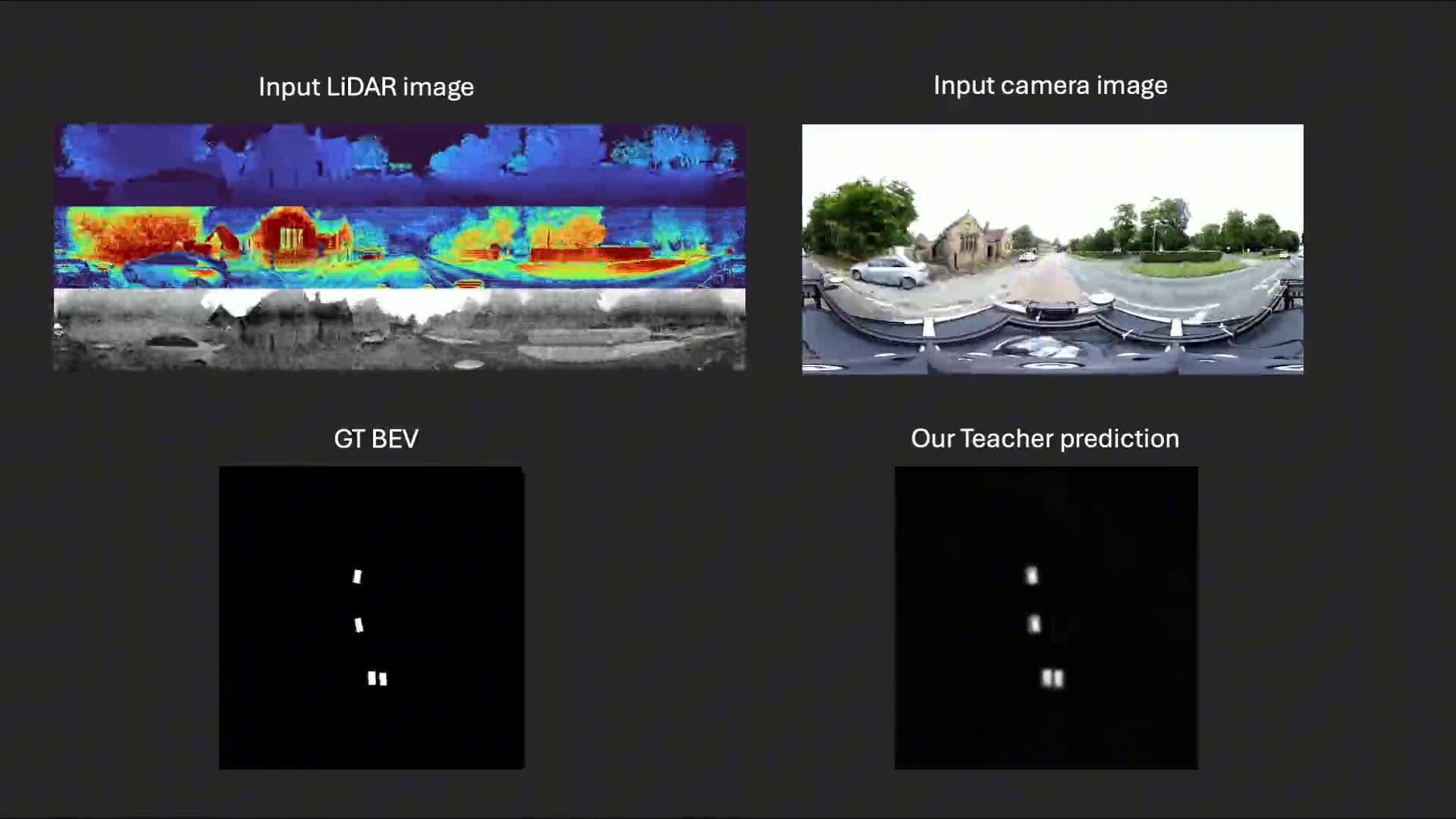

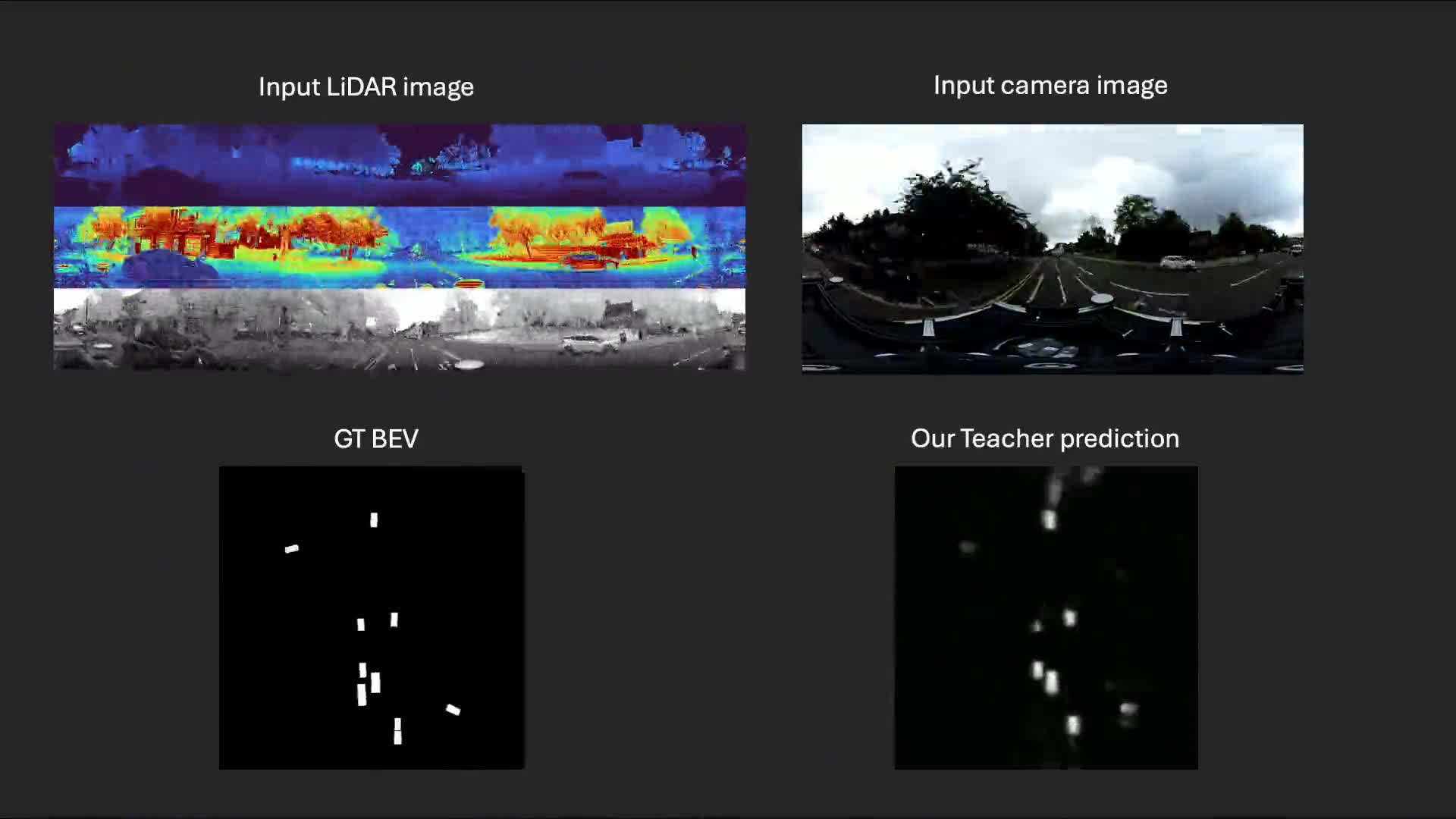

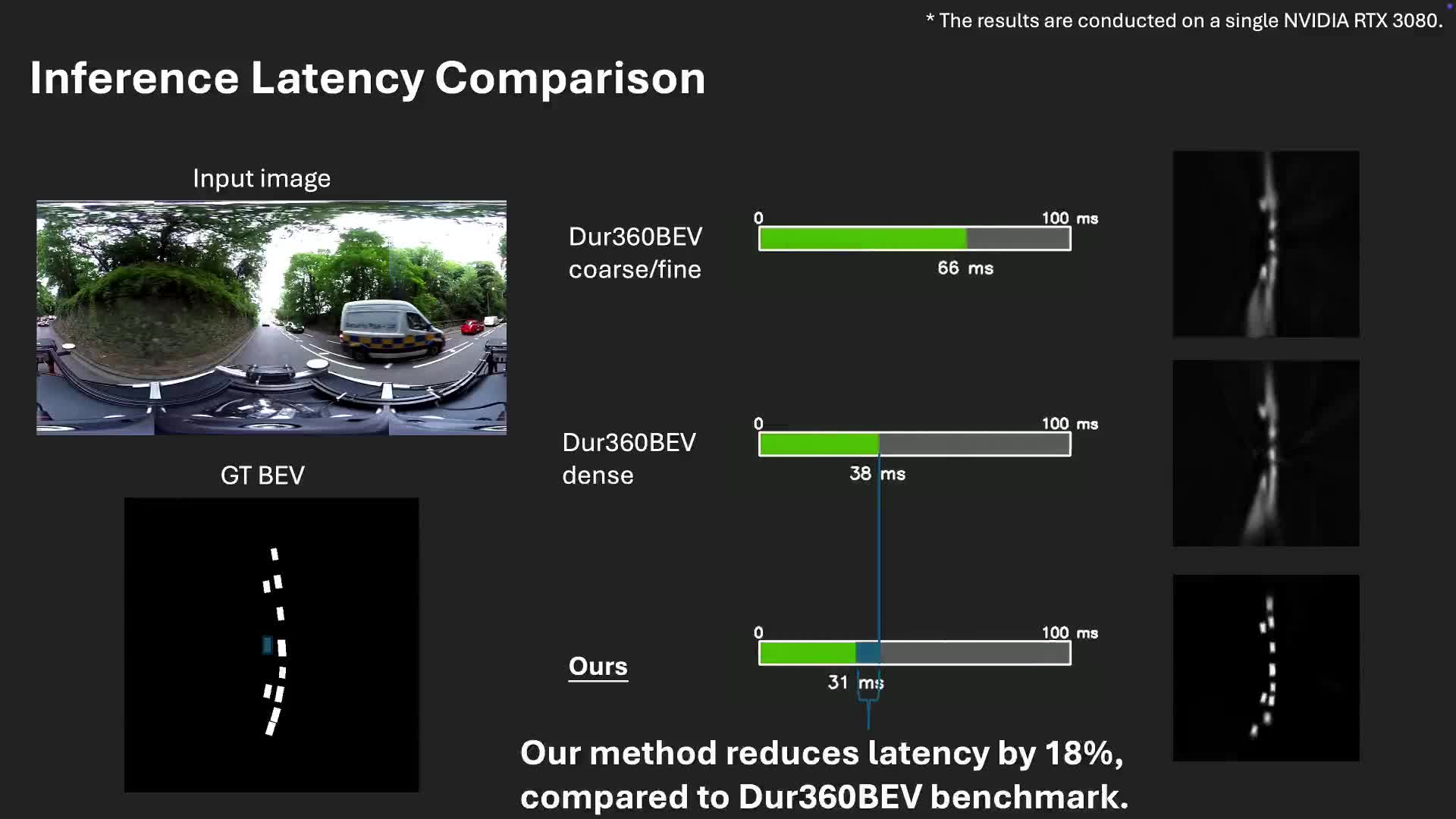

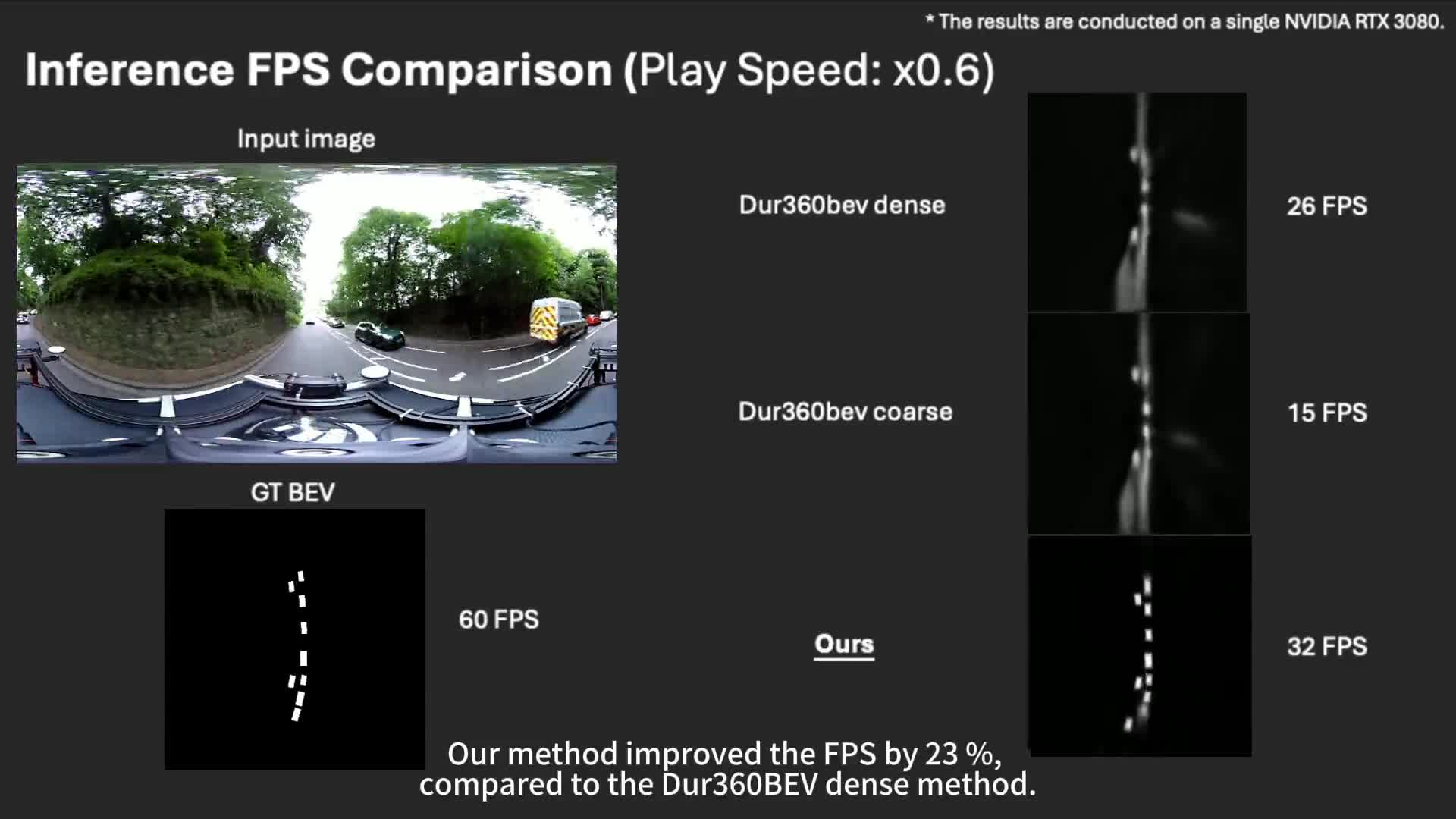

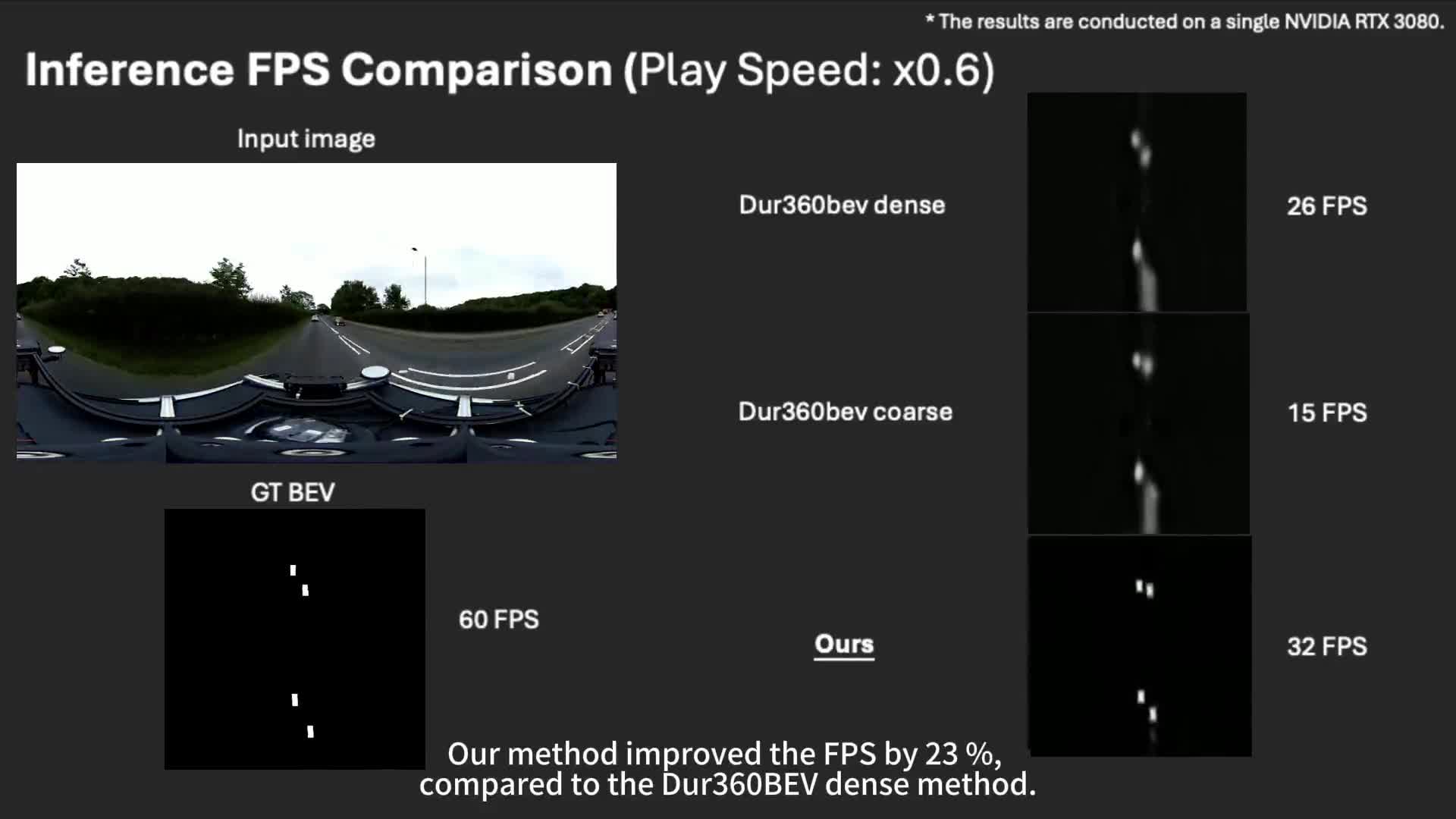

We present the first cross-modality distillation framework specifically tailored for single-panoramic-camera Bird’s-Eye-View (BEV) segmentation. Our approach leverages a novel LiDAR image representation fused from range, intensity and ambient channels, together with a voxel-aligned view transformer that preserves spatial fidelity while enabling efficient BEV processing. During training, a high-capacity LiDAR and camera fusion Teacher network extracts both rich spatial and semantic features for cross-modality knowledge distillation into a lightweight Student network that relies solely on a single 360-degree panoramic camera image. Extensive experiments on the Dur360BEV dataset demonstrate that our Teacher network significantly outperforms existing camera-based BEV segmentation methods, achieving a 25.6% IoU improvement. Meanwhile, the distilled Student network attains competitive performance with an 8.5% IoU gain and state-of-the-art inference speed of 31.2 FPS. Moreover, evaluations on KITTI-360 (two fisheye cameras) confirm that our distillation framework generalises to diverse camera setups, underscoring its feasibility and robustness. This approach reduces sensor complexity and deployment costs while providing a practical solution for efficient, low-cost BEV segmentation in real-world autonomous driving. The code is available at: https://github.com/Tom-E-Durham/KD360-VoxelBEV.
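To make the Teacher-to-Student idea concrete, the following is a minimal sketch of a generic feature-mimicking distillation objective, not the loss used in this paper (the abstract does not specify it). It assumes the Student is trained with a binary cross-entropy segmentation term on flattened BEV cells plus a mean-squared-error term pulling Student BEV features towards frozen Teacher features. The function names, the flattened-list representation and the `kd_weight` parameter are all hypothetical and introduced for illustration only.

```python
import math


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy over flattened BEV cells."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(probs)


def feature_mse(student_feat, teacher_feat):
    """Mean squared error between flattened Student and Teacher BEV features."""
    squared = [(s - t) ** 2 for s, t in zip(student_feat, teacher_feat)]
    return sum(squared) / len(squared)


def distillation_loss(student_probs, targets, student_feat, teacher_feat,
                      kd_weight=1.0):
    """Segmentation loss plus a weighted feature-mimicking term.

    Assumption: Teacher features are treated as fixed targets; kd_weight
    is a hypothetical hyperparameter balancing the two terms.
    """
    seg = bce_loss(student_probs, targets)
    kd = feature_mse(student_feat, teacher_feat)
    return seg + kd_weight * kd


if __name__ == "__main__":
    loss = distillation_loss(
        student_probs=[0.9, 0.2, 0.7],
        targets=[1, 0, 1],
        student_feat=[0.1, 0.4, 0.3],
        teacher_feat=[0.2, 0.5, 0.1],
        kd_weight=0.5,
    )
    print(f"total loss: {loss:.4f}")
```

In this sketch the Teacher is only needed at training time to supply `teacher_feat`; at inference the Student runs alone on the panoramic image, which is what allows the LiDAR sensor to be dropped at deployment.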