Makeup Style Transfer on Low-Quality Images with Weighted Multi-Scale Attention

Daniel Organisciak, Edmond S. L. Ho and Hubert P. H. Shum

Proceedings of the 2020 International Conference on Pattern Recognition (ICPR), 2020

Abstract

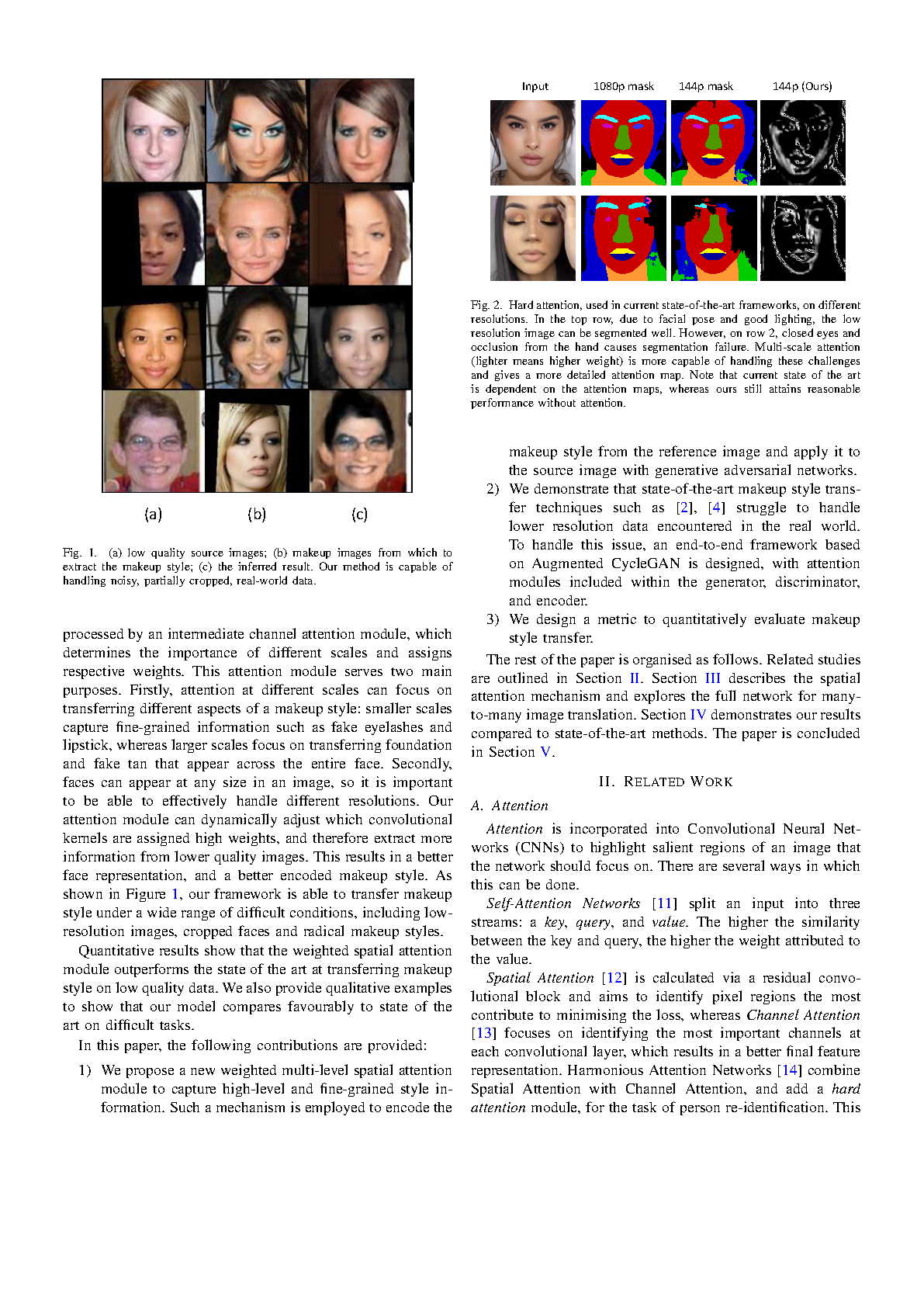

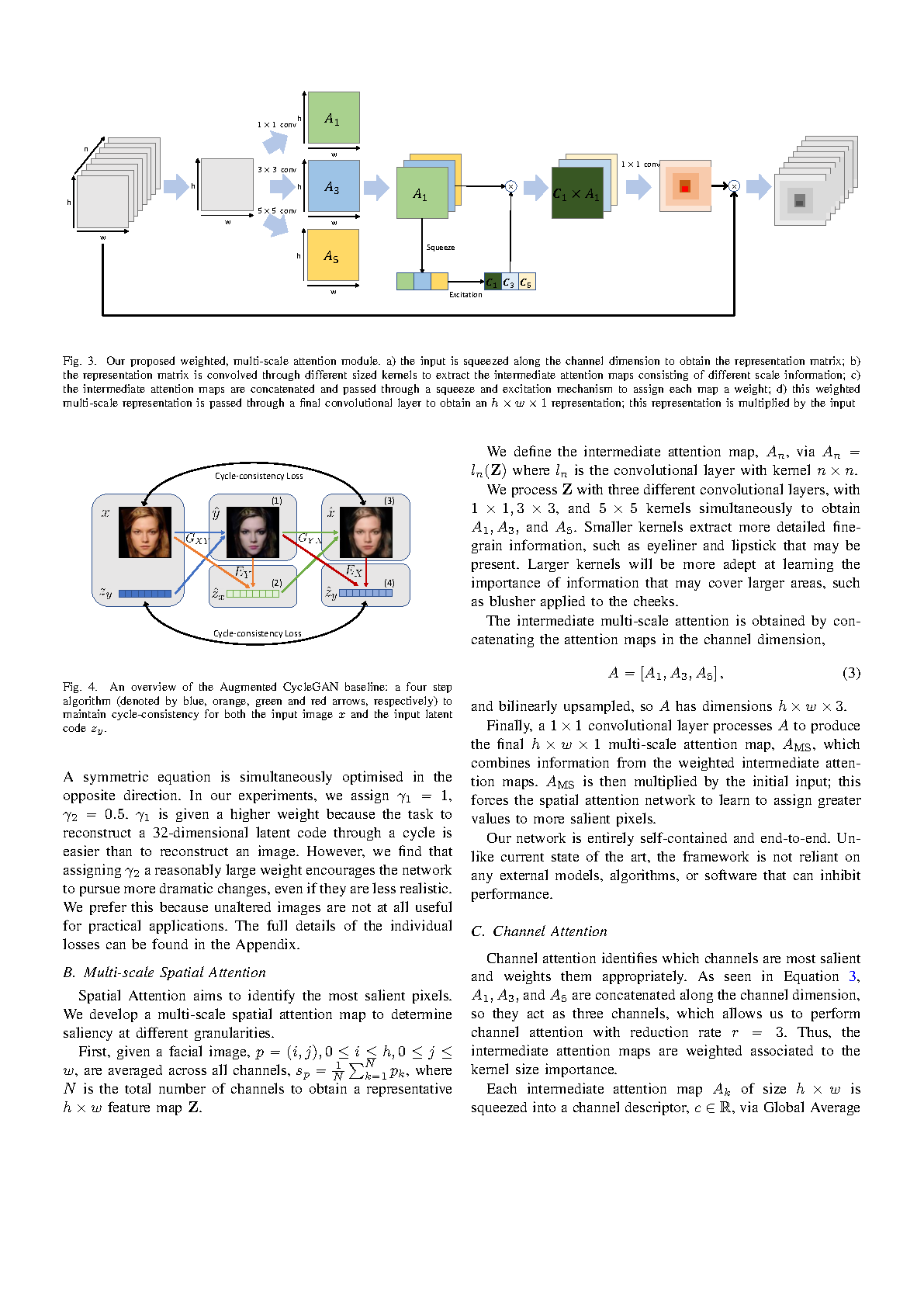

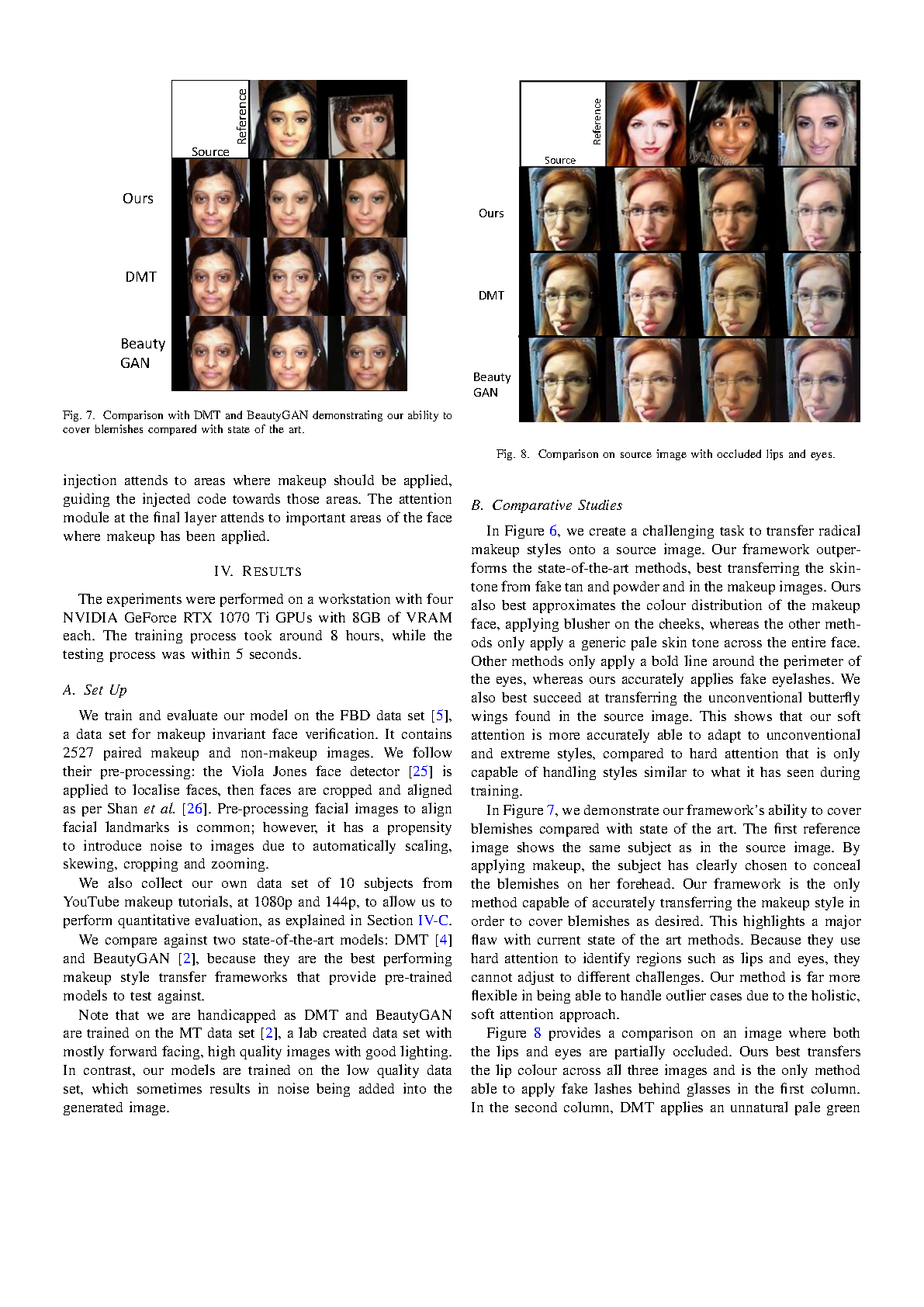

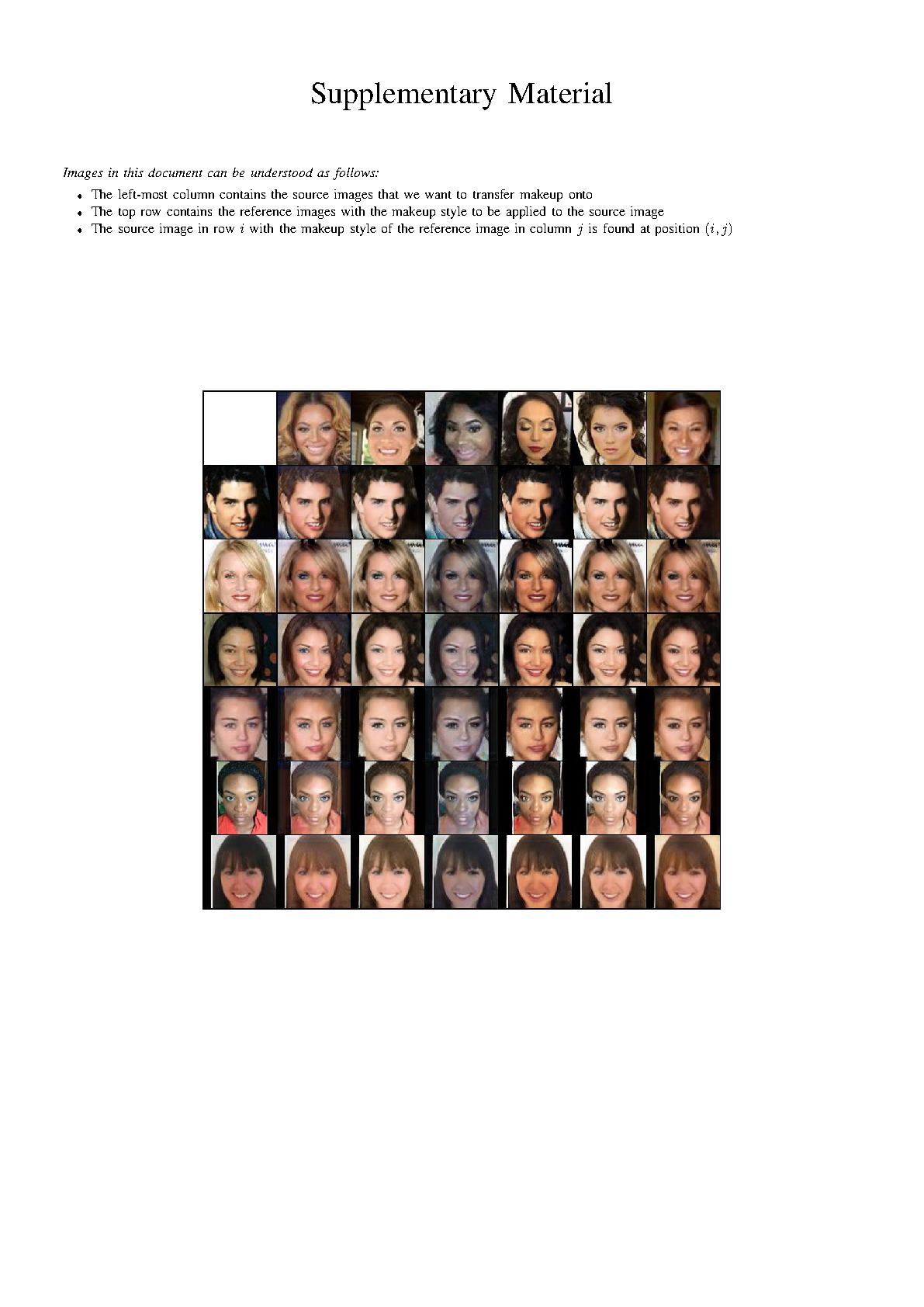

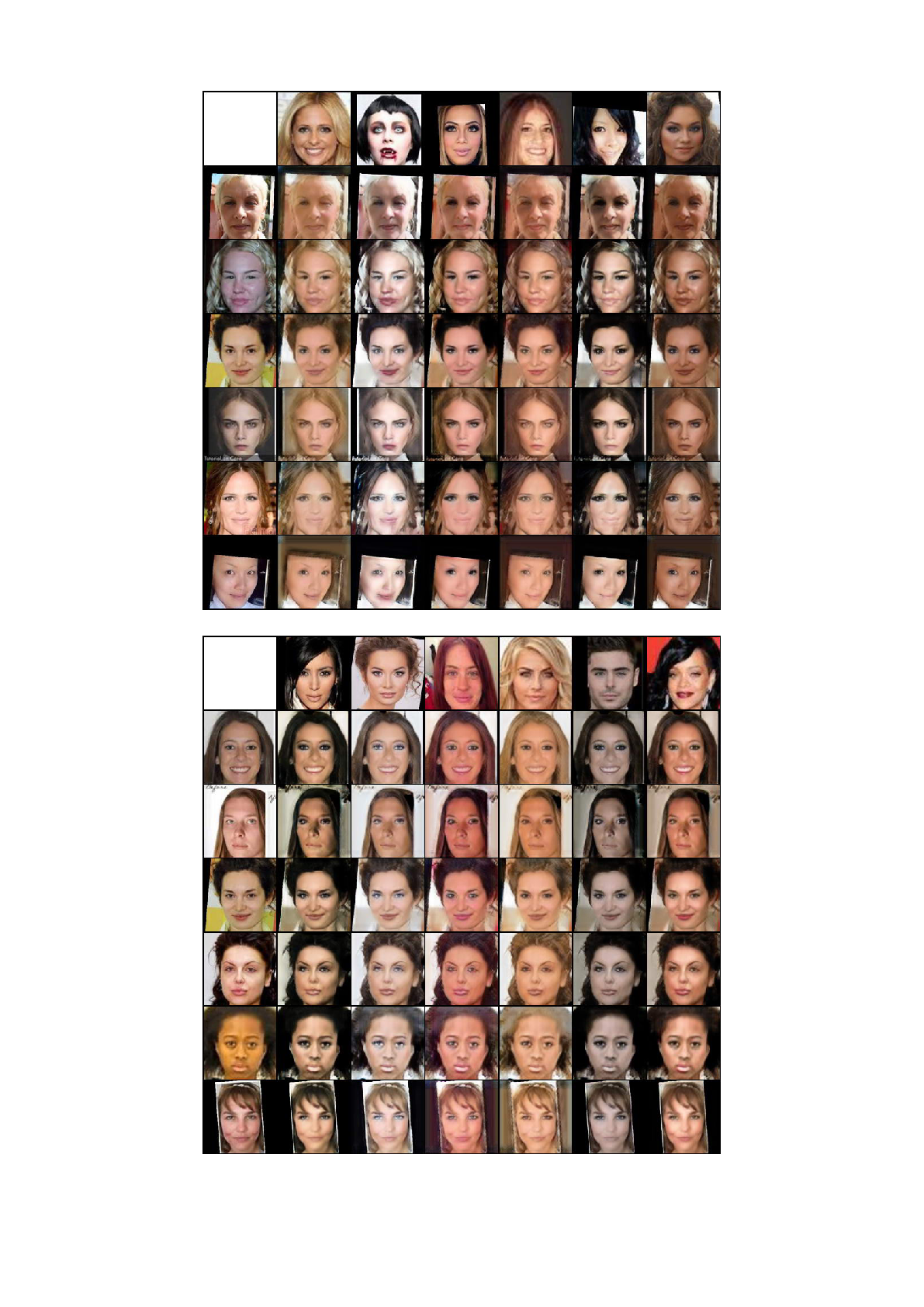

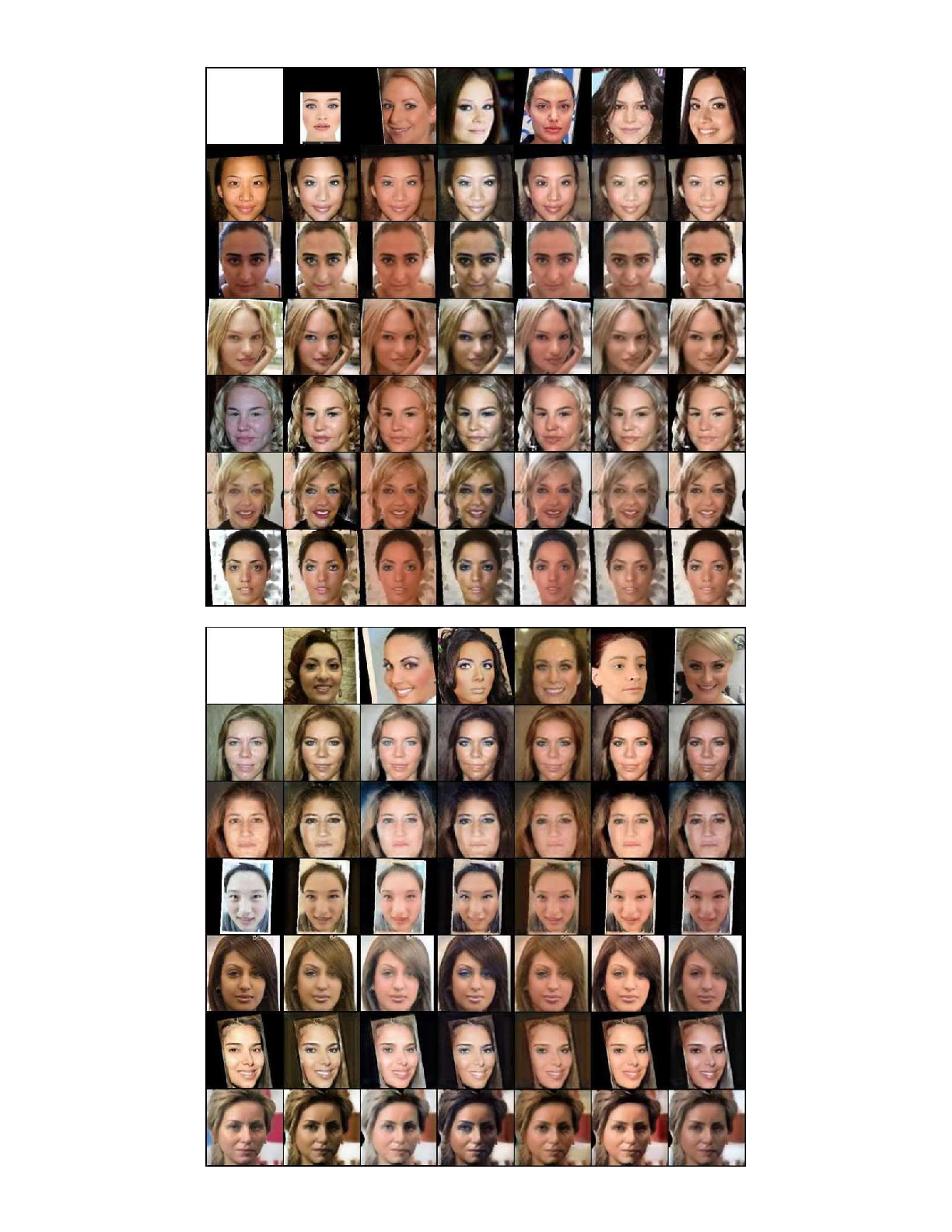

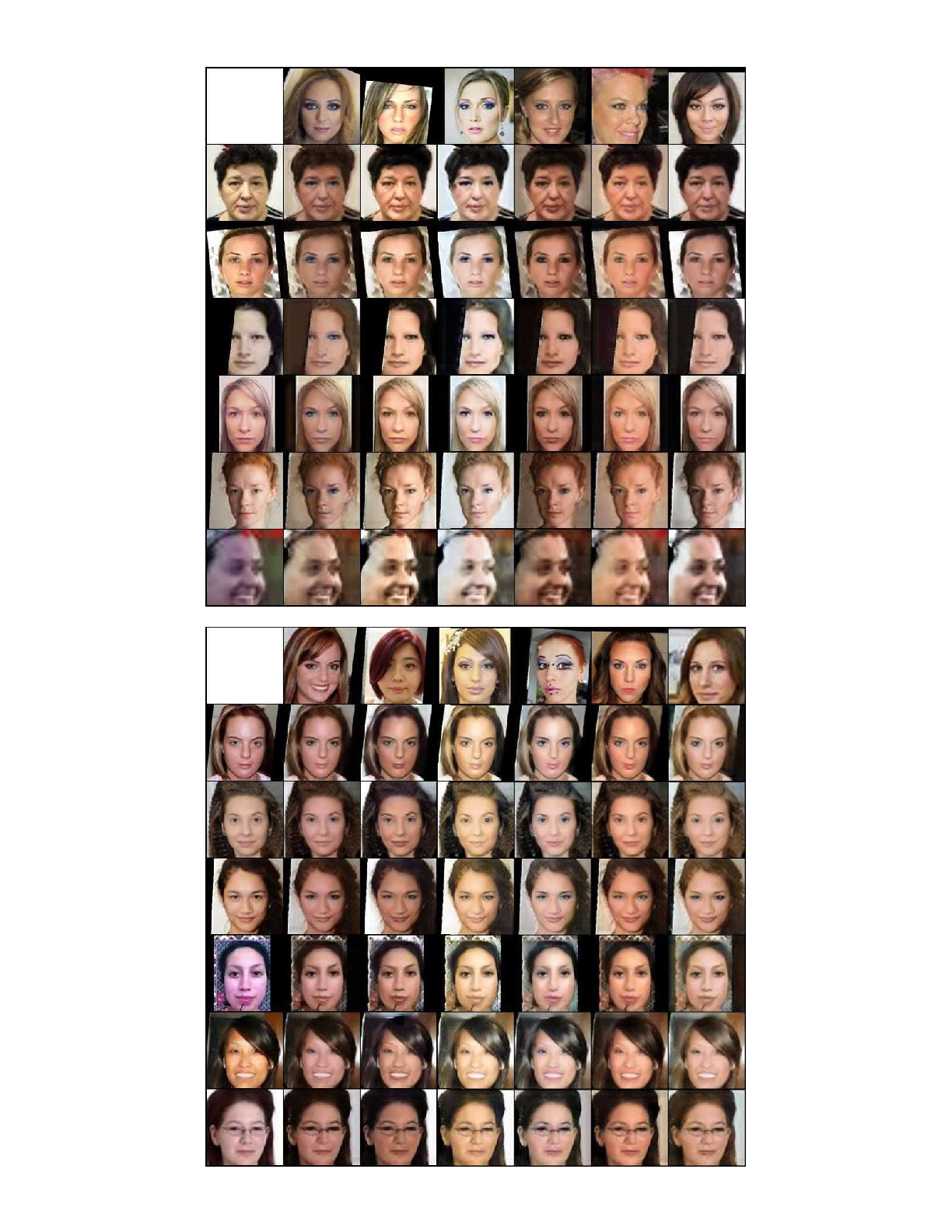

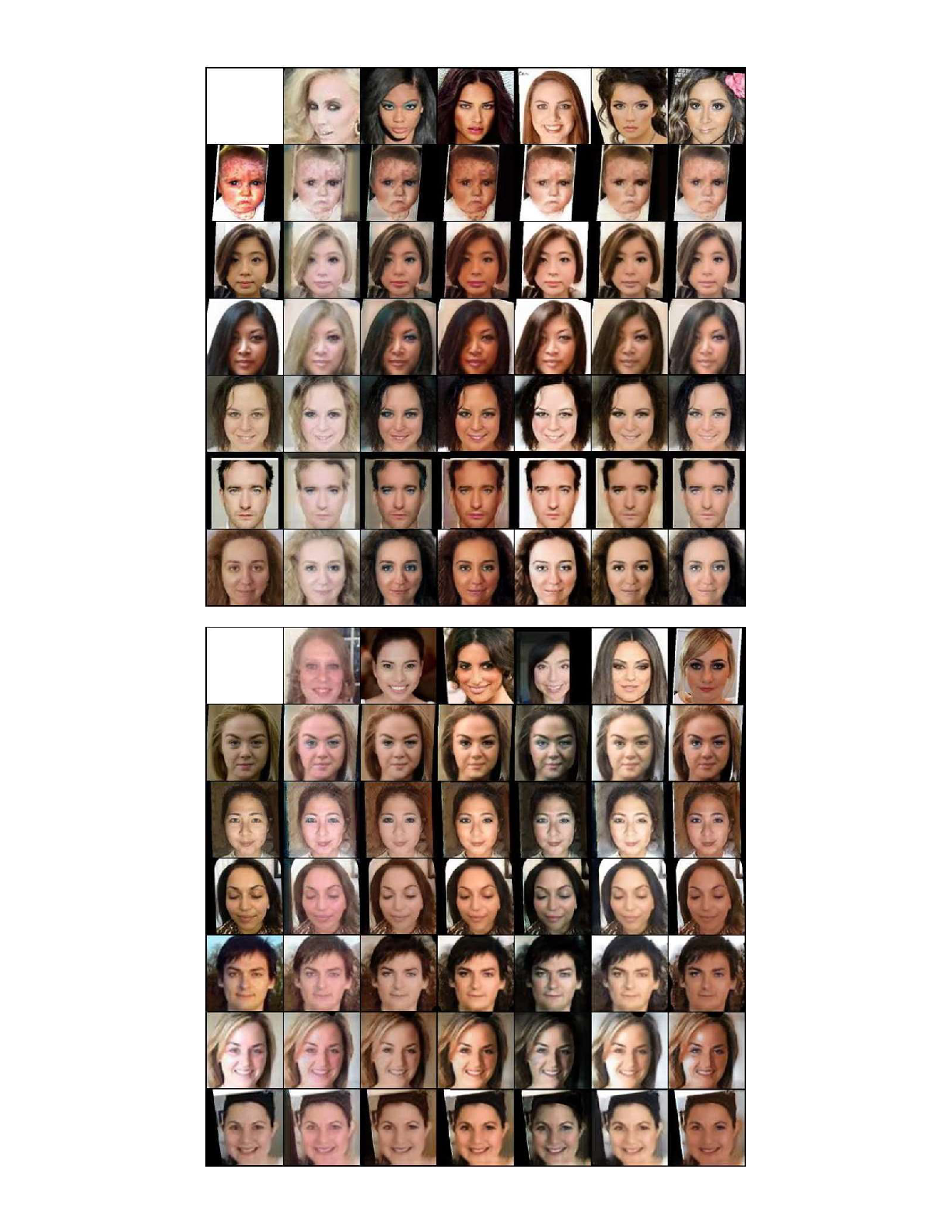

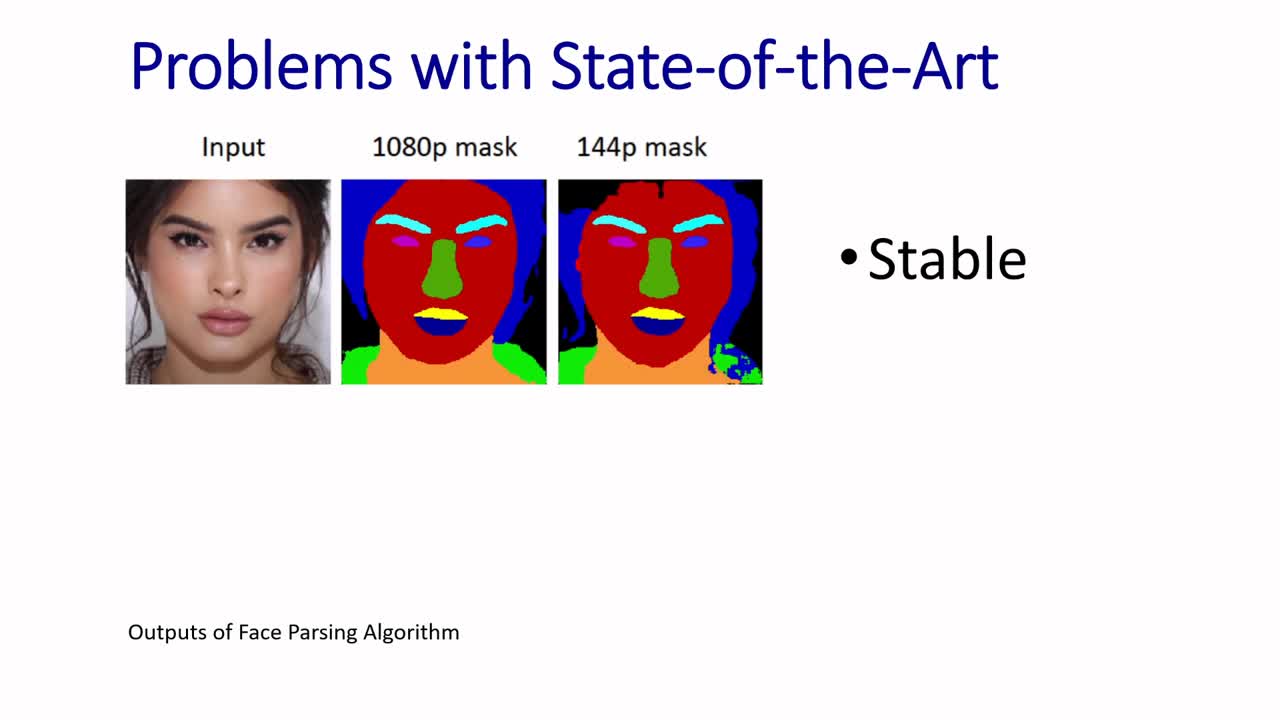

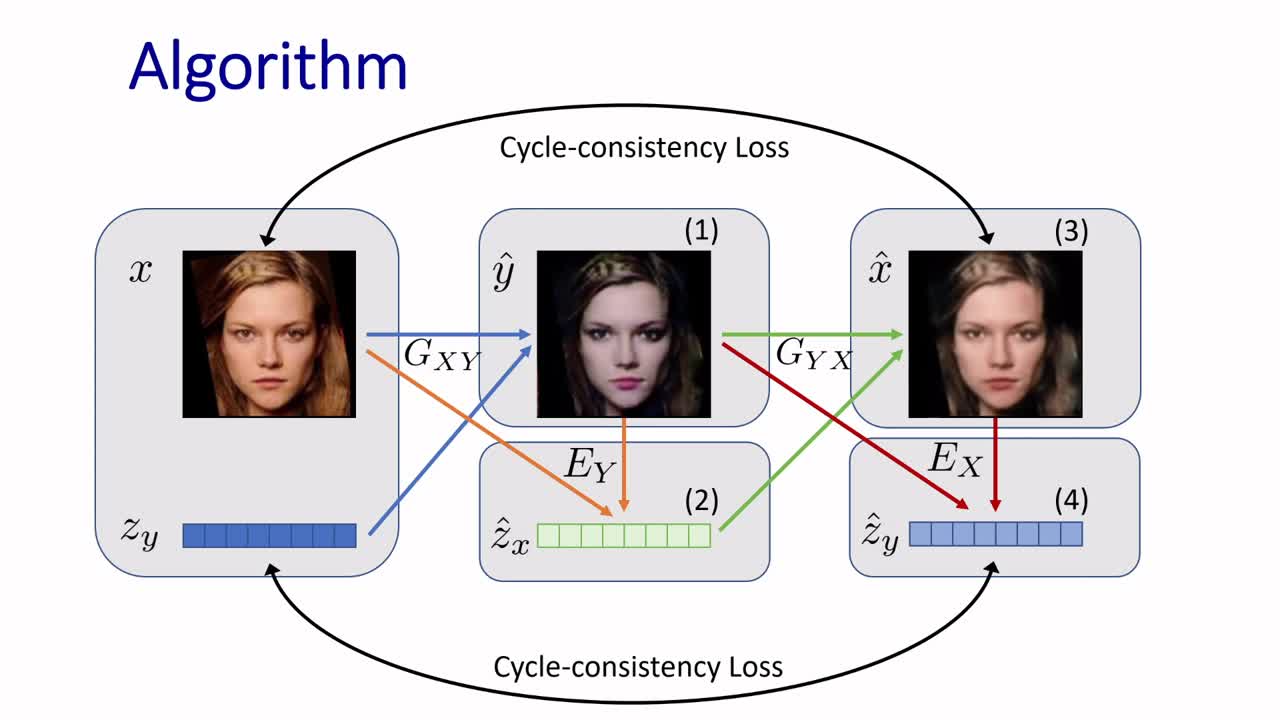

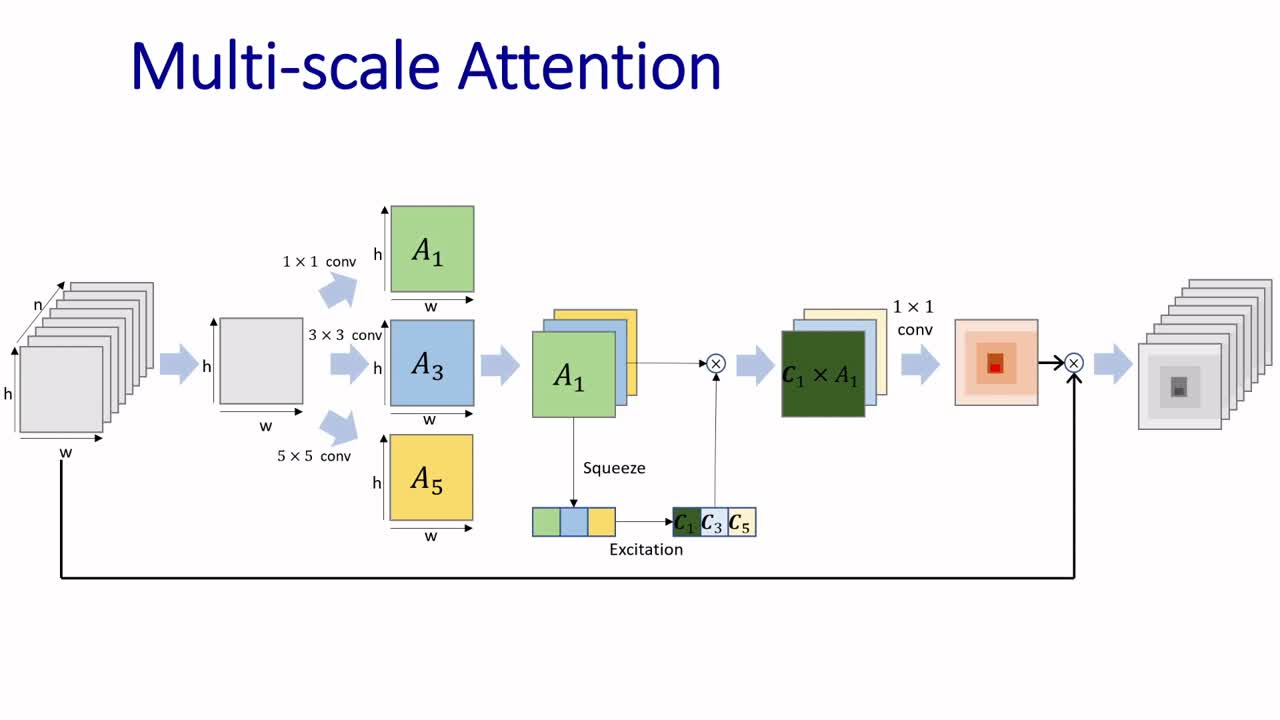

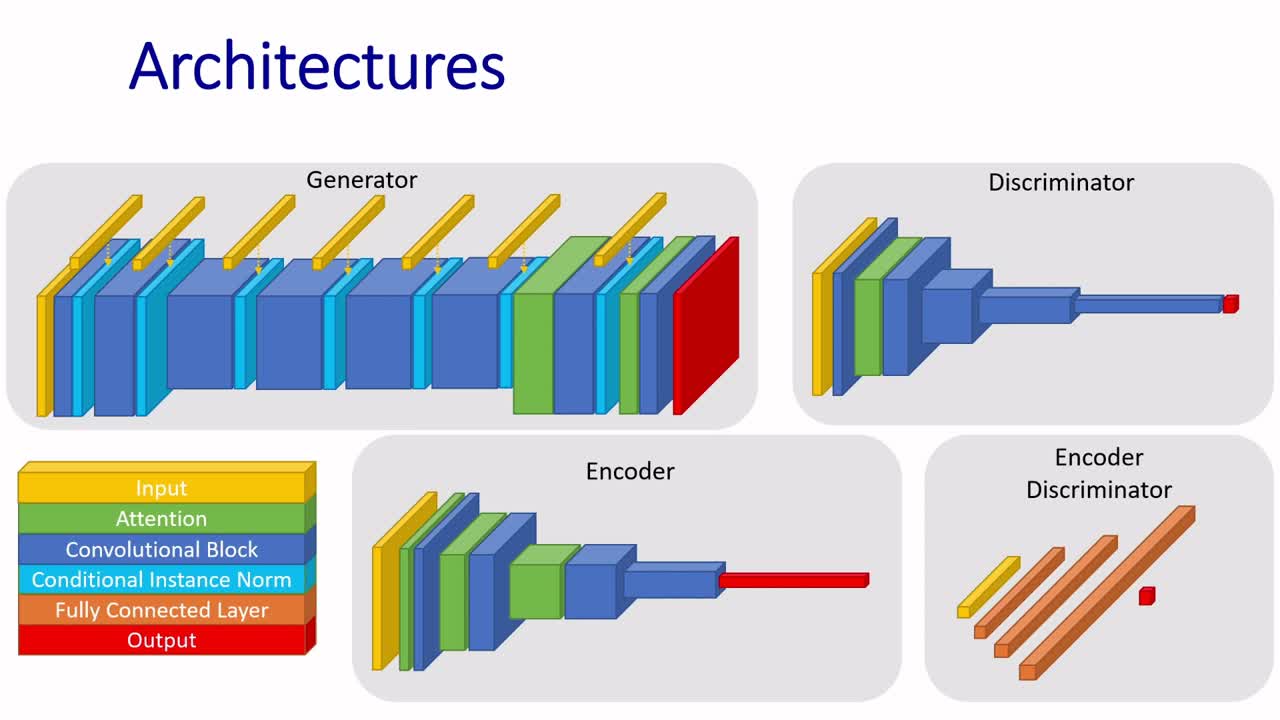

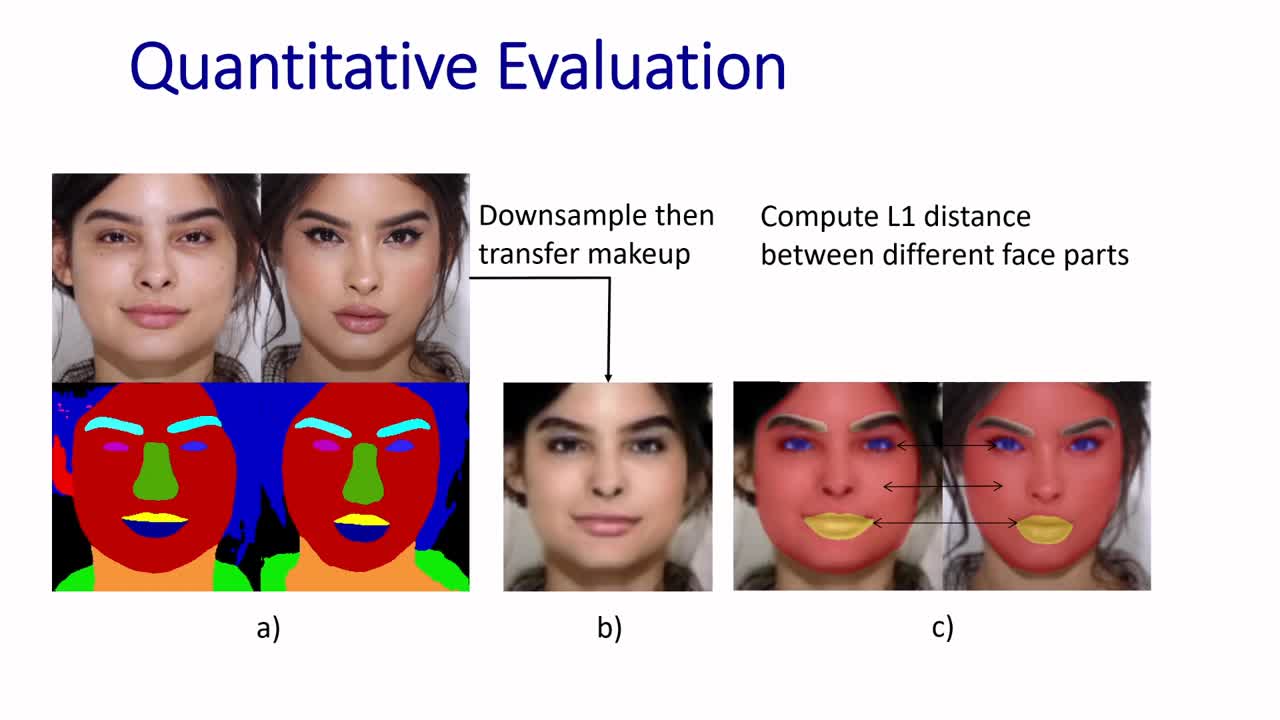

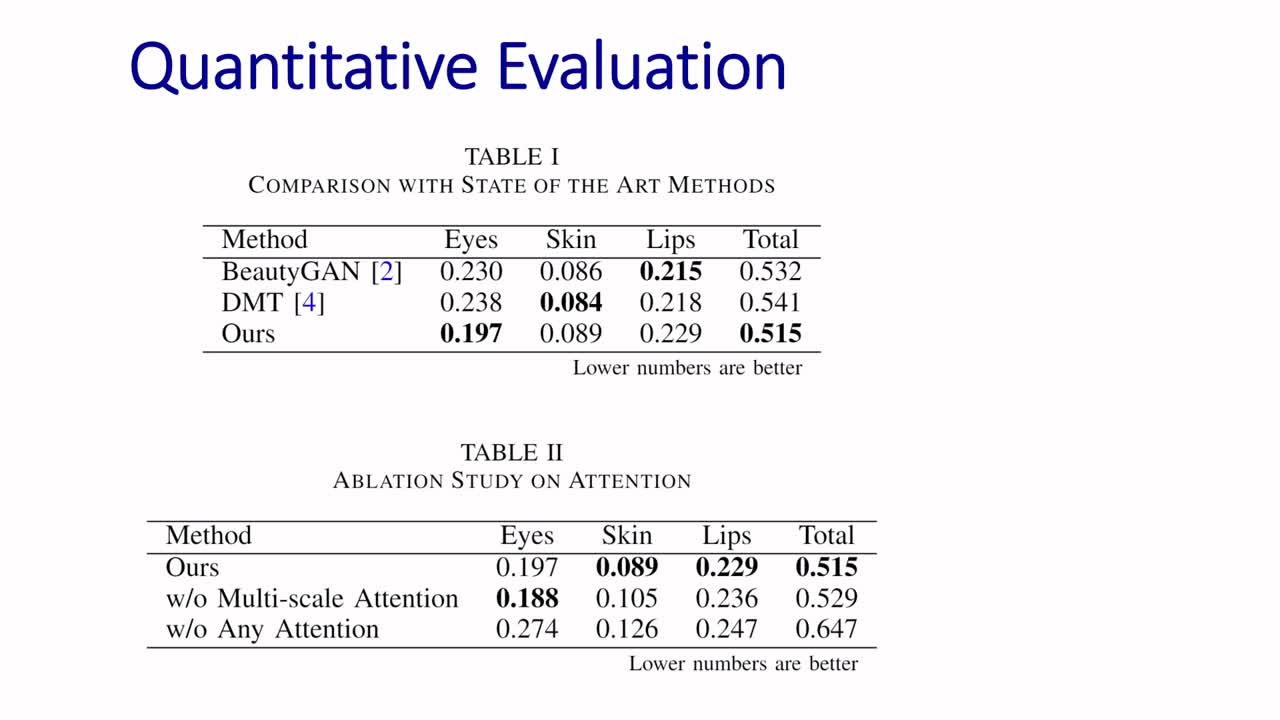

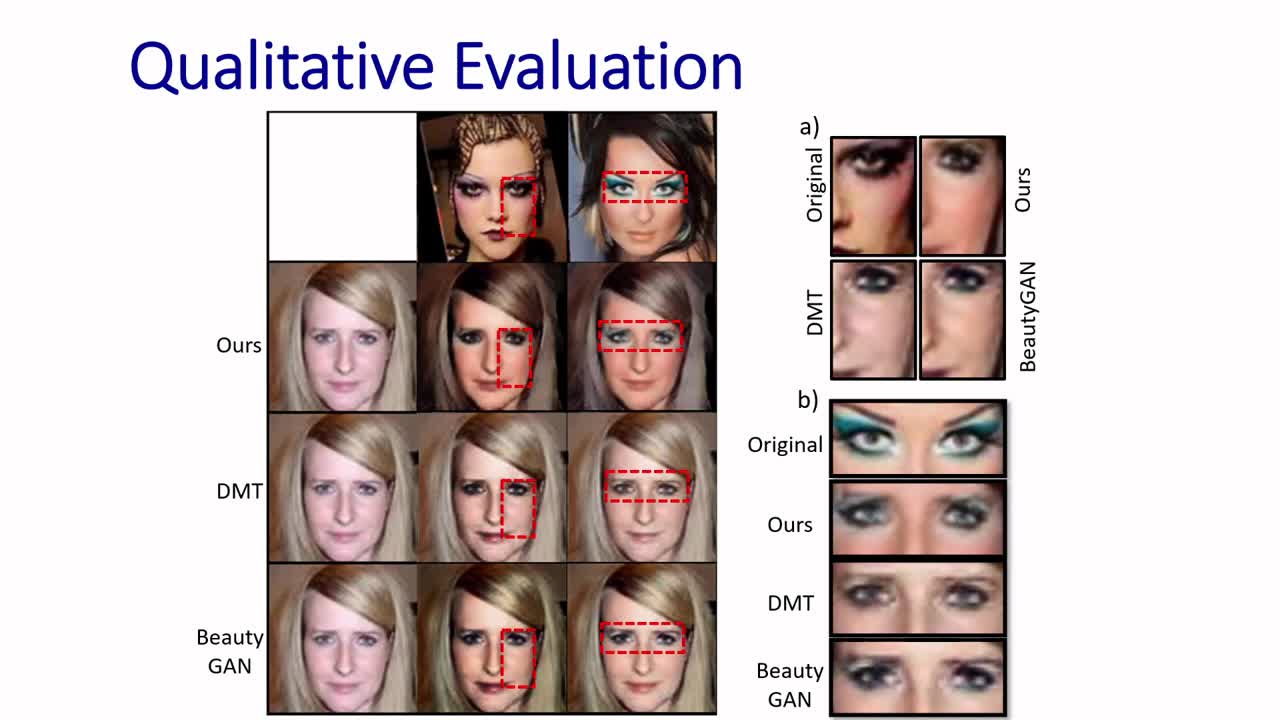

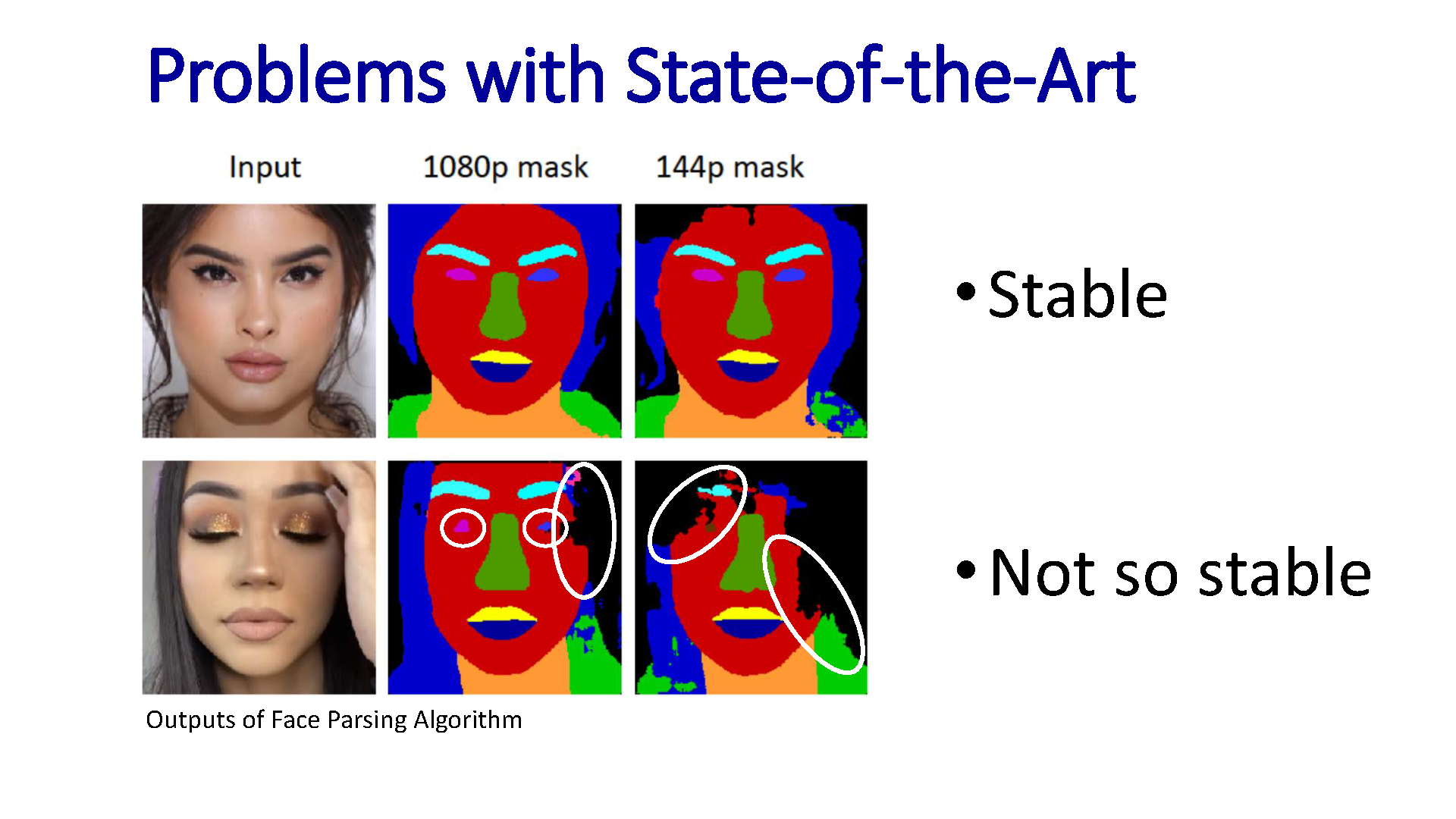

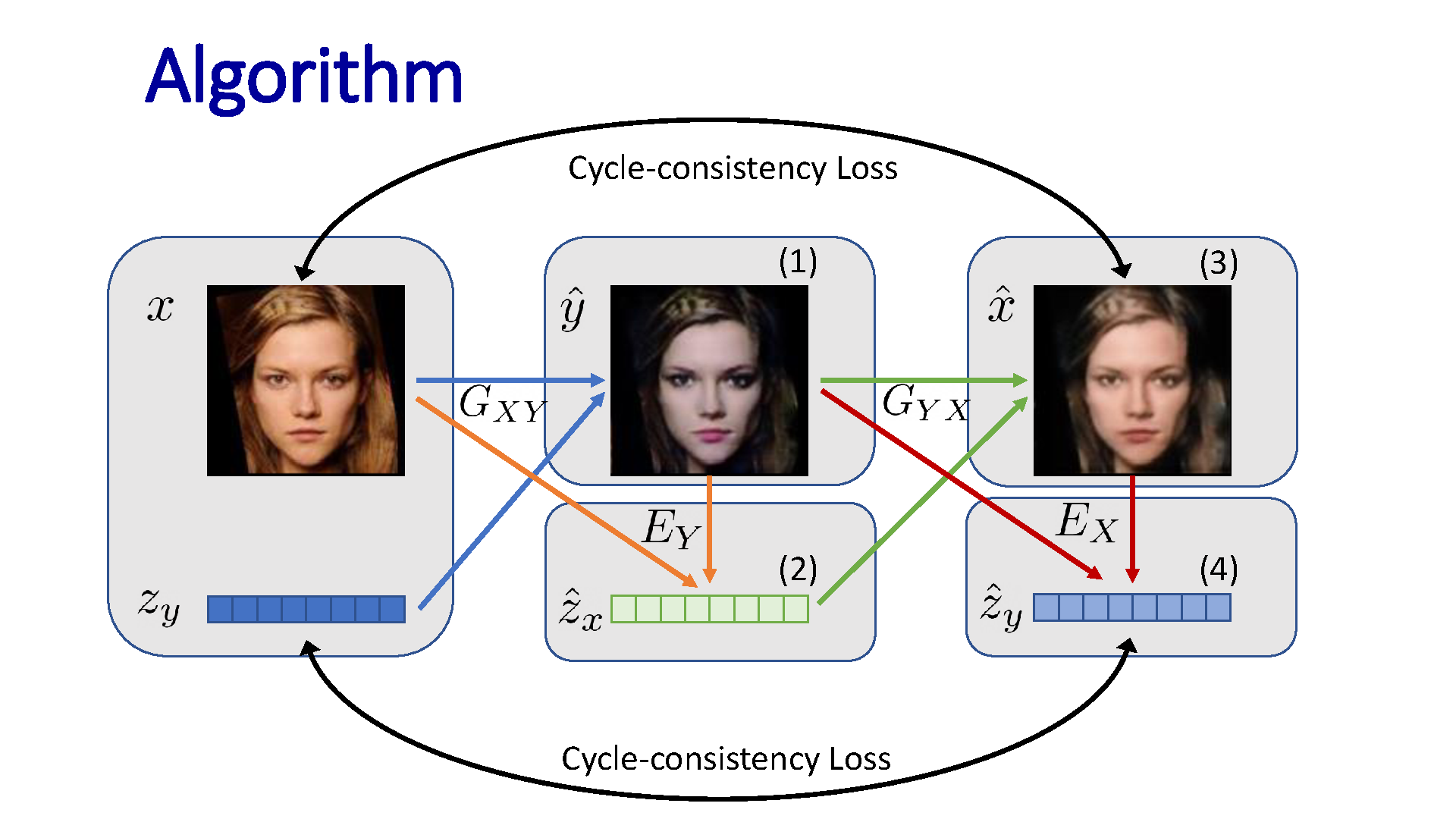

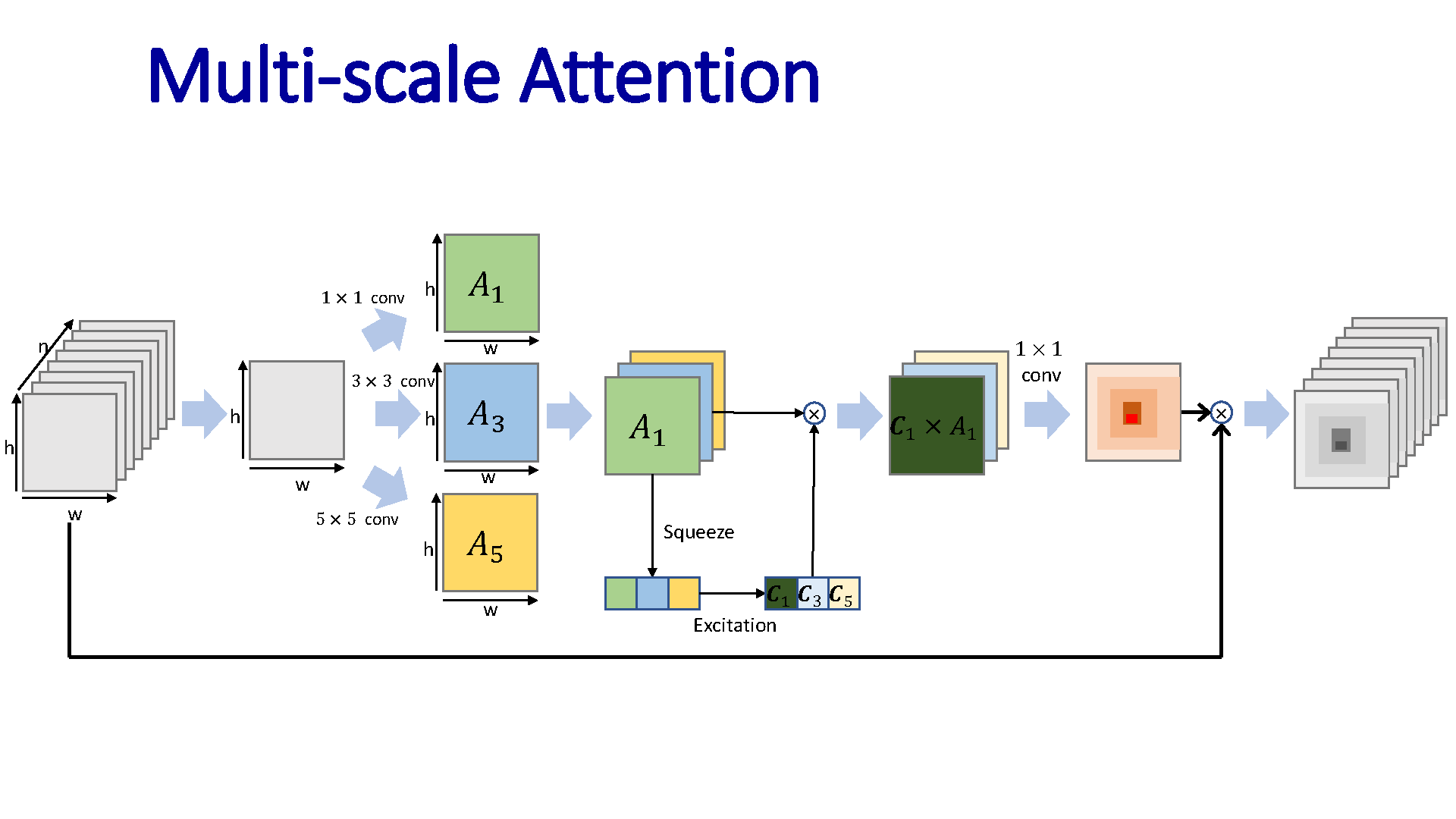

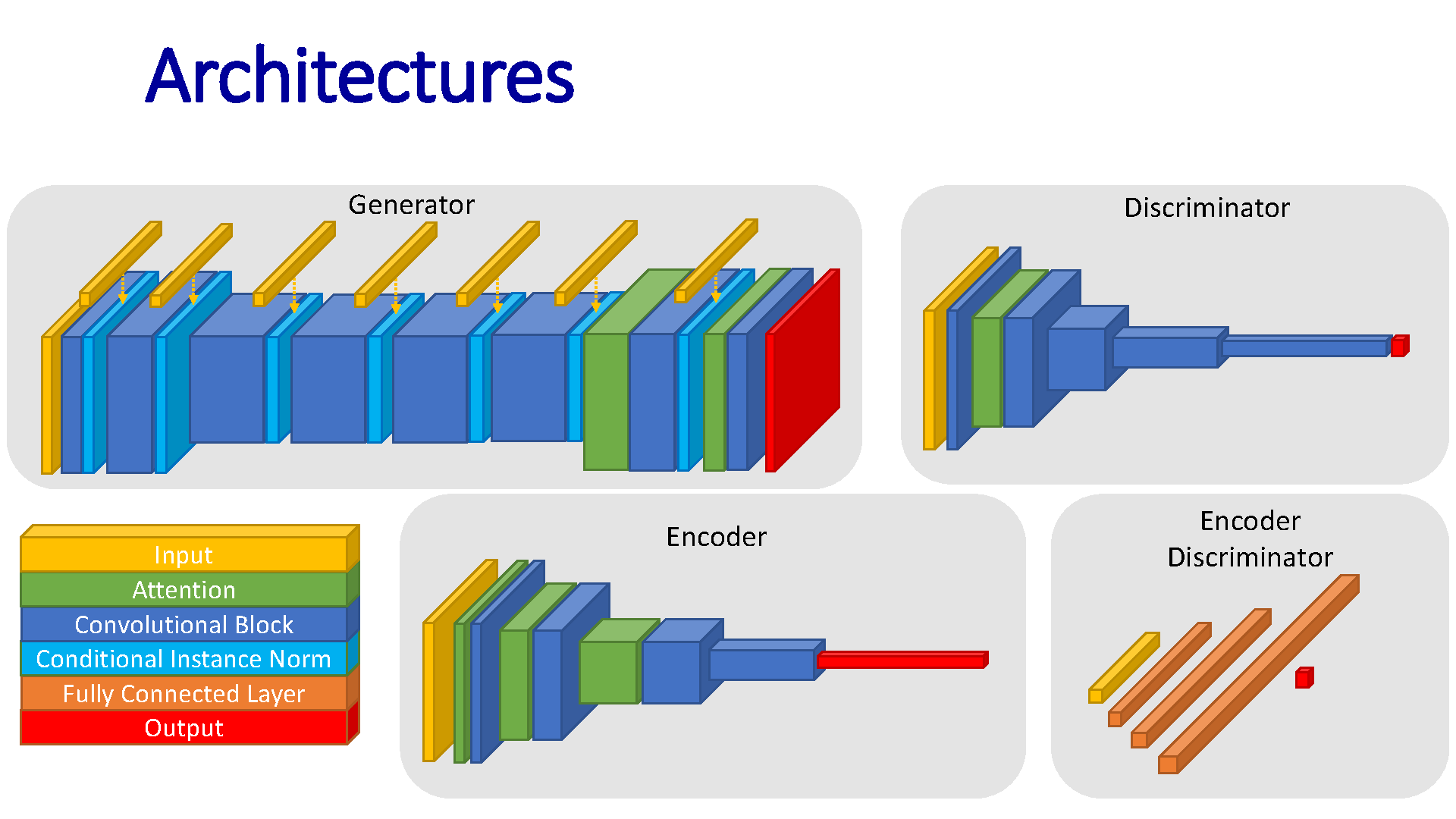

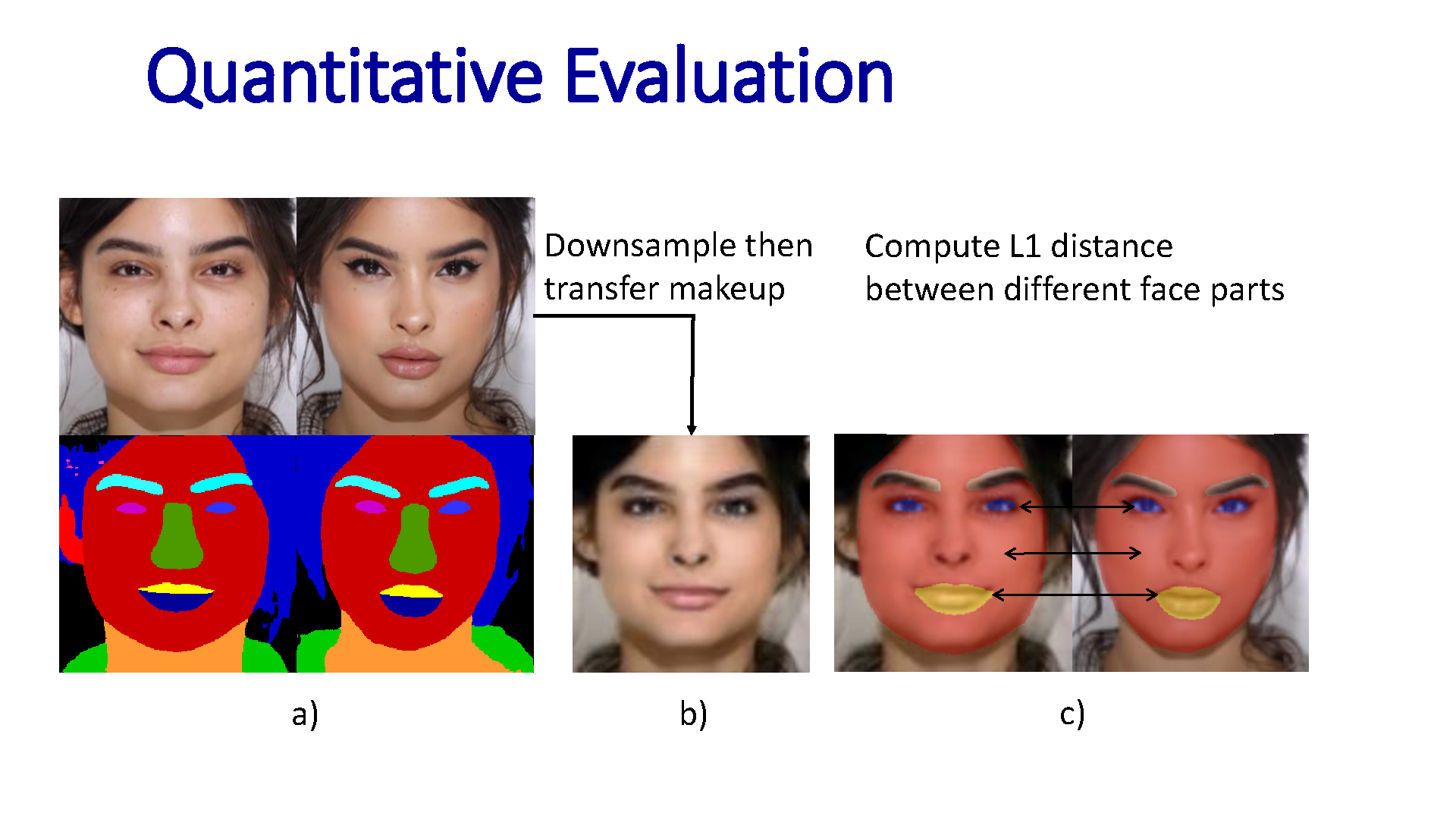

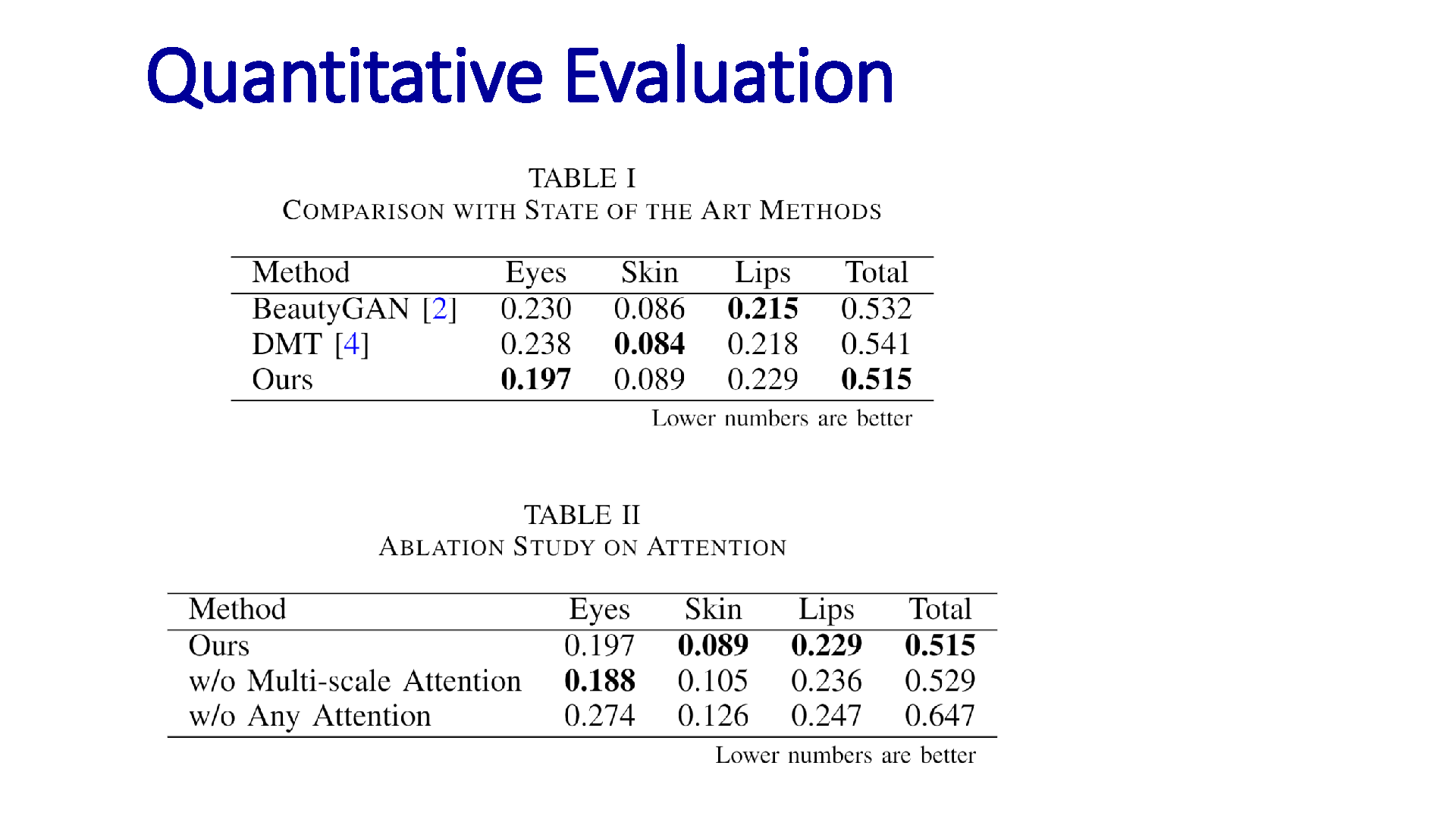

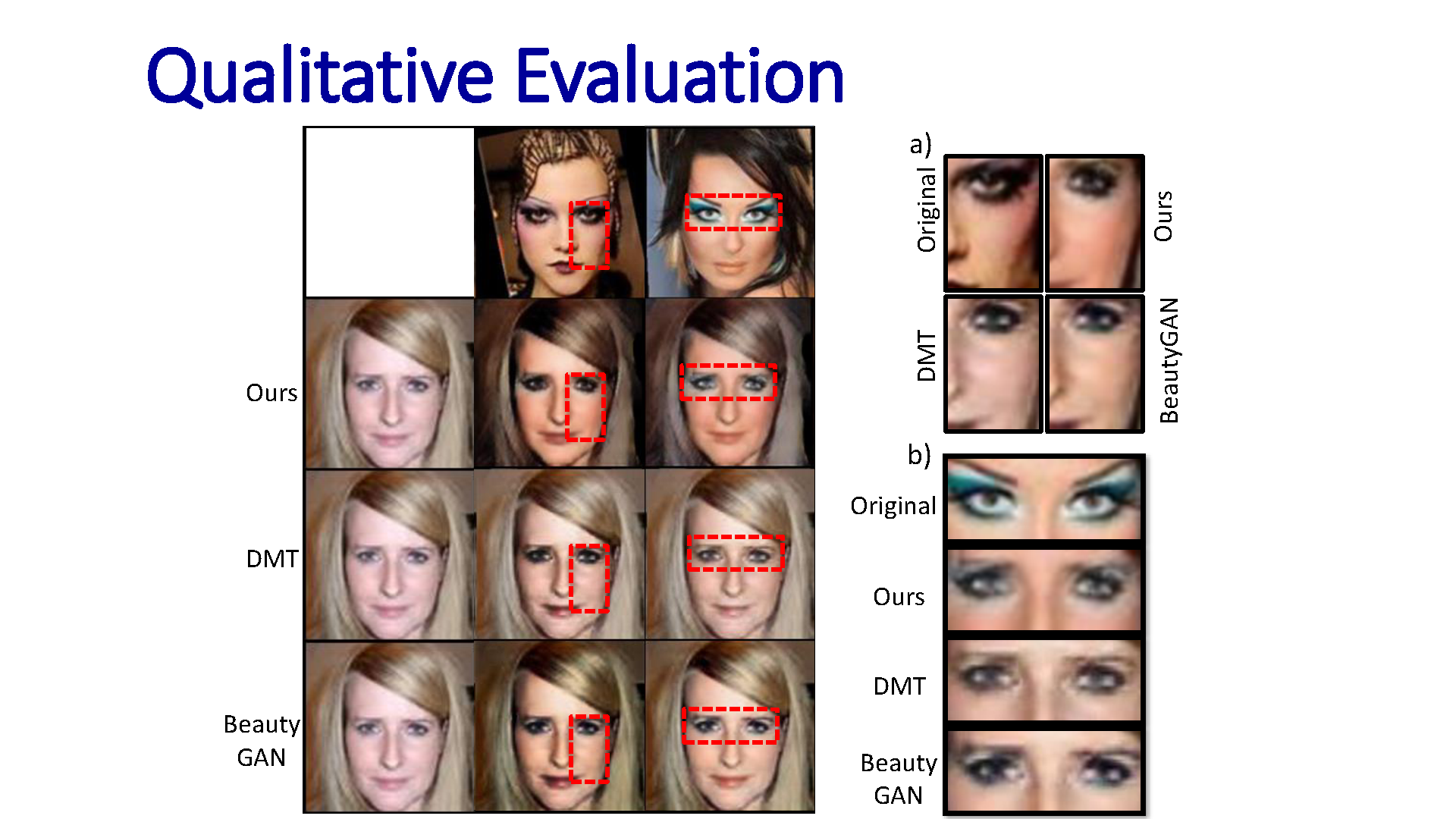

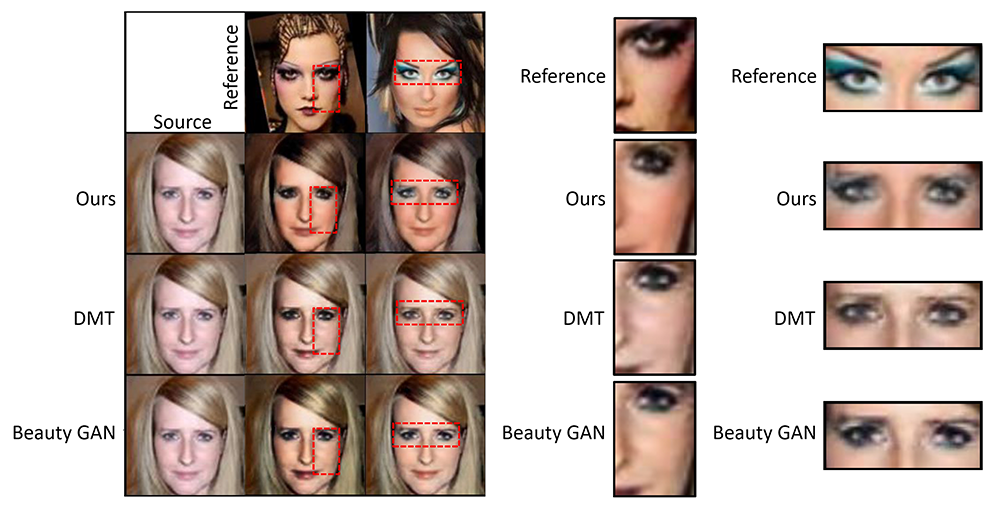

Facial makeup style transfer is an extremely challenging sub-field of image-to-image-translation. Due to this difficulty, state-of-the-art results are mostly reliant on the Face Parsing Algorithm, which segments a face into parts in order to easily extract makeup features. However, this algorithm can only work well on high-definition images where facial features can be accurately extracted. Faces in many real-world photos, such as those including a large background or multiple people, are typically of low-resolution, which considerably hinders state-of- the-art algorithms. In this paper, we propose an end-to-end holistic approach to effectively transfer makeup styles between two low-resolution images. The idea is built upon a novel weighted multi-scale spatial attention module, which identifies salient pixel regions on low-resolution images in multiple scales, and uses channel attention to determine the most effective attention map. This design provides two benefits: low-resolution images are usually blurry to different extents, so a multi-scale architecture can select the most effective convolution kernel size to implement spatial attention; makeup is applied on both a macro-level (foundation, fake tan) and a micro-level (eyeliner, lipstick) so different scales can excel in extracting different makeup features. We develop an Augmented CycleGAN network that embeds our attention modules at selected layers to most effectively transfer makeup. Our system is tested with the FBD data set, which consists of many low-resolution facial images, and demonstrate that it outperforms state-of-the-art methods, particularly in transferring makeup for blurry images and partially occluded images.

Downloads

YouTube

Cite This Research

Supporting Grants

Received from Faculty of Engineering and Environment, Northumbria University, UK, 2018-2021

Project Page