A Unified Deep Metric Representation for Mesh Saliency Detection and Non-Rigid Shape Matching

Shanfeng Hu, Hubert P. H. Shum, Nauman Aslam, Frederick W. B. Li and Xiaohui Liang

IEEE Transactions on Multimedia (TMM), 2020

REF 2021 Submitted Output

Impact Factor: 9.7†

Top 10% Journal in Computer Science, Software Engineering†

Abstract

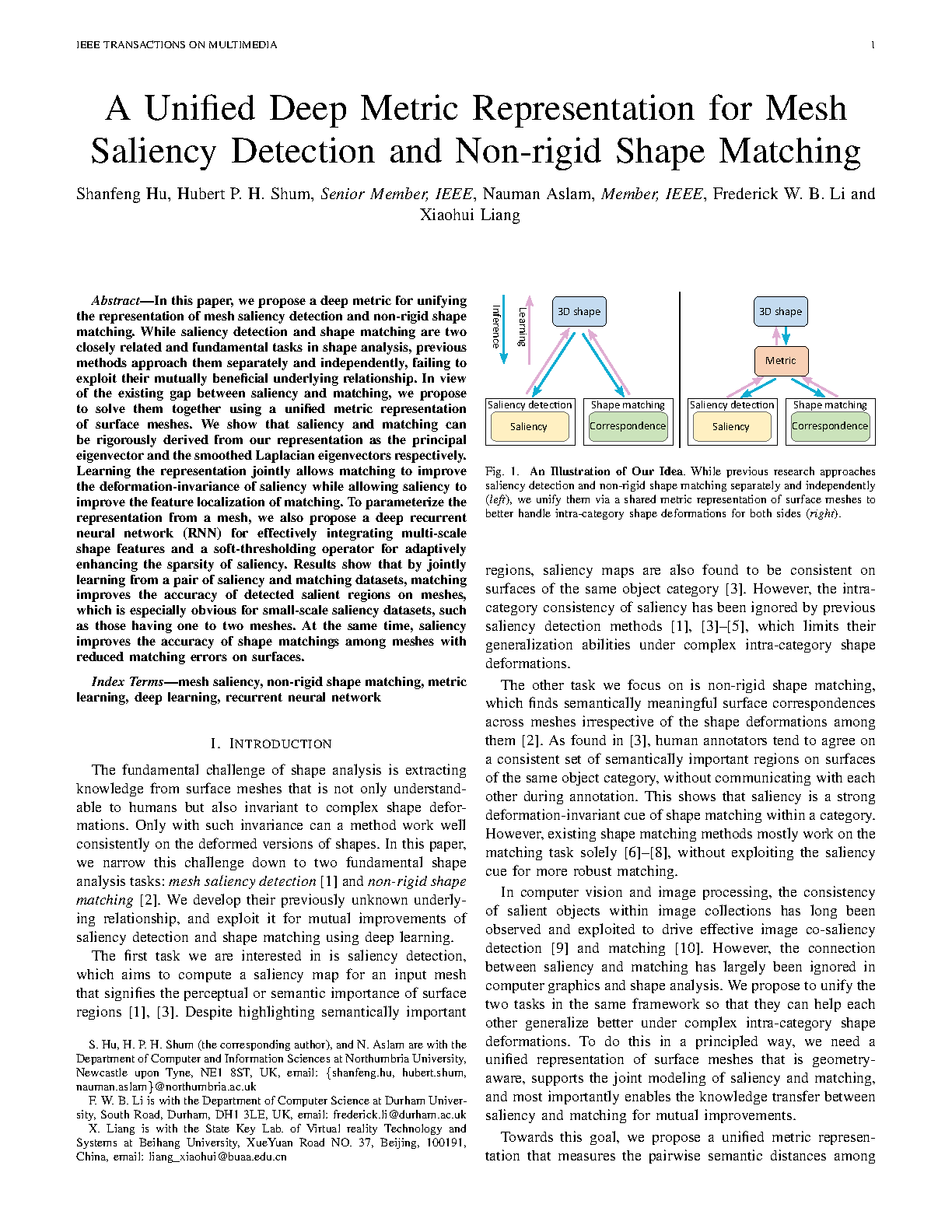

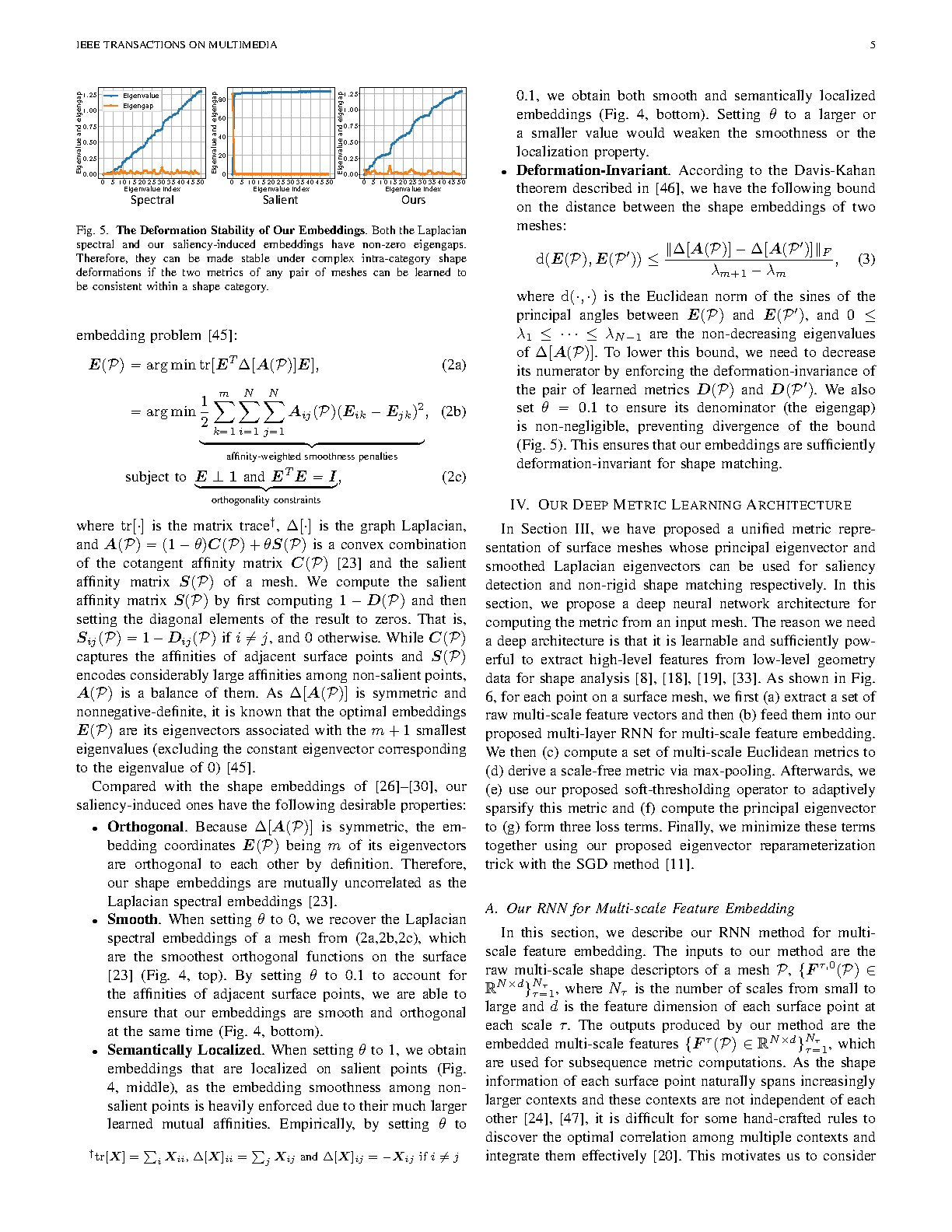

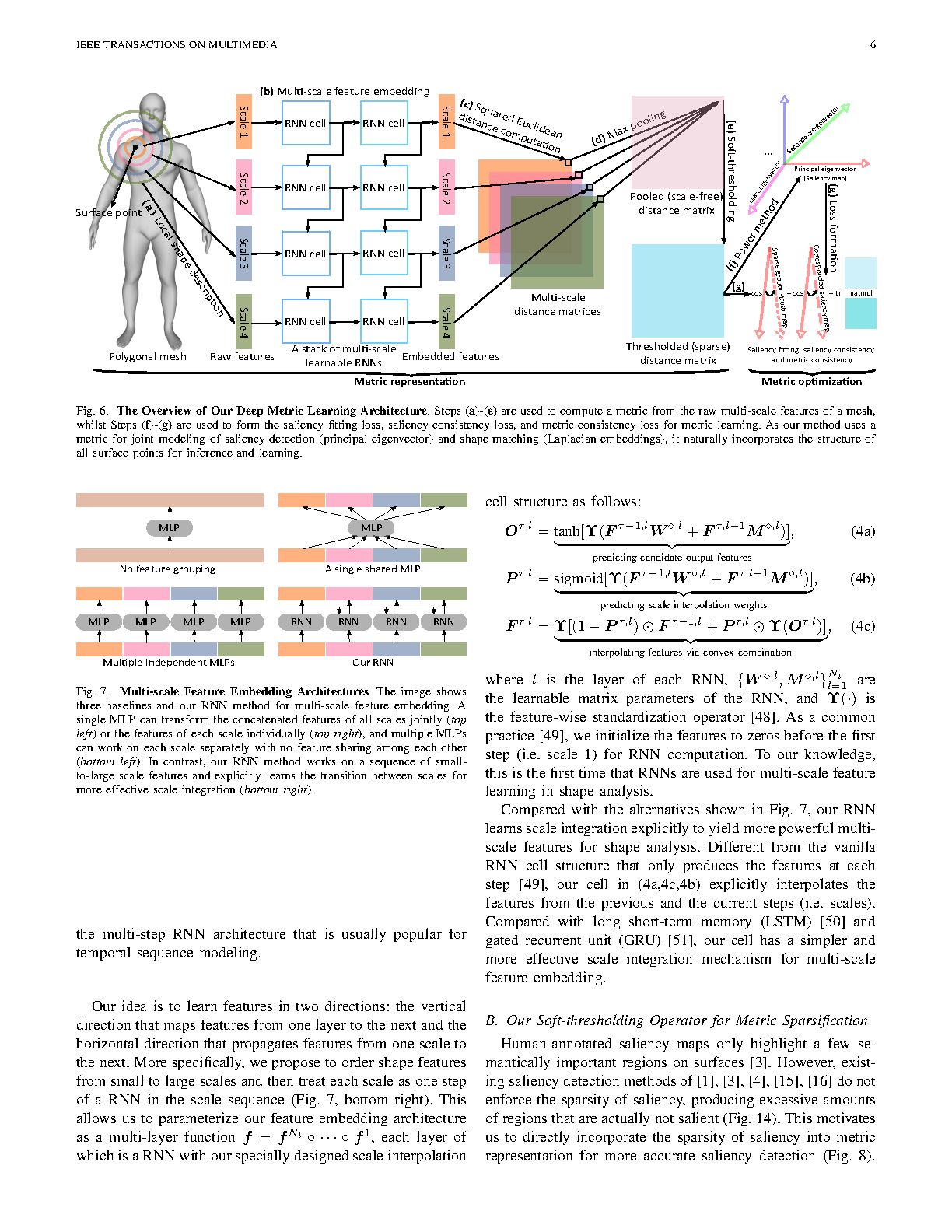

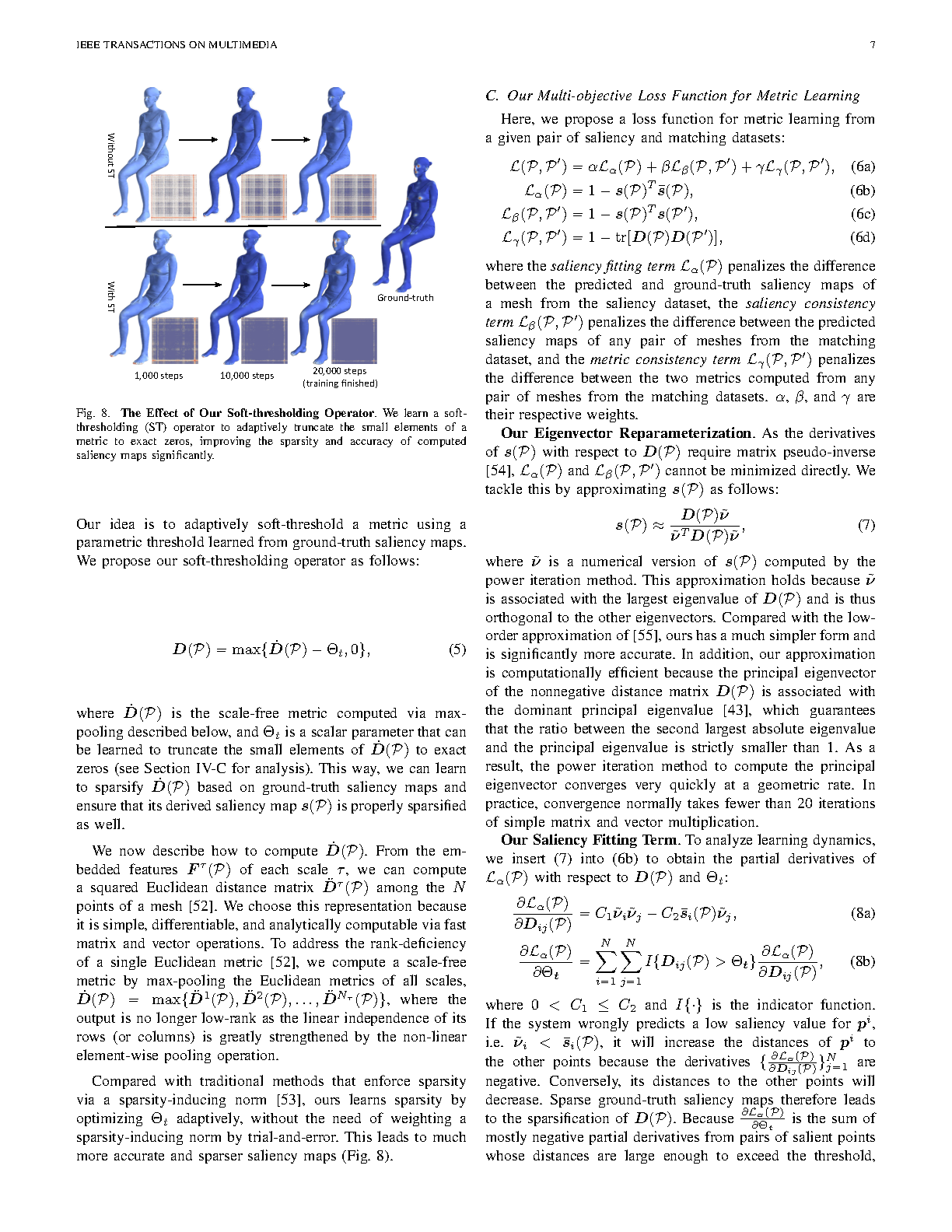

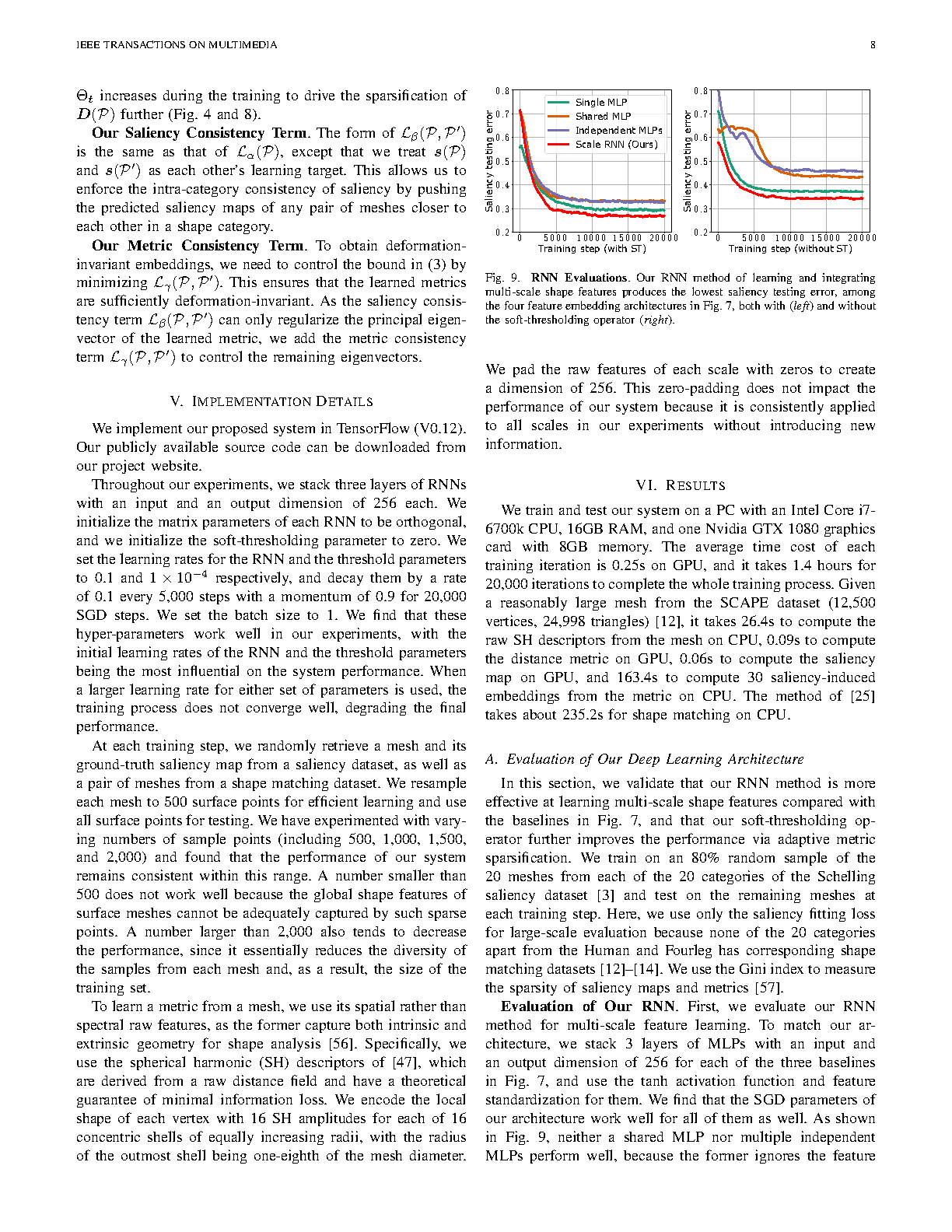

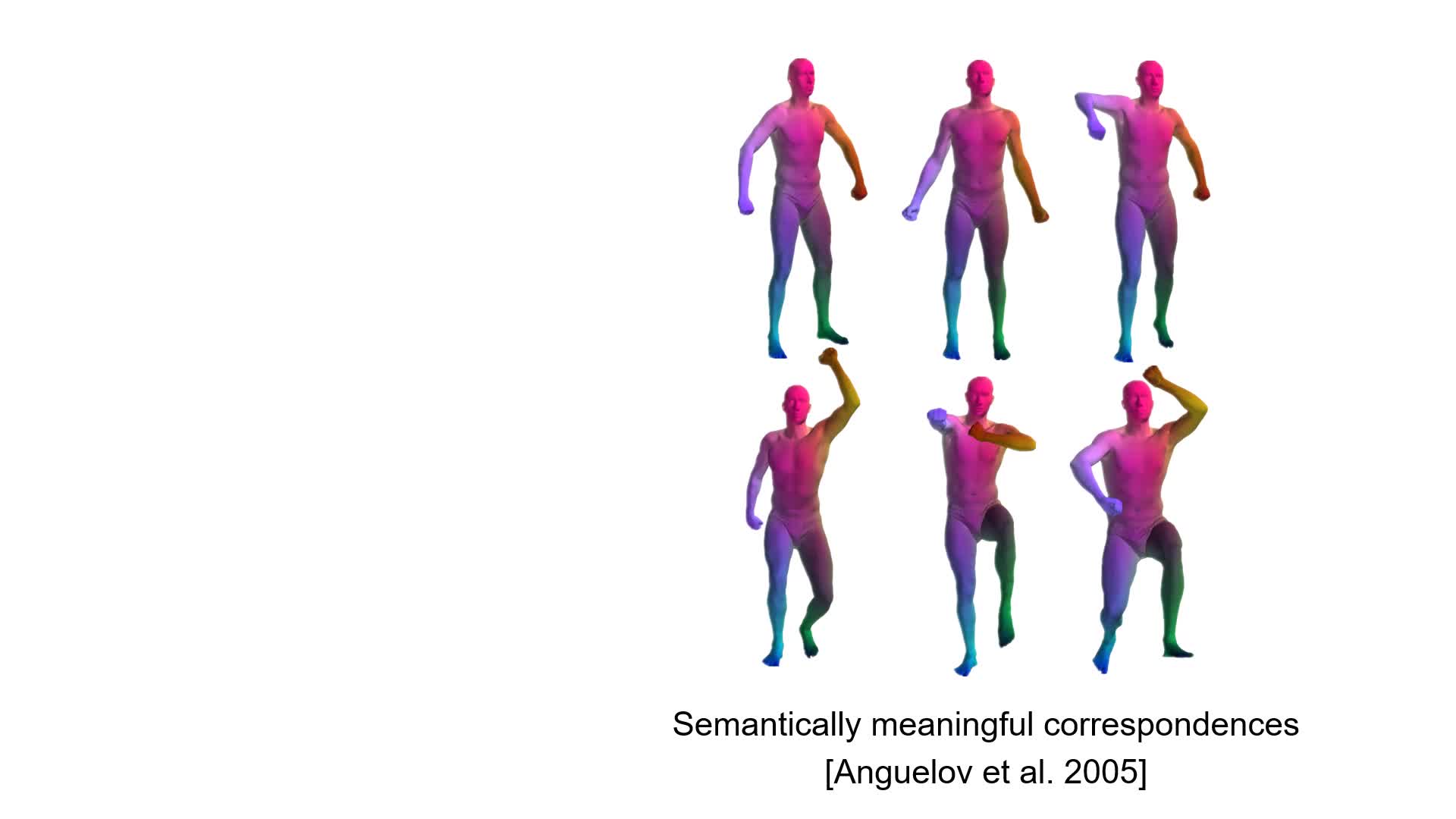

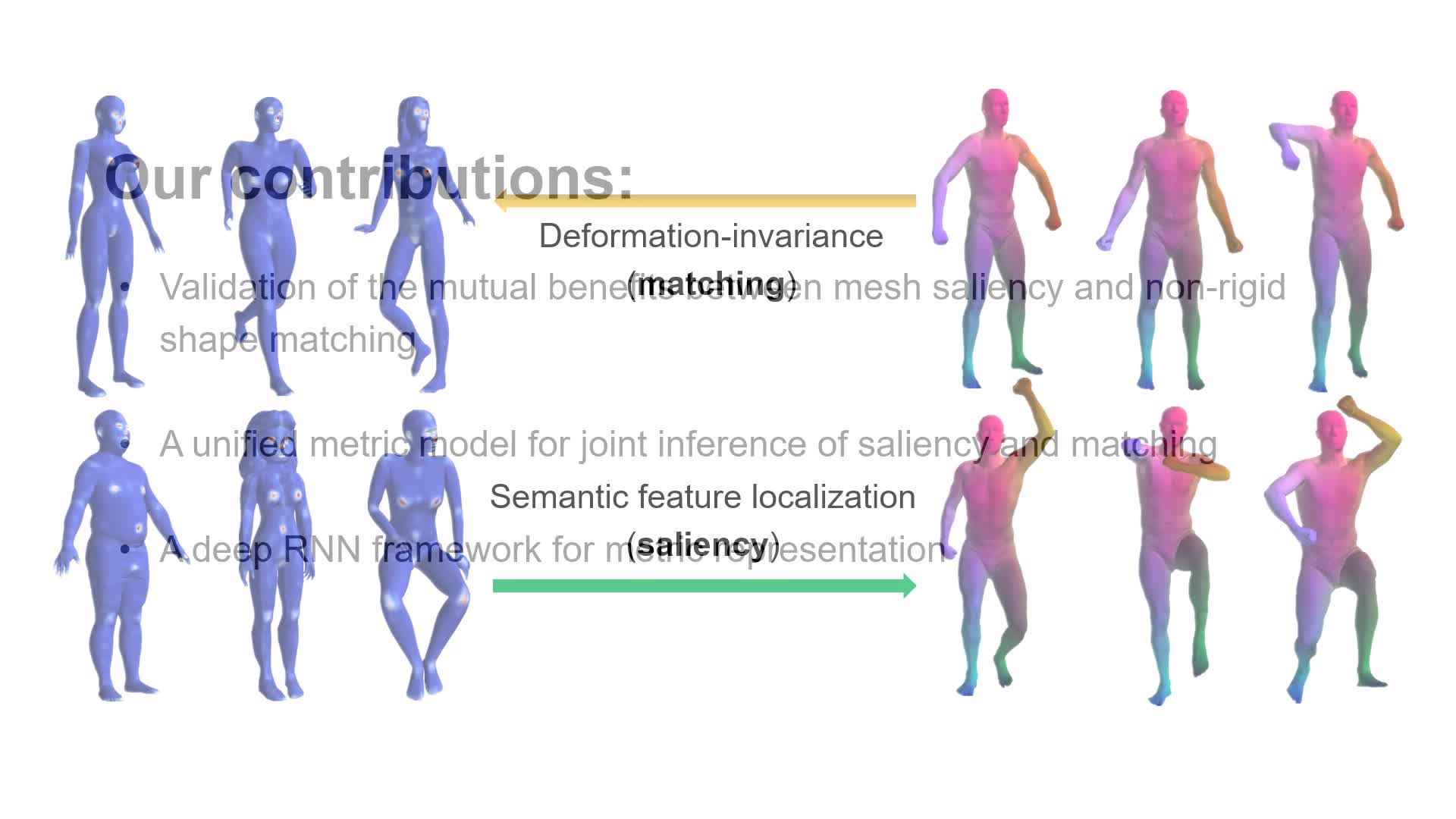

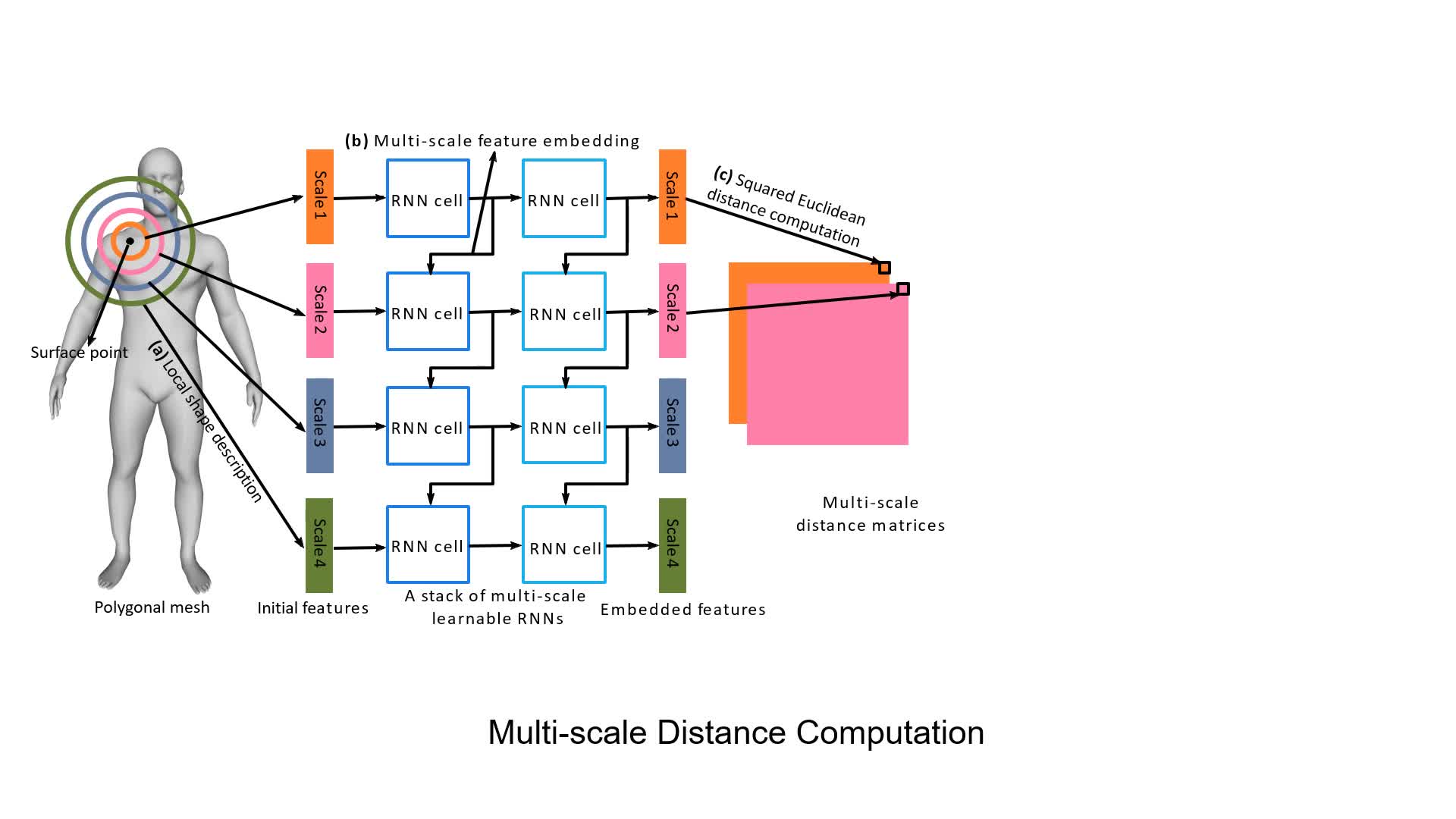

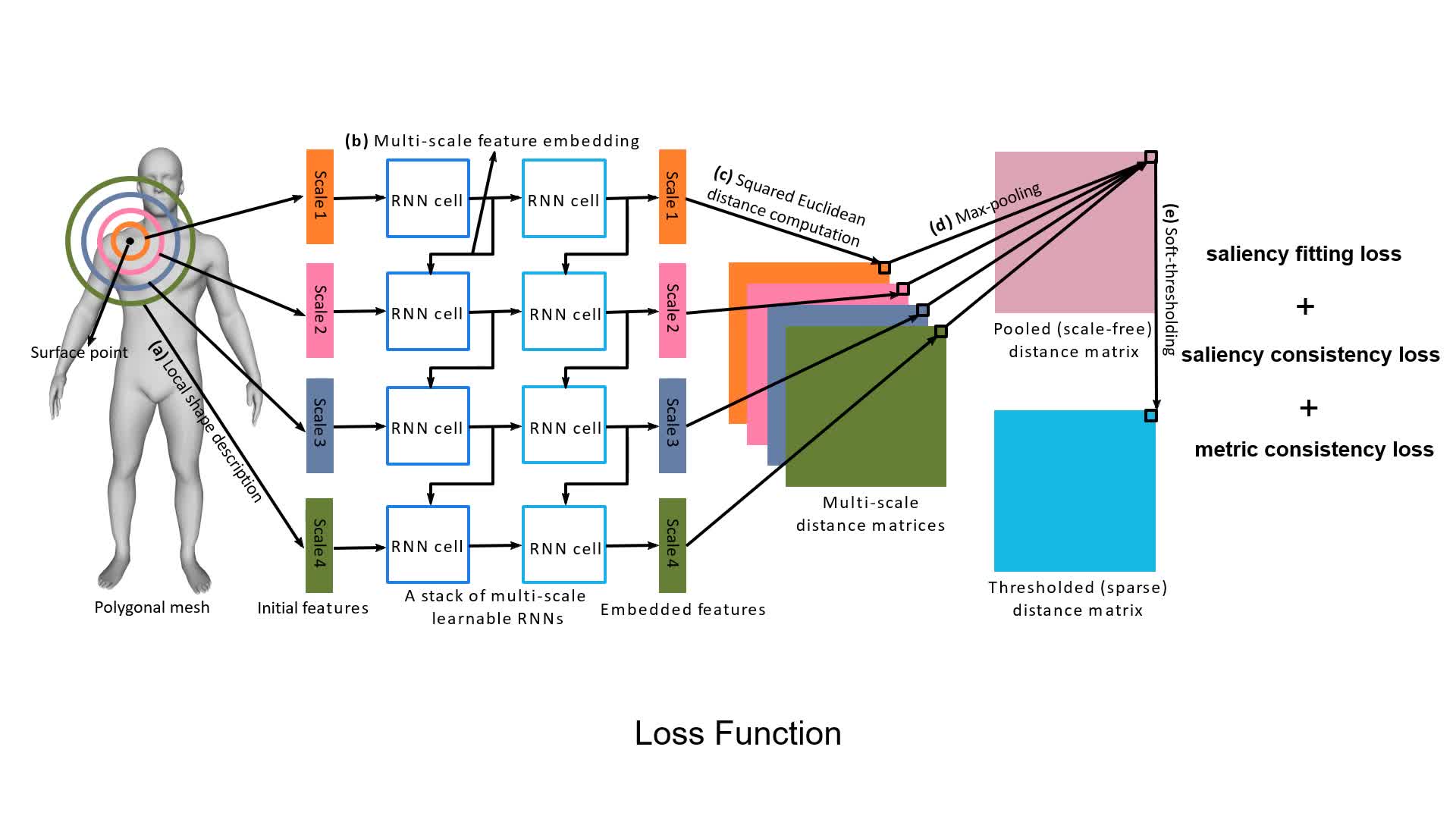

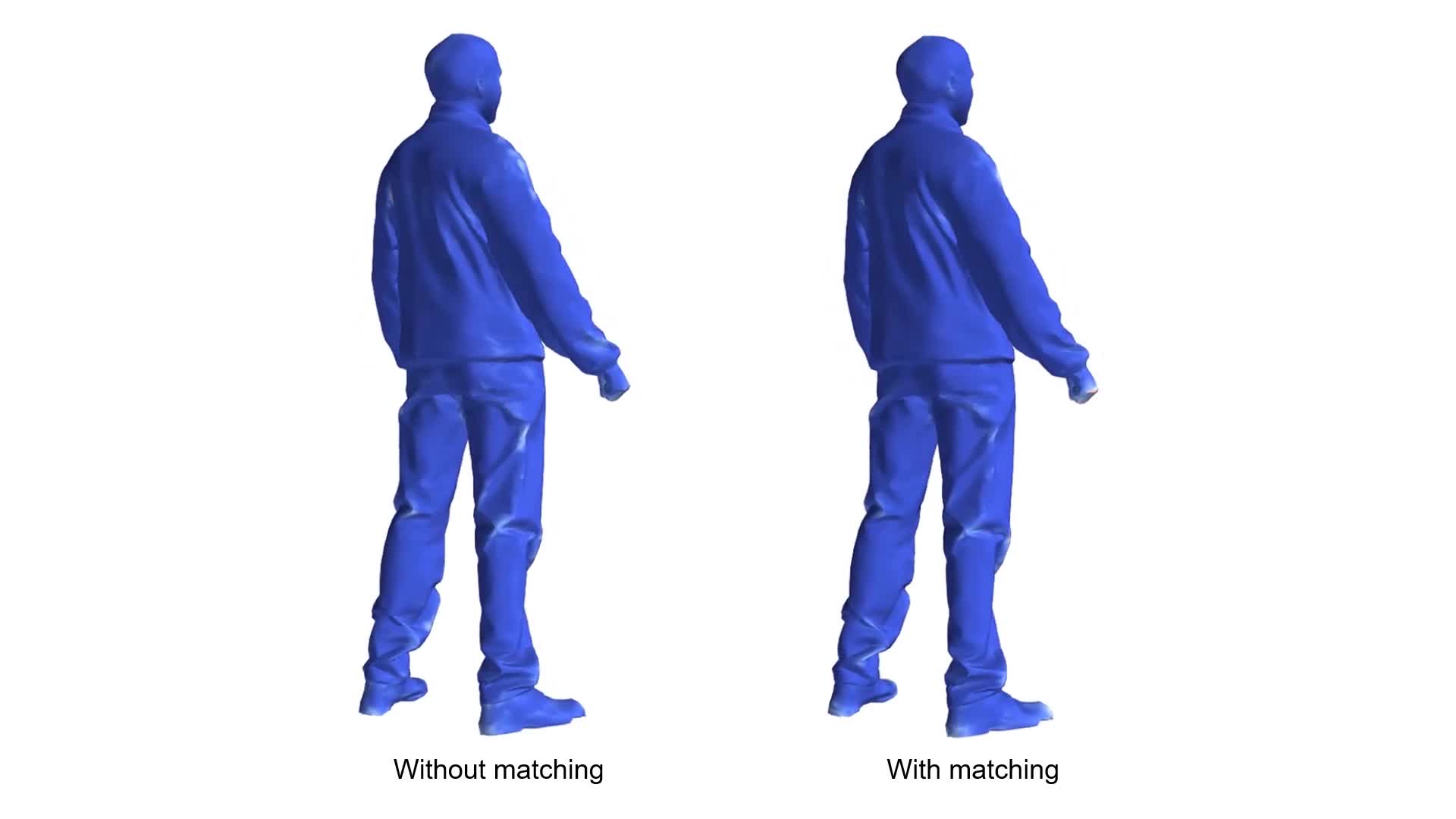

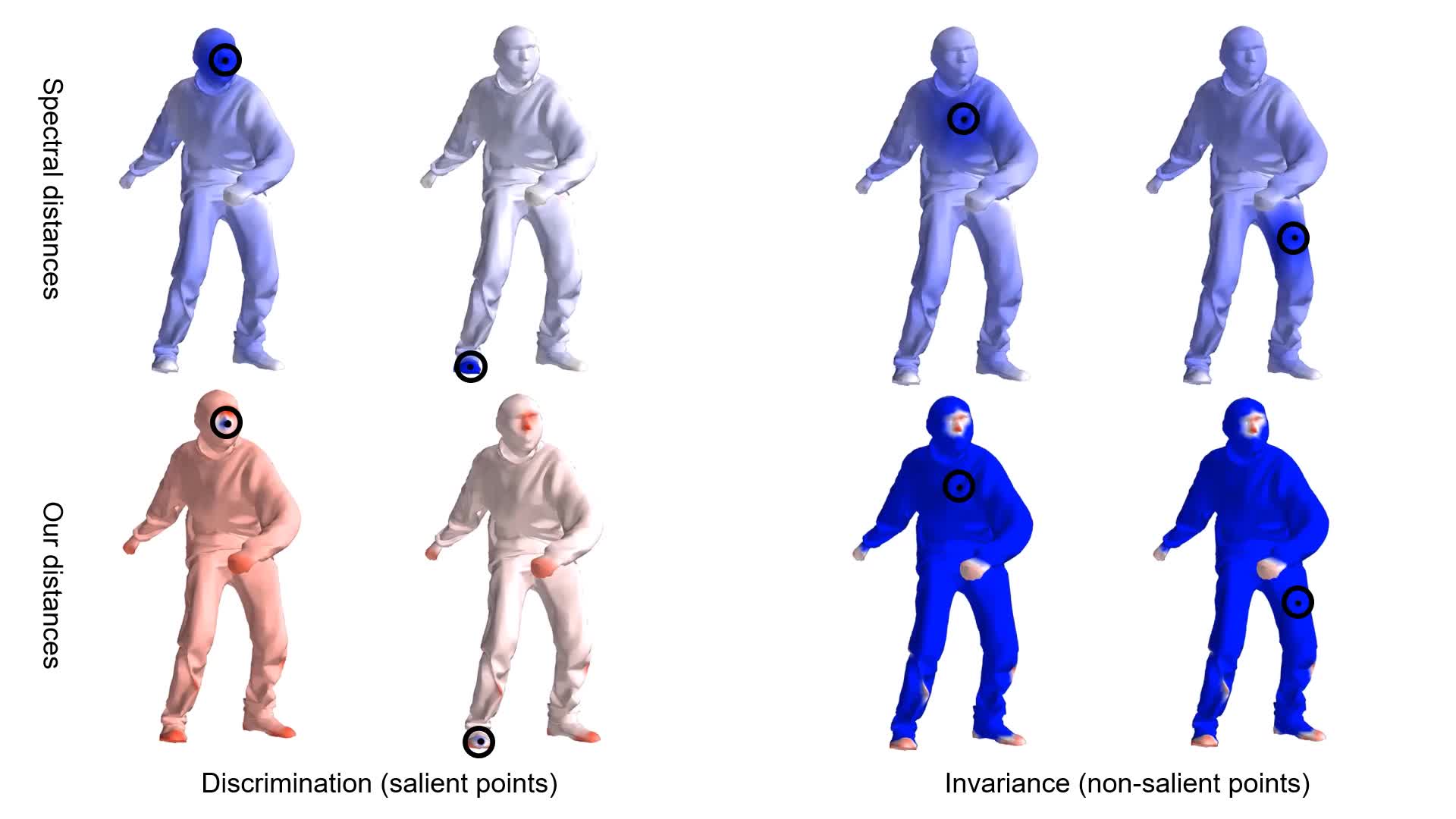

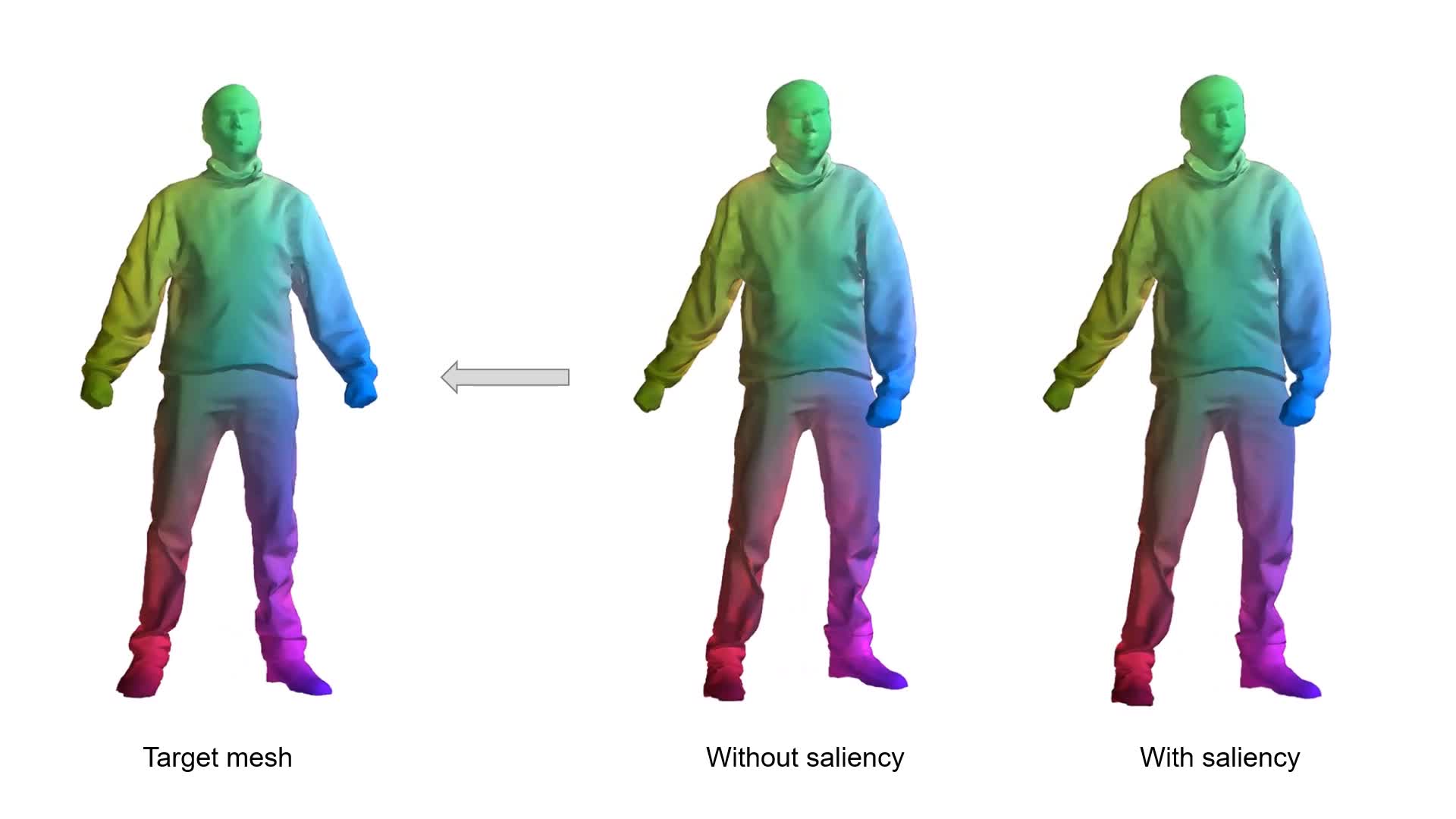

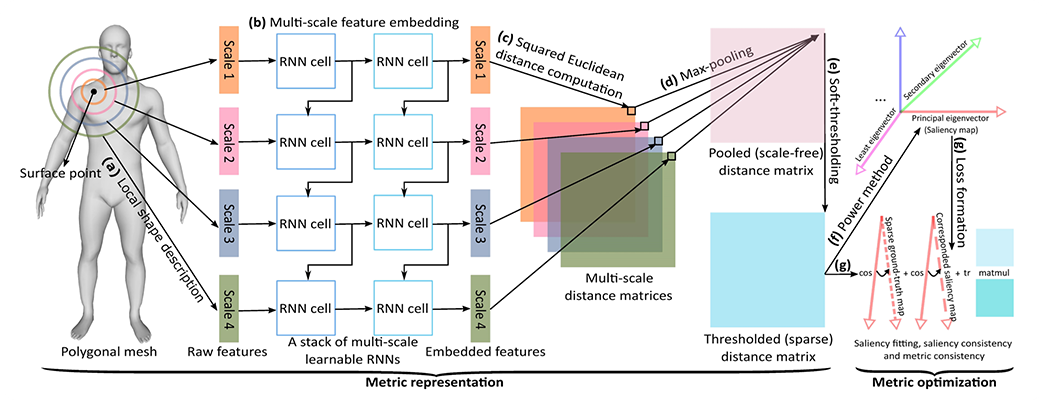

In this paper, we propose a deep metric for unifying the representation of mesh saliency detection and non-rigid shape matching. Although saliency detection and shape matching are two closely related and fundamental tasks in shape analysis, previous methods approach them independently and so fail to exploit their mutually beneficial relationship. To close this gap, we solve the two tasks together using a unified metric representation of surface meshes. We show that saliency and matching can be rigorously derived from our representation as the principal eigenvector and the smoothed Laplacian eigenvectors, respectively. Learning the representation jointly allows matching to improve the deformation-invariance of saliency, while saliency improves the feature localization of matching. To parameterize the representation from a mesh, we also propose a deep recurrent neural network (RNN) for effectively integrating multi-scale shape features and a soft-thresholding operator for adaptively enhancing the sparsity of saliency. Results show that, when learning jointly from a pair of saliency and matching datasets, matching improves the accuracy of the salient regions detected on meshes. The improvement is most pronounced for small-scale saliency datasets, such as those containing only one or two meshes. At the same time, saliency improves shape matching between meshes, reducing matching errors on surfaces.
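To make two of the ingredients named in the abstract concrete, the following is a minimal Python sketch, not the authors' implementation. It assumes the learned metric can be stood in for by a small symmetric, non-negative vertex affinity matrix (the values in `affinity` are hypothetical), reads saliency off as the principal eigenvector via power iteration, and then sparsifies it with the standard shrinkage form of soft-thresholding, which may differ from the operator learned in the paper. The function names and the threshold `tau` are illustrative assumptions.

```python
import math


def soft_threshold(values, tau):
    """Shrink each value toward zero by tau; entries with |v| <= tau become zero."""
    return [math.copysign(max(abs(v) - tau, 0.0), v) for v in values]


def principal_eigenvector(matrix, iterations=200):
    """Power iteration for the dominant eigenvector of a symmetric, non-negative matrix."""
    n = len(matrix)
    vec = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        # Multiply by the matrix, then renormalize to unit length.
        nxt = [sum(row[j] * vec[j] for j in range(n)) for row in matrix]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    return vec


# Toy 4-vertex affinity matrix (hypothetical values, not taken from the paper).
# Vertices 0 and 1 are strongly related; vertices 2 and 3 are only weakly connected.
affinity = [
    [1.0, 0.8, 0.1, 0.0],
    [0.8, 1.0, 0.1, 0.0],
    [0.1, 0.1, 1.0, 0.1],
    [0.0, 0.0, 0.1, 1.0],
]

# Per-vertex saliency as the principal eigenvector of the stand-in metric.
saliency = principal_eigenvector(affinity)

# Soft-thresholding zeroes out weakly salient vertices, leaving a sparse map.
sparse_saliency = soft_threshold(saliency, tau=0.2)
```

In this toy setting the two strongly related vertices receive the largest saliency and survive the threshold, while the weakly connected vertices are set to zero. In the paper the representation is produced by a network from mesh features rather than written by hand, so the sketch only illustrates the eigenvector and sparsity steps.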

Supporting Grants

Royal Society International Exchanges (Ref: IES\R2\181024): £12,000, Principal Investigator

Received from The Royal Society, UK, 2019-2022

Erasmus Mundus Action 2 Programme (Ref: 2014-0861/001-001): €3.03 million, Northumbria University Funding Management Leader (PI: Prof. Nauman Aslam)

Received from Erasmus Mundus, 2015-2018