Multimodal Models for Skin Cancer Classification using Clinical Free Text and Dermatoscopic Images

Matthew Watson, Thomas Winterbottom, Thomas Hudson, Benedict Jones, Hubert P. H. Shum, Amir Atapour-Abarghouei, Toby P. Breckon, James Harmsworth King and Noura Al Moubayed

Communications Medicine, 2026

Abstract

Background: Skin cancer is one of the most prevalent cancers globally, with early detection critical to ensure reduced mortality risk. To aid early detection, machine learning (ML) skin cancer detection models have been proposed, currently with a focus on dermatoscopic imaging only. However, freetext may provide extra diagnostic information that is not present in images alone.

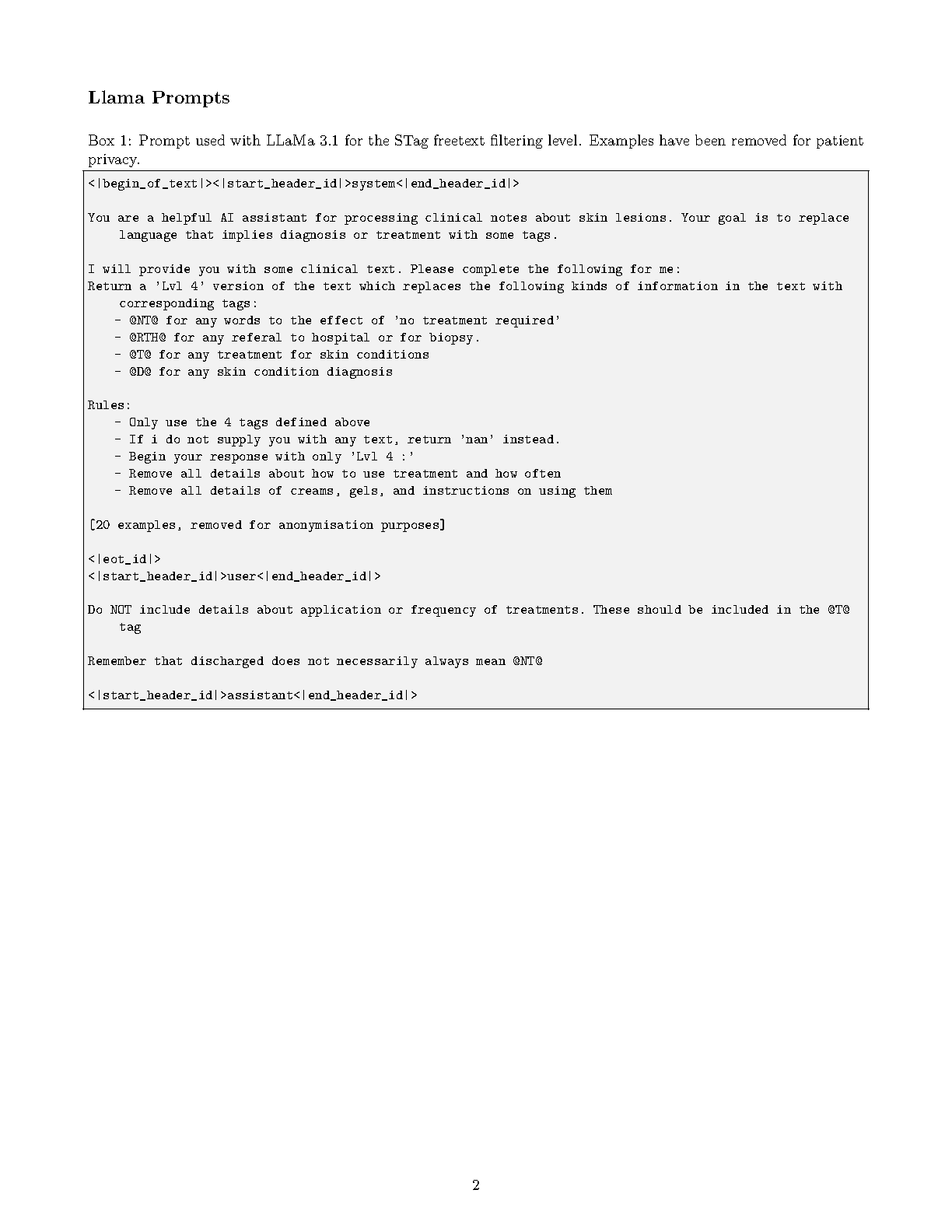

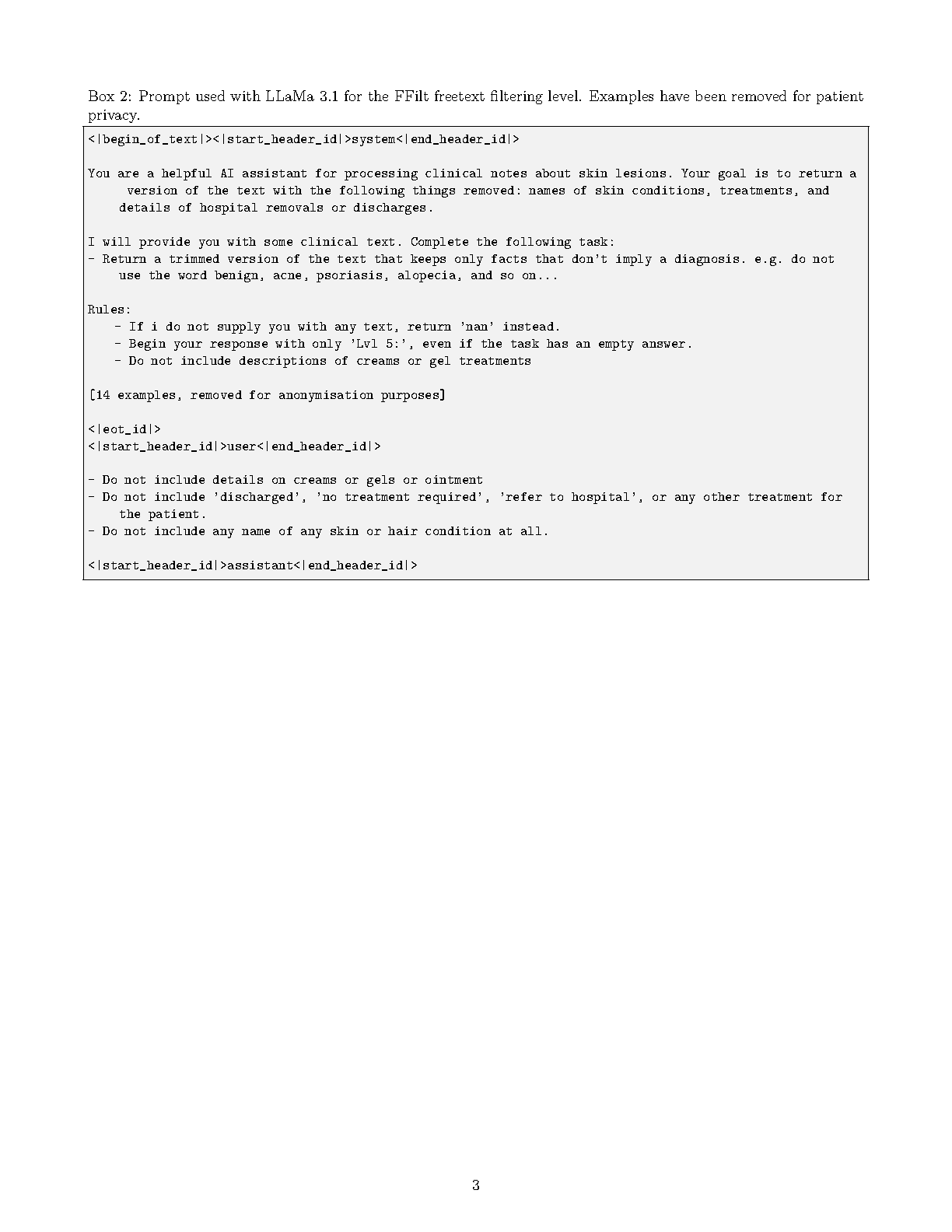

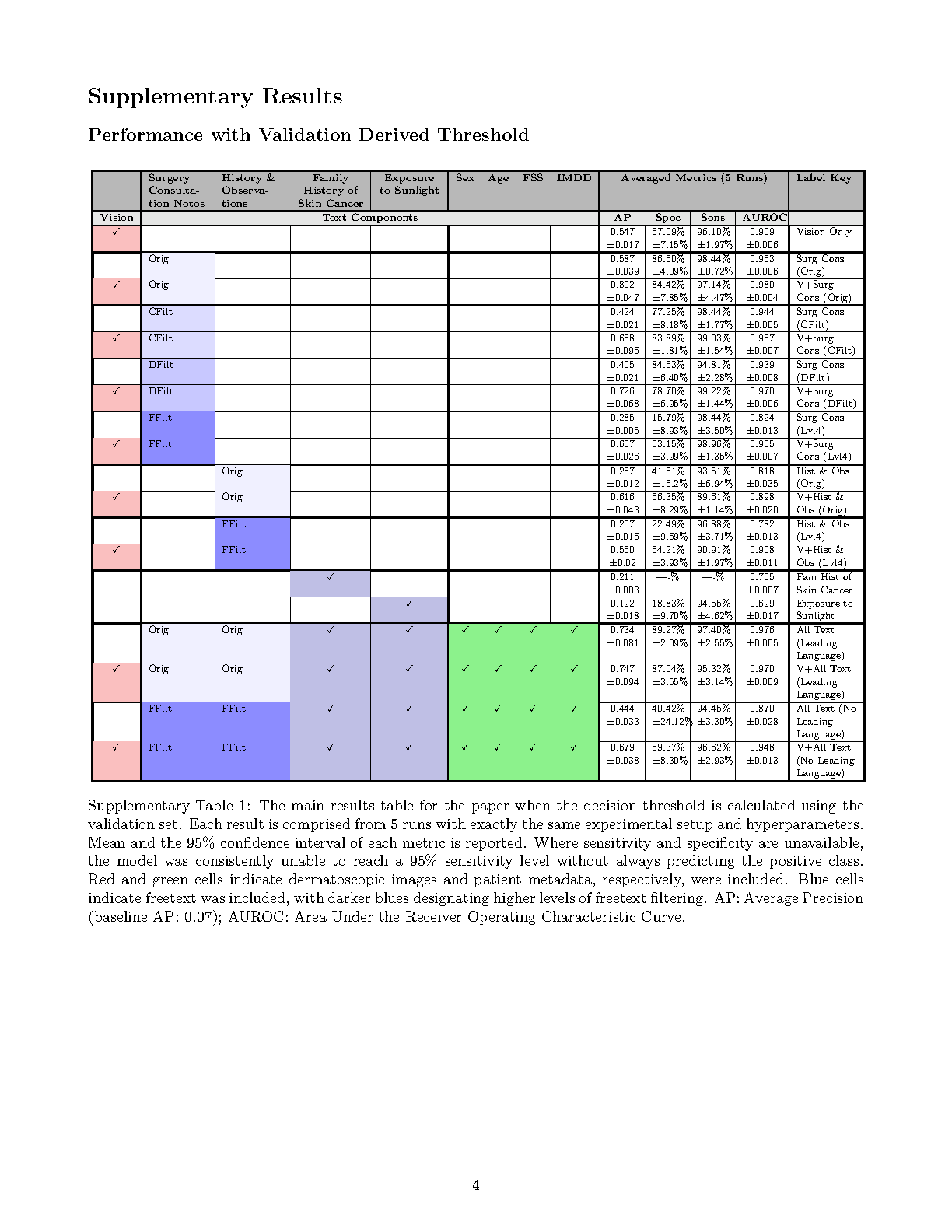

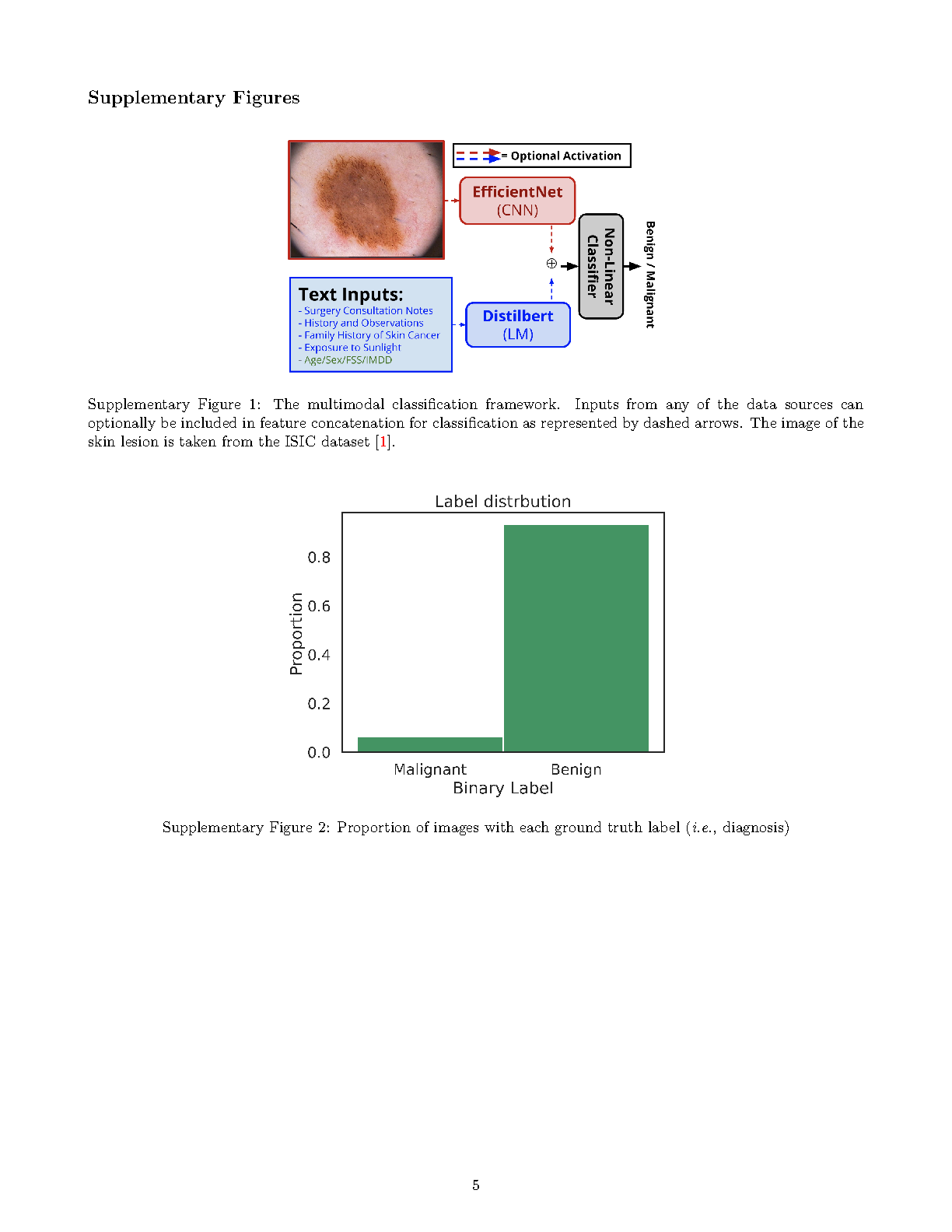

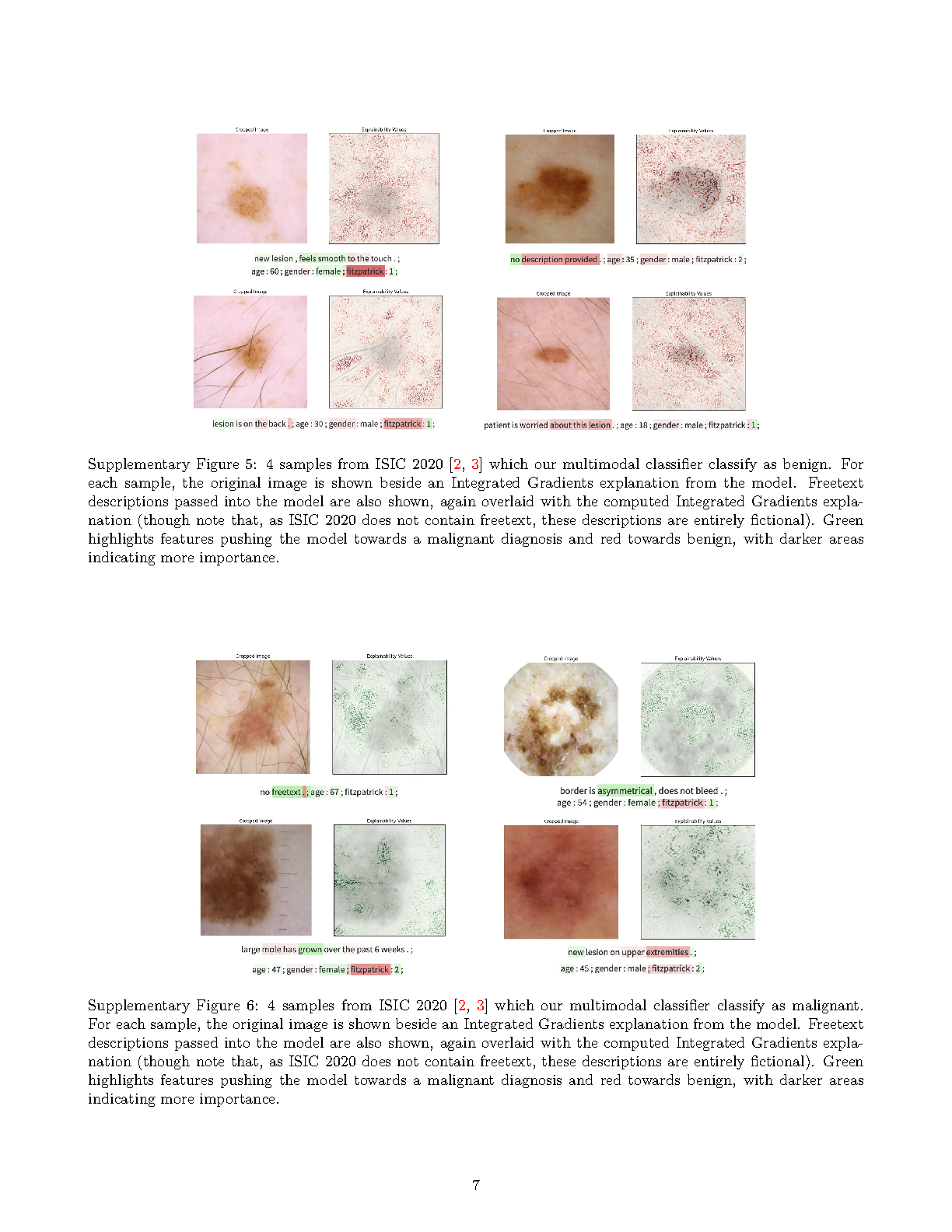

Methods: We constructed a multimodal dataset comprising 5,481 dermatoscopic images from 4,538 patients, including patient metadata and clinical notes, with binary labels (benign vs. malignant, 7% malignant). To assess and mitigate bias from leading language, we developed a clinical text preprocessing pipeline combining regular expressions and large language models, enabling multiple levels of filtering. We train multimodal ML models on this dataset to explore the effect of freetext on model performance.

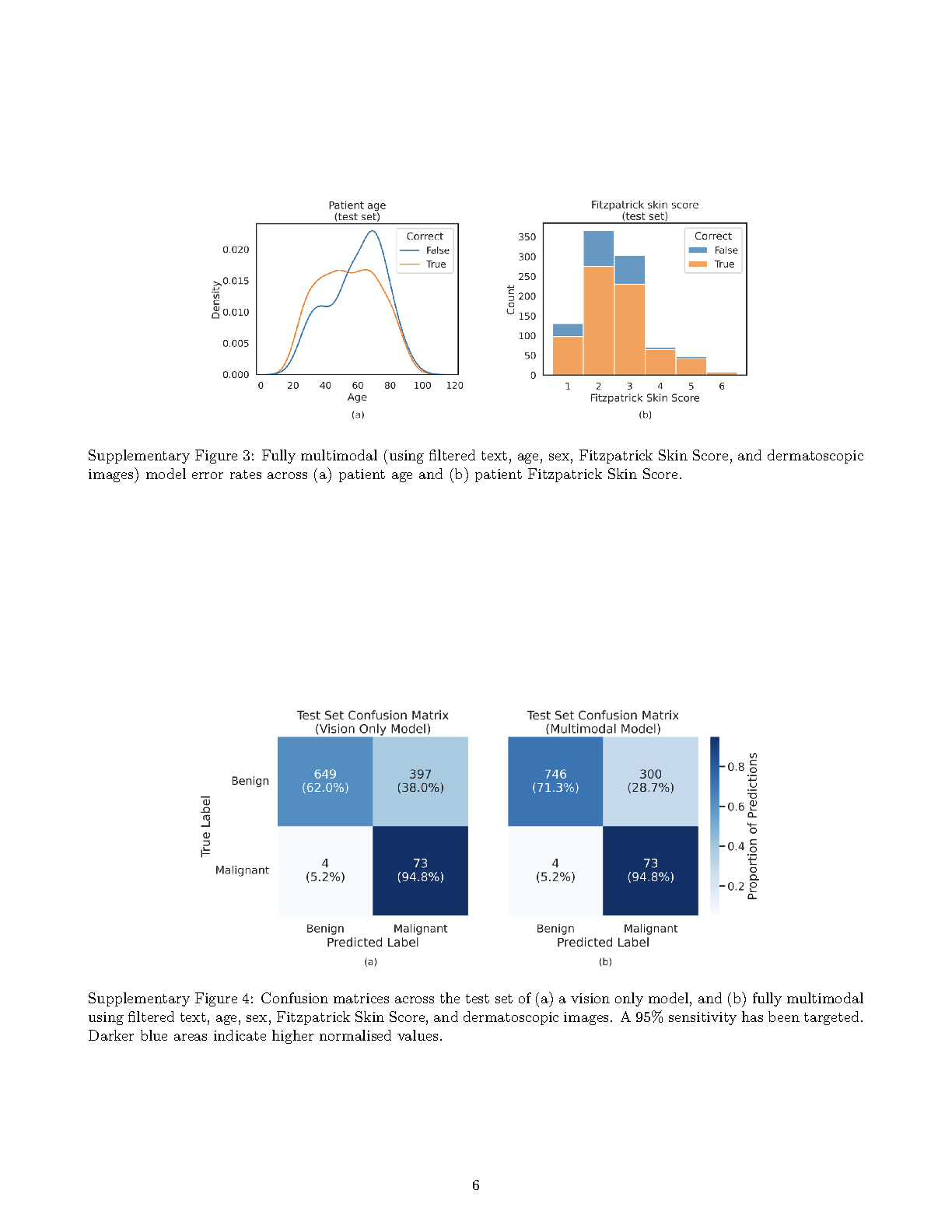

Results: Our results show that incorporating unfiltered text significantly improves classification performance (0.970 AUROC) compared to visual data alone (0.909 AUROC); even with leading language removed, performance gains persist (0.948 AUROC).

Conclusions: This work benchmarks clinical freetext inclusion in skin lesion classification, demonstrating that clinical text contributes predictive value beyond that available in images alone. The model’s high performance on unfiltered clinical text highlights the high levels of bias, and possible shortcutting, present in this text which may make it unsuitable for inclusion in some ML models. By systematically filtering clinical notes via our proposed technique, we show that multimodal models retain improved accuracy while reducing bias. These results provide practical guidance for integrating clinical text into real-world skin cancer detection systems and establish a foundation for future multimodal research in dermatology.

Cite This Research

Supporting Grants

Knowledge Transfer Partnership (Ref: 13457): £143,393, Principal Investigator

Received from Innovate UK, UK, 2022-2024

Project Page