Self-Regulated Sample Diversity in Large Language Models

Mingyue Liu, Jonathan Frawley, Sarah Wyer, Hubert P. H. Shum, Sara L. Uckelman, Sue Black and Chris G. Willcocks

Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), 2024

H5-Index: 126, Core A Conference

Abstract

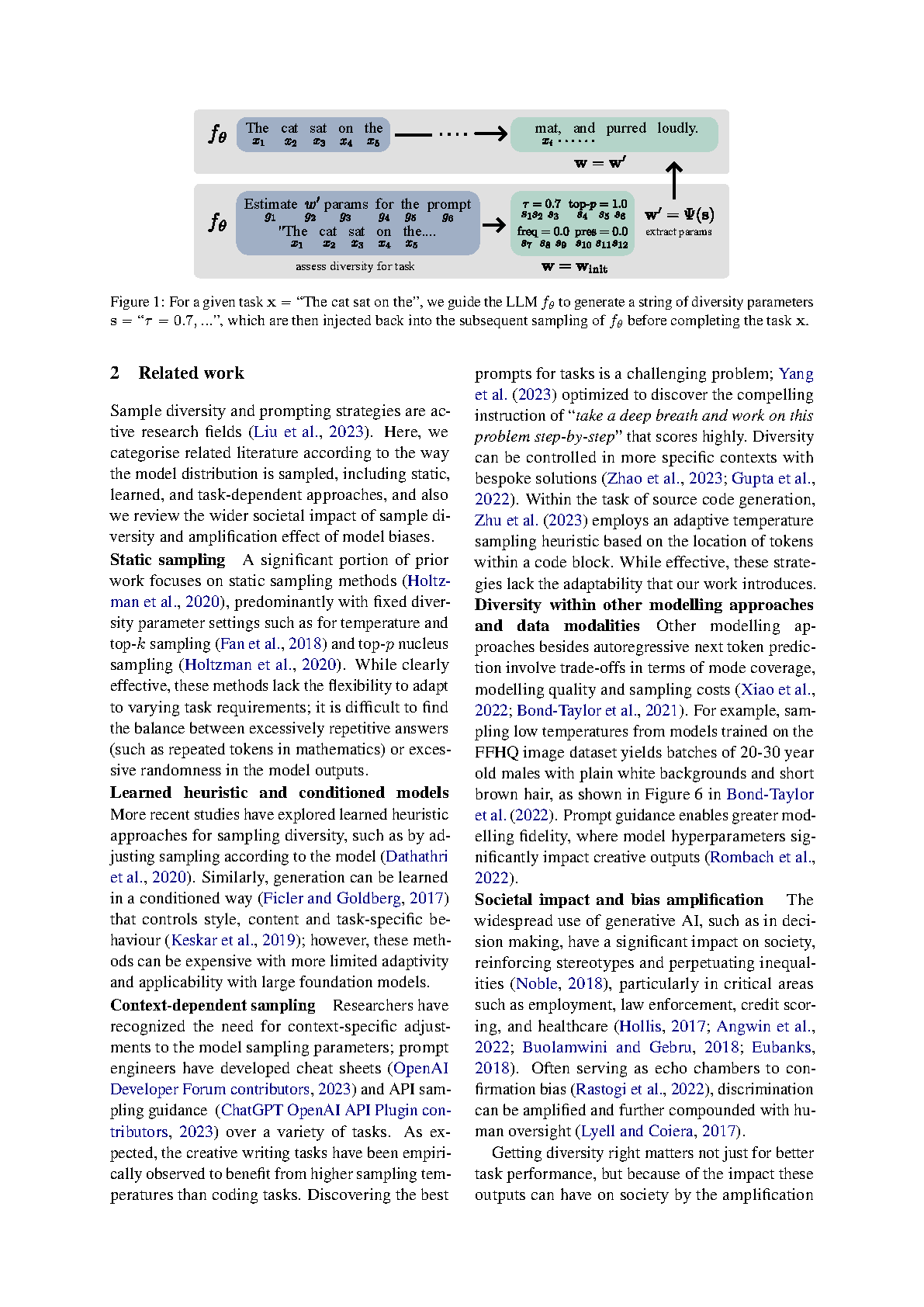

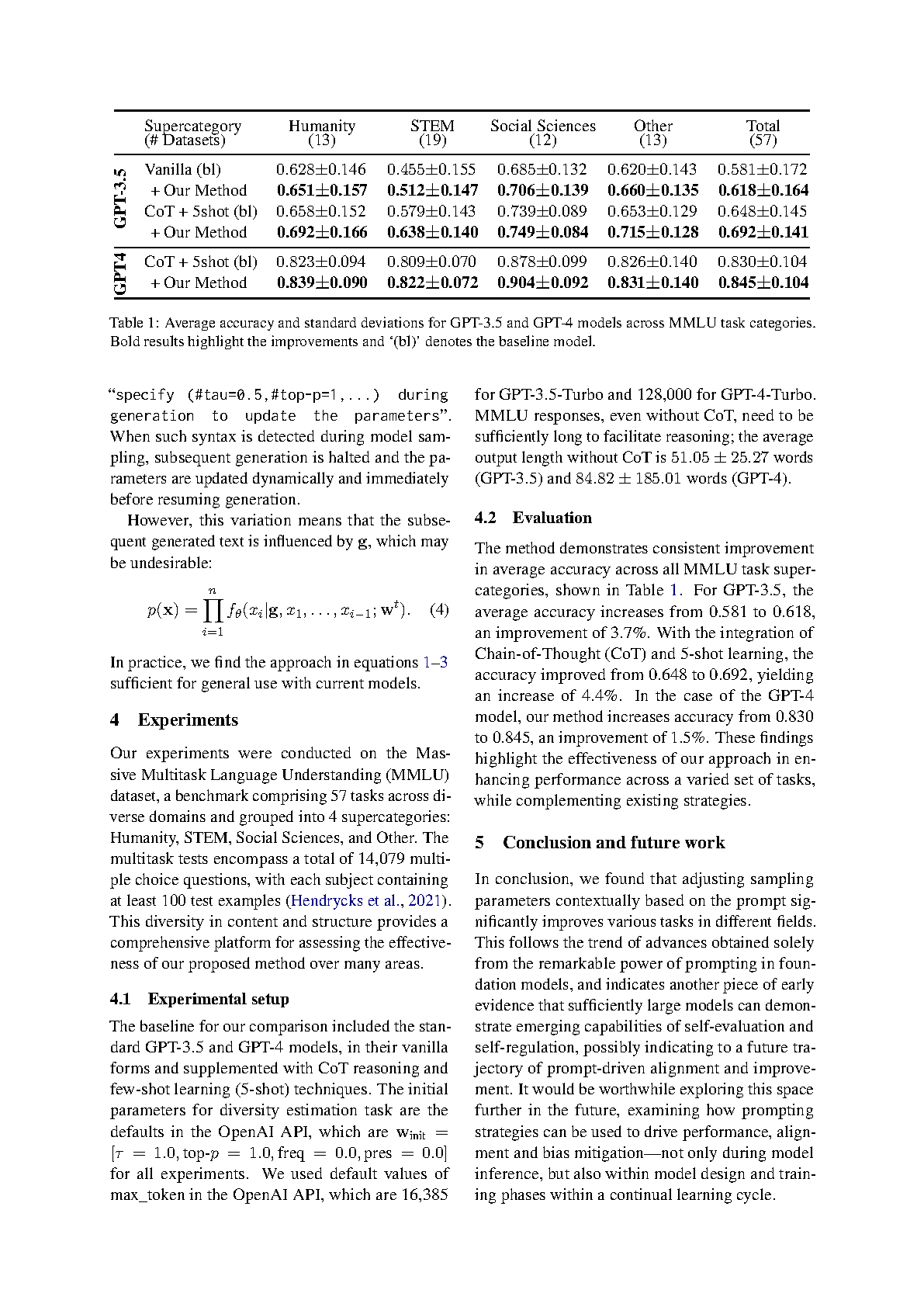

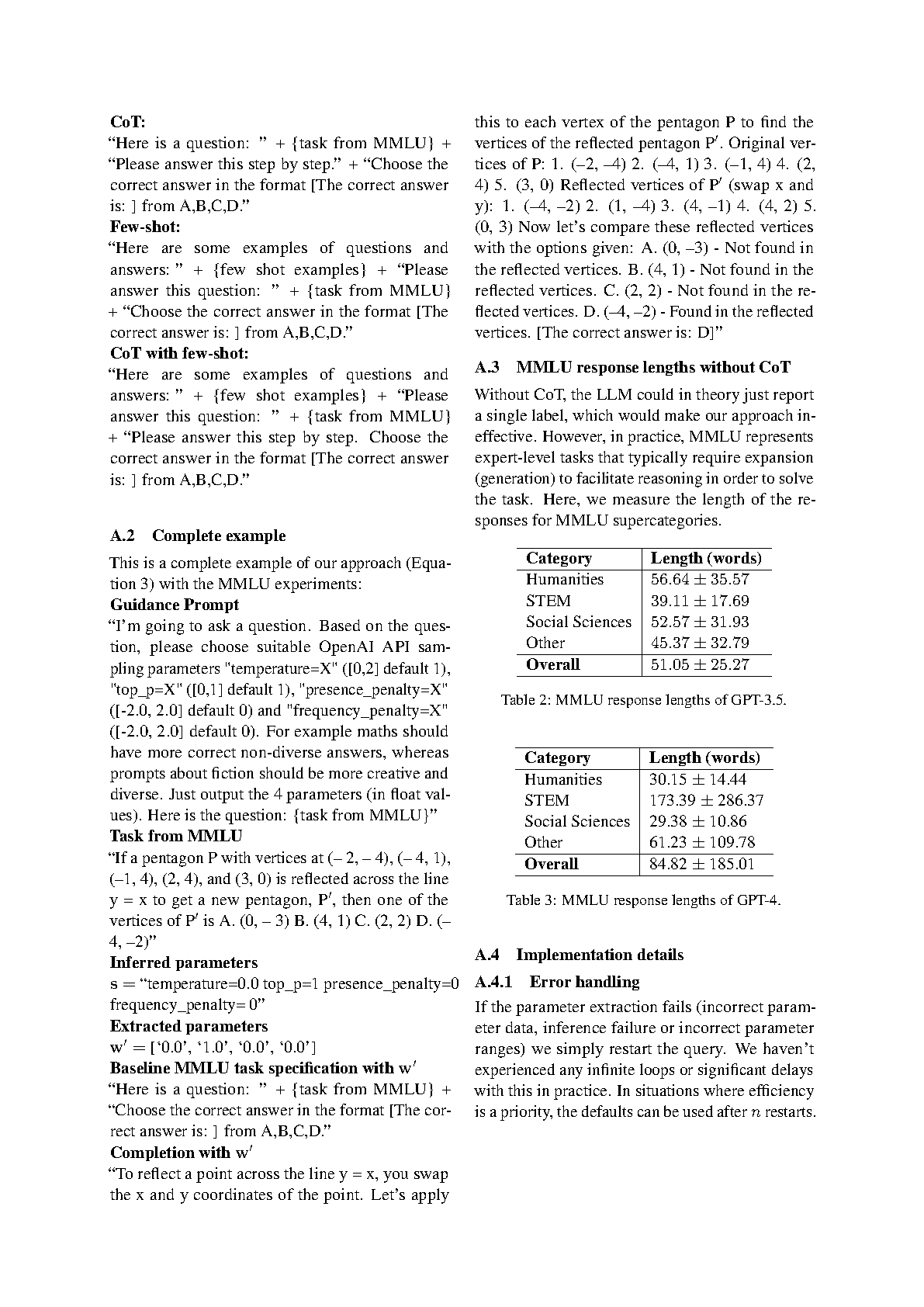

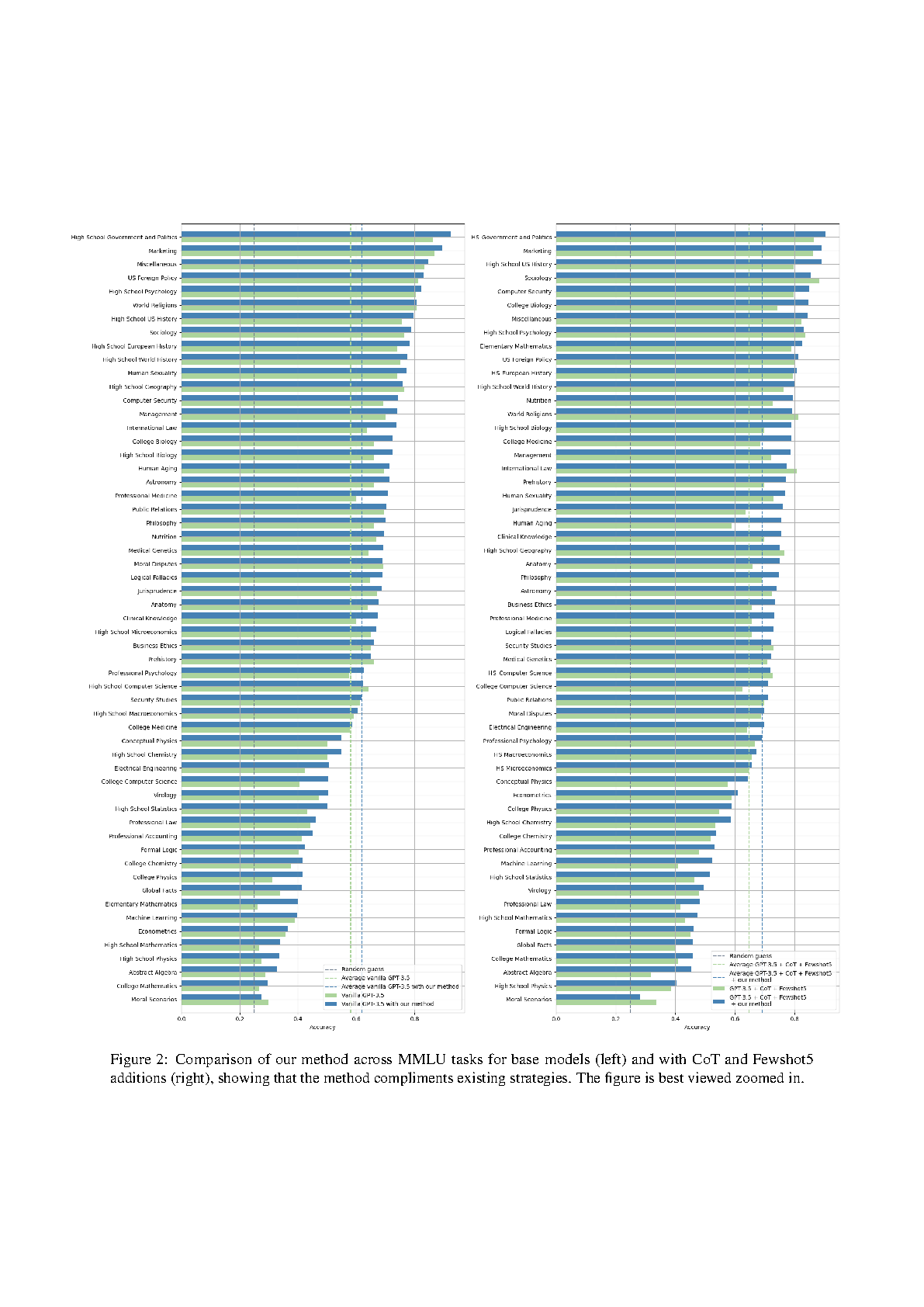

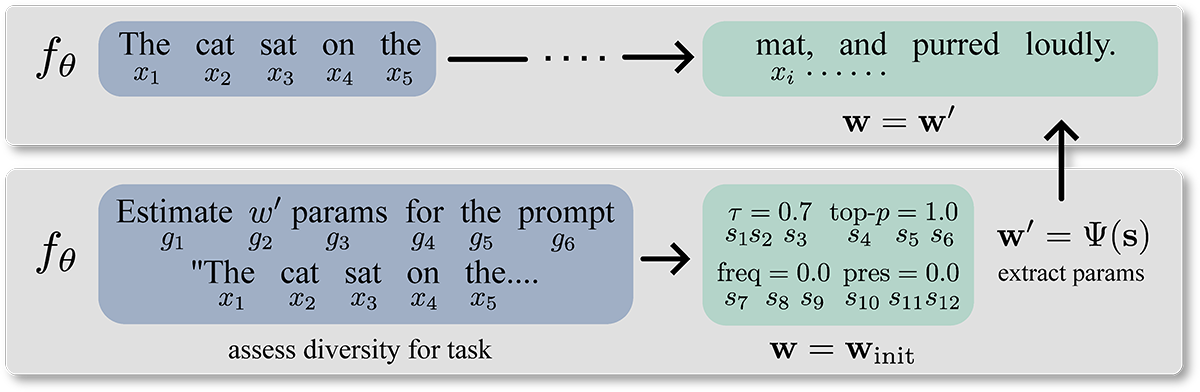

The appropriate sample diversity depends on the task: in mathematics, precision and determinism are paramount, while storytelling thrives on creativity and surprise. This paper presents a simple self-regulating approach that adjusts sample diversity inference parameters dynamically based on the input prompt, in contrast to existing methods that either require expensive and inflexible setups or keep these values static during inference. Capturing a broad spectrum of sample diversities can be formulated as a straightforward self-supervised inference task, which we find significantly improves response quality across tasks without model retraining or fine-tuning. In particular, our method demonstrates significant improvement in all supercategories of the MMLU multitask benchmark (GPT-3.5: +4.4%, GPT-4: +1.5%), which covers a large variety of difficult tasks spanning STEM, the humanities and social sciences.
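The idea of letting the model regulate its own sample diversity can be illustrated with a minimal sketch. The abstract does not give the exact procedure, so everything here is an assumption made for illustration: the two-step flow (ask the model for a temperature, then answer with it), the wording of `RATING_TEMPLATE`, the restriction to a single temperature parameter in [0, 1], and the `LLM` callable interface are all hypothetical. `toy_llm` is a stand-in so the example runs without any real model.

```python
import re
from typing import Callable

# Hypothetical interface (an assumption): (prompt, temperature) -> completion.
LLM = Callable[[str, float], str]

# Illustrative wording only; not taken from the paper.
RATING_TEMPLATE = (
    "On a scale from 0.0 (fully deterministic) to 1.0 (highly creative), "
    "what sampling temperature suits the following task? "
    "Reply with a single number.\n\nTask: {prompt}"
)


def parse_temperature(reply: str, default: float = 0.7) -> float:
    """Extract the first number in the reply and clamp it to [0, 1]."""
    match = re.search(r"\d+(?:\.\d+)?", reply)
    if match is None:
        return default  # fall back when the model gives no usable number
    return min(1.0, max(0.0, float(match.group())))


def self_regulated_generate(llm: LLM, prompt: str) -> tuple[float, str]:
    """Let the model pick its own temperature, then answer with it."""
    # Step 1: query the model deterministically for a suitable temperature.
    reply = llm(RATING_TEMPLATE.format(prompt=prompt), 0.0)
    temperature = parse_temperature(reply)
    # Step 2: answer the original prompt at the self-chosen temperature.
    return temperature, llm(prompt, temperature)


def toy_llm(prompt: str, temperature: float) -> str:
    """Stand-in model for demonstration only; replace with a real client."""
    if prompt.startswith("On a scale"):
        return "0.1" if "integral" in prompt else "0.9"
    return f"[answer sampled at T={temperature}]"


if __name__ == "__main__":
    for task in ("Compute the integral of x^2.", "Write a story about a dragon."):
        print(task, "->", self_regulated_generate(toy_llm, task))
```

With a real model, `llm` would wrap whatever client is in use. No retraining or fine-tuning is involved; the only extra cost in this sketch is one additional inference call per prompt.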