Bi-Projection Based Foreground-Aware Omnidirectional Depth Prediction

Qi Feng, Hubert P. H. Shum and Shigeo Morishima

Proceedings of the 2021 Visual Computing (VC), 2021

Abstract

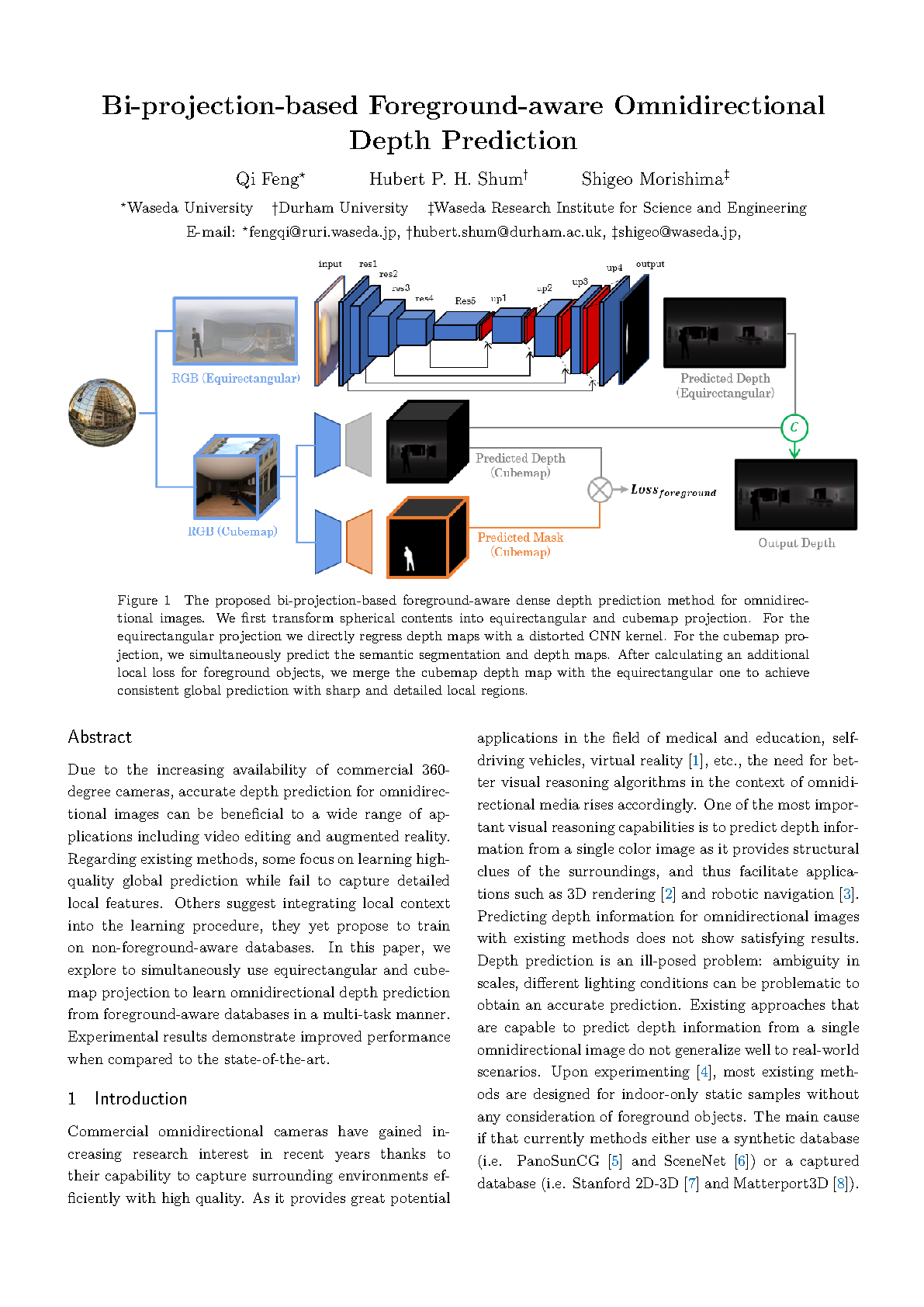

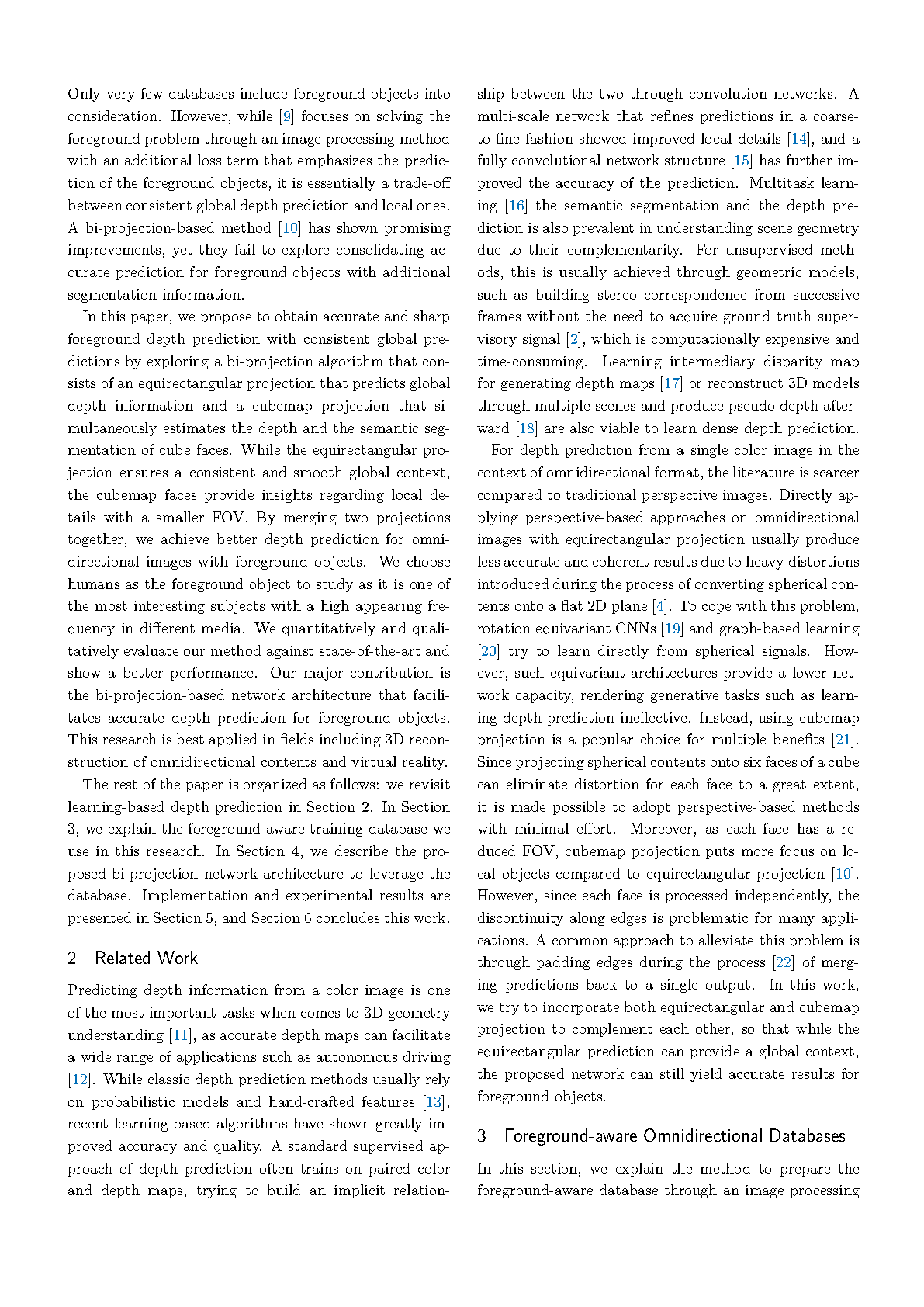

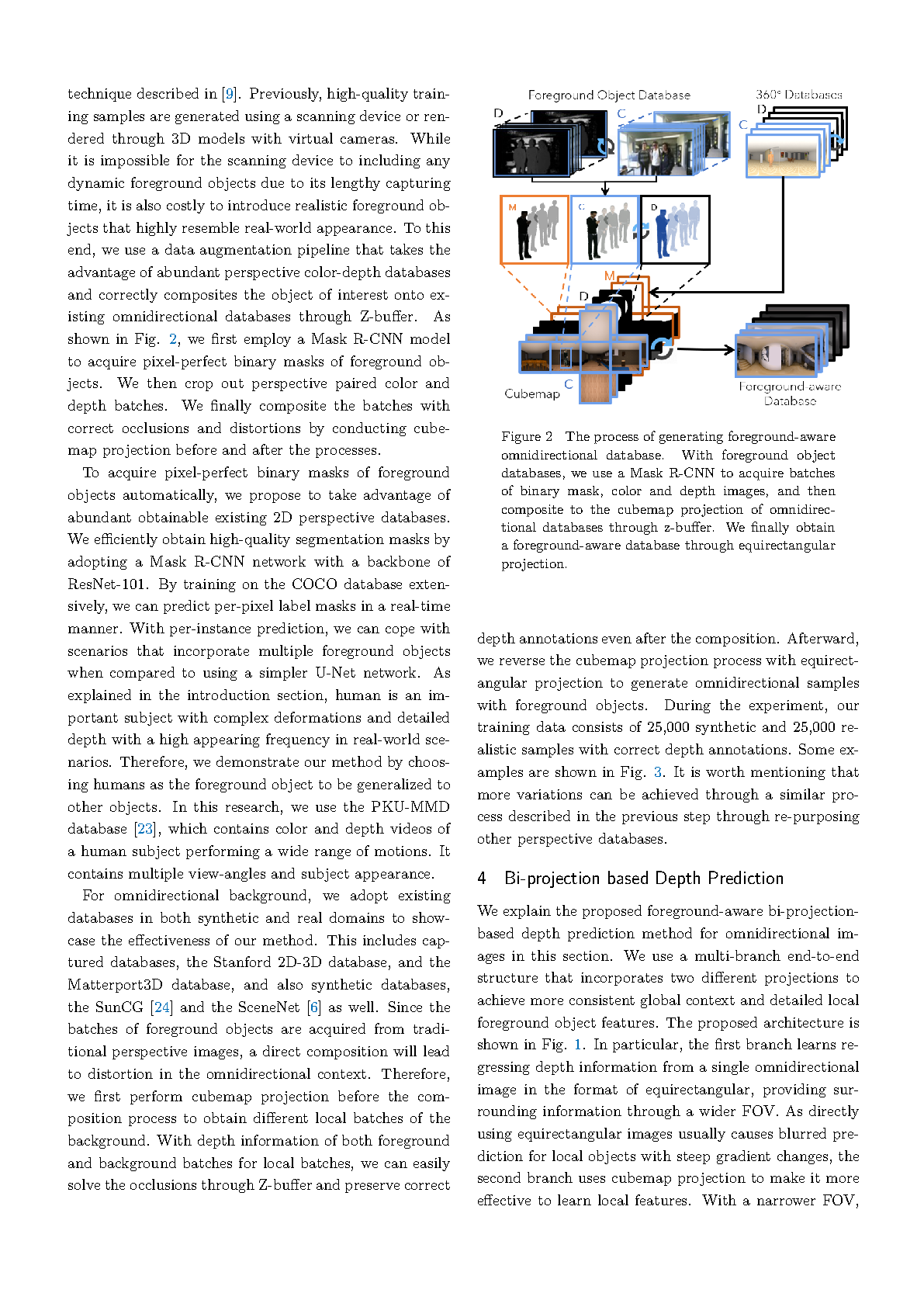

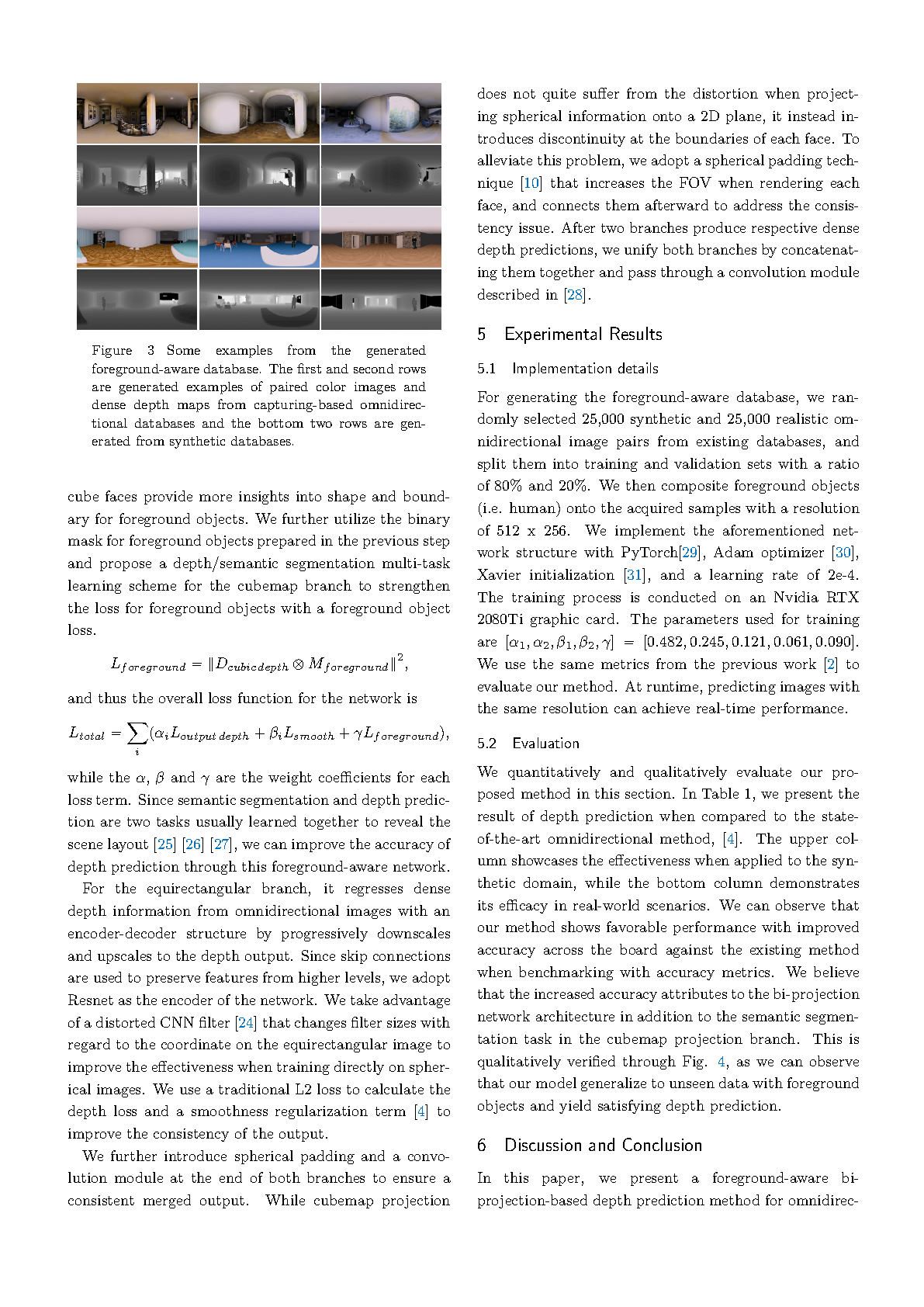

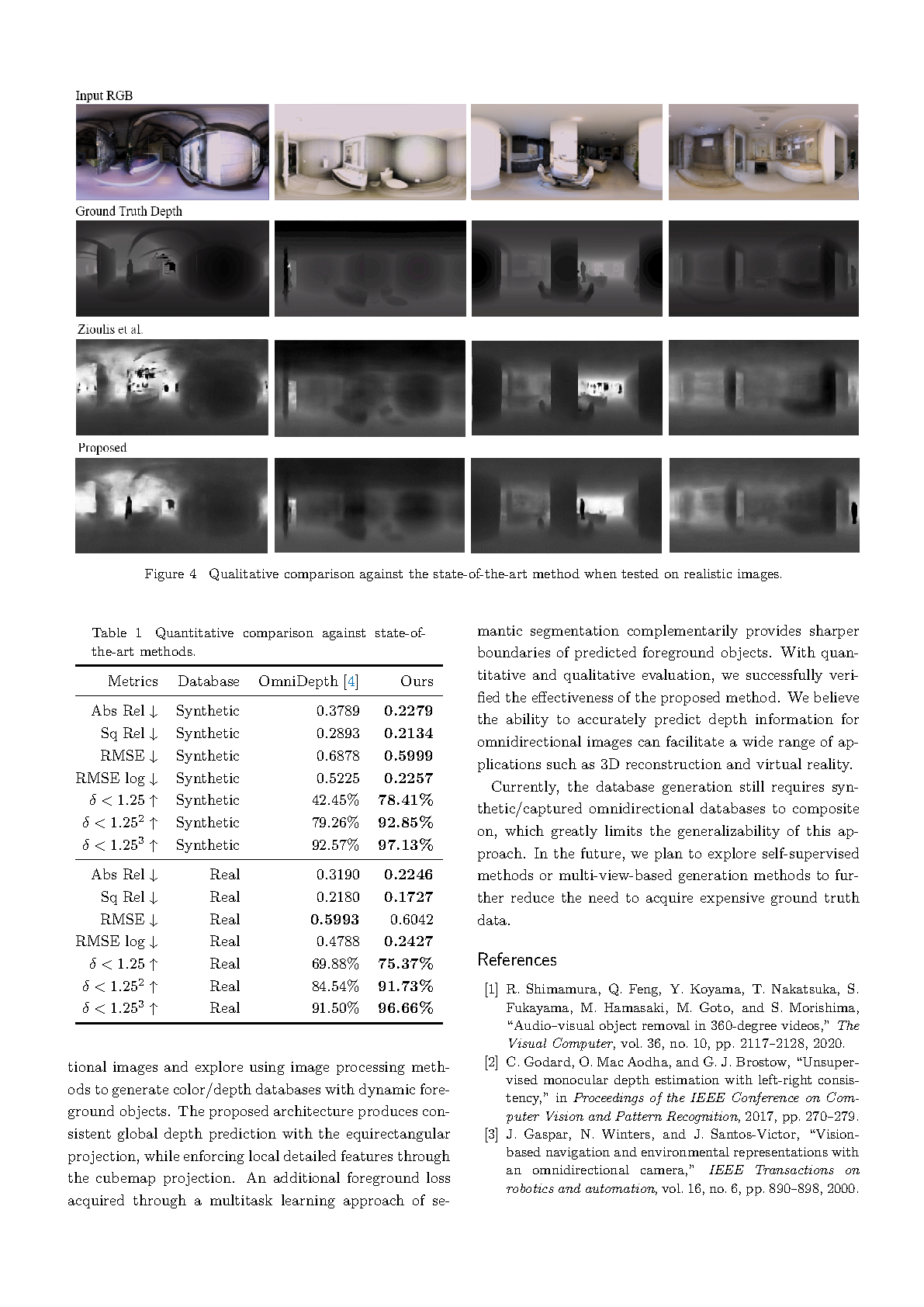

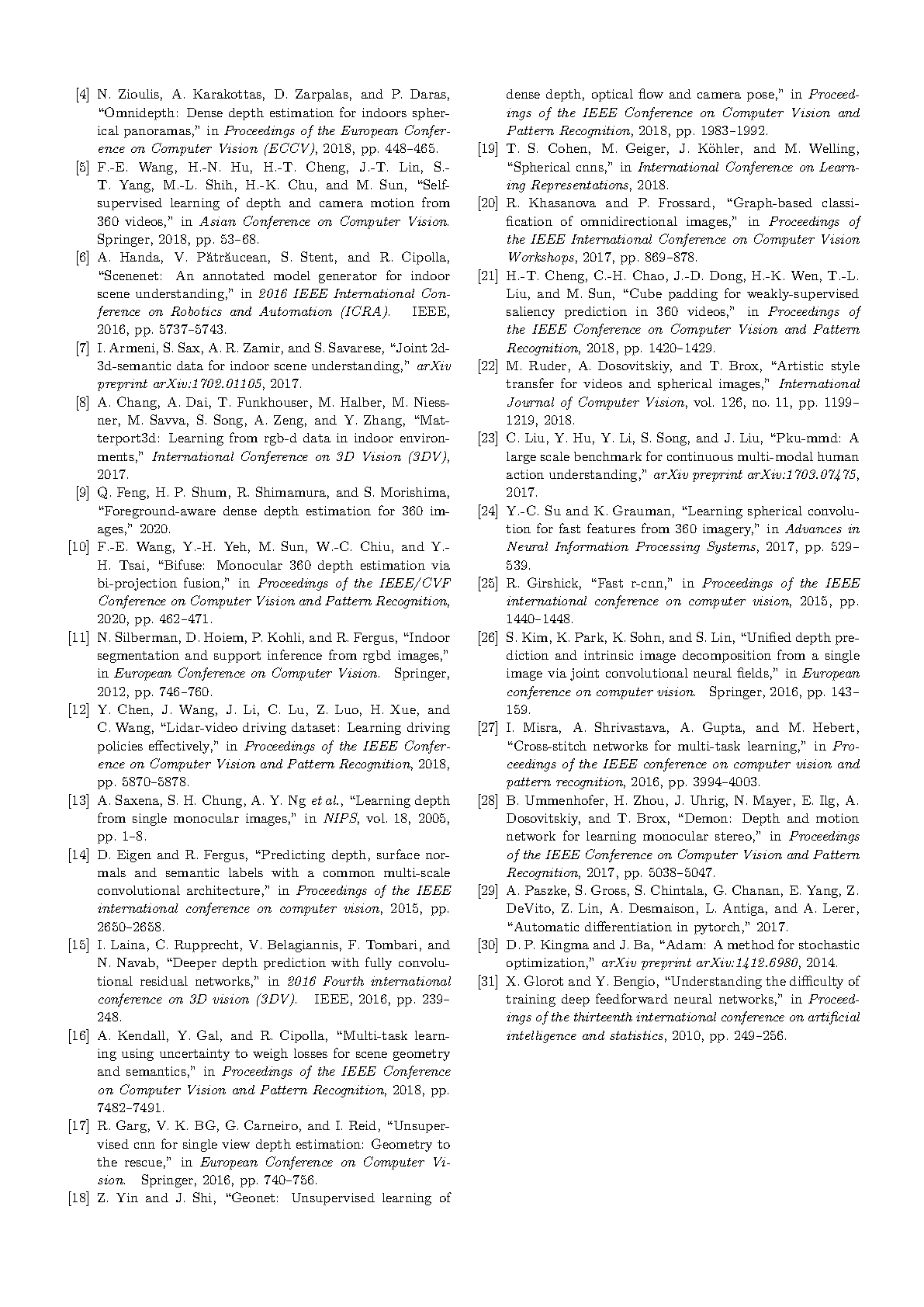

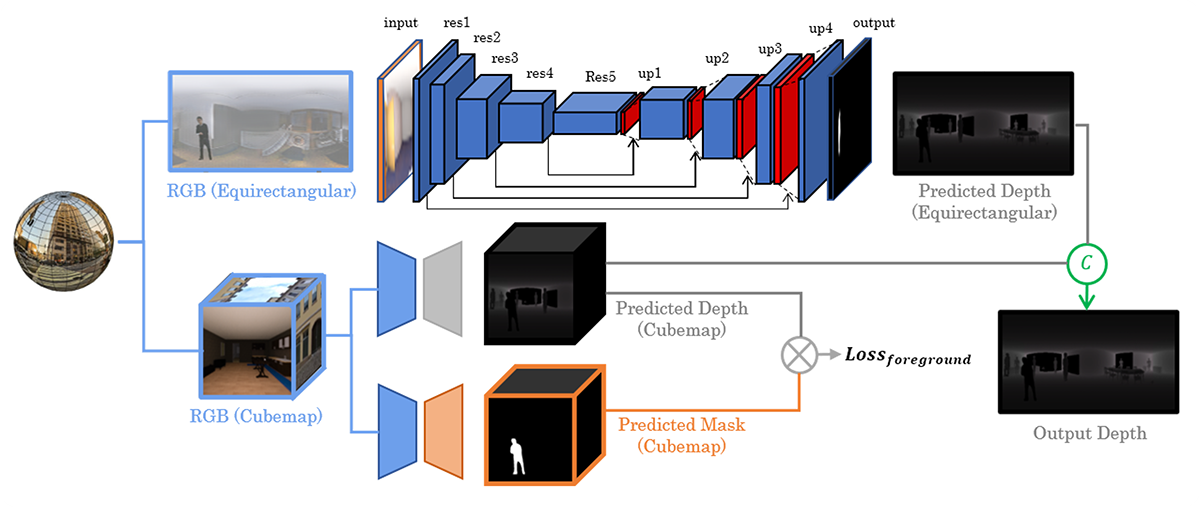

With the increasing availability of commercial 360-degree cameras, accurate depth prediction for omnidirectional images can benefit a wide range of applications, including video editing and augmented reality. Among existing methods, some focus on learning high-quality global predictions but fail to capture detailed local features. Others integrate local context into the learning procedure, yet they train on non-foreground-aware databases. In this paper, we explore the simultaneous use of equirectangular and cubemap projections to learn omnidirectional depth prediction from foreground-aware databases in a multi-task manner. Experimental results demonstrate improved performance compared to state-of-the-art methods.
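To make the relationship between the two projections concrete, the following minimal sketch maps a point on a cubemap face to its pixel position in an equirectangular image. It only illustrates the standard geometry; it is not the network or pipeline described in the paper. The face names, the axis convention (+x right, +y up, +z forward), and the function name `cube_to_equirect` are all assumptions chosen for illustration.

```python
import math

# Hypothetical face convention (an assumption, not taken from the paper):
# each face maps normalized coordinates (u, v) in [-1, 1]^2 to a 3D viewing
# direction, with +x pointing right, +y up, and +z forward.
FACE_DIRECTIONS = {
    "front": lambda u, v: (u, v, 1.0),
    "back": lambda u, v: (-u, v, -1.0),
    "right": lambda u, v: (1.0, v, -u),
    "left": lambda u, v: (-1.0, v, u),
    "up": lambda u, v: (u, 1.0, -v),
    "down": lambda u, v: (u, -1.0, v),
}


def cube_to_equirect(face, u, v, width, height):
    """Return the (column, row) in a width x height equirectangular image
    that sees the same direction as point (u, v) on the given cube face."""
    x, y, z = FACE_DIRECTIONS[face](u, v)
    # Longitude in [-pi, pi], zero at the center of the front face.
    lon = math.atan2(x, z)
    # Latitude in [-pi/2, pi/2], zero on the horizon.
    lat = math.atan2(y, math.hypot(x, z))
    # Longitude spans the full image width; wrap the seam with a modulo.
    col = ((lon / (2.0 * math.pi) + 0.5) * width) % width
    # Latitude spans the image height, with the top row at +pi/2.
    row = (0.5 - lat / math.pi) * height
    return col, row
```

Under these assumptions, the center of the front face lands at the center of the equirectangular image, while points near the up and down faces map to the heavily stretched top and bottom rows. That distortion is one common motivation for pairing the two projections.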