Improving Posture Classification Accuracy for Depth Sensor-Based Human Activity Monitoring in Smart Environments

Edmond S. L. Ho, Jacky C. P. Chan, Donald C. K. Chan, Hubert P. H. Shum, Yiu-ming Cheung and P. C. Yuen

Computer Vision and Image Understanding (CVIU), 2016

Impact Factor: 3.5† Citation: 100#

Abstract

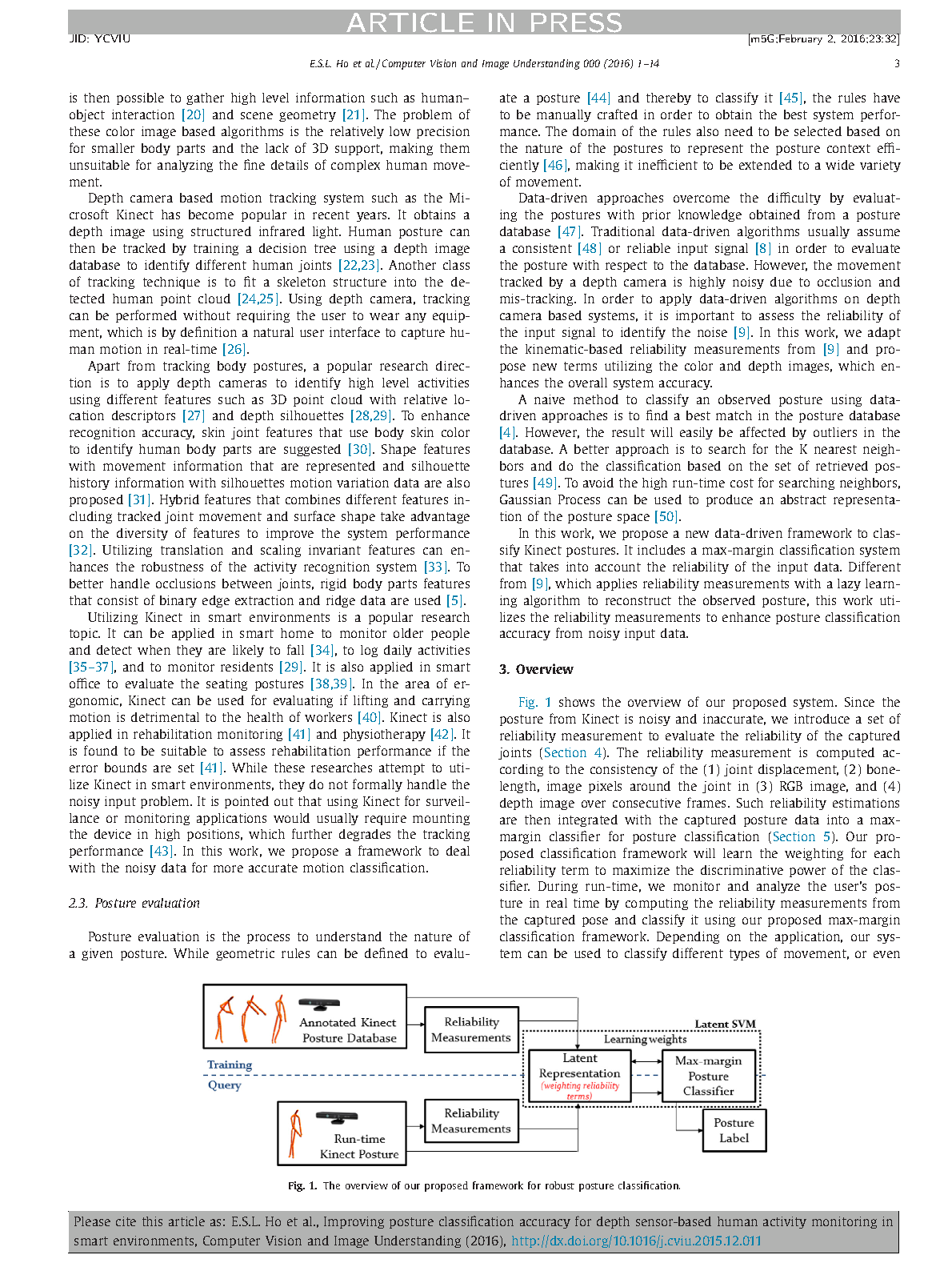

Smart environments and monitoring systems are popular research areas nowadays due to their potential to enhance the quality of life. Applications such as human behaviour analysis and workspace ergonomics monitoring can be automated, thereby improving the well-being of individuals at minimal running cost. The central problem of smart environments is to understand what the user is doing in order to provide the appropriate support. While it was difficult in the past to obtain information on full-body movement, depth camera-based motion sensing technology such as Kinect has made it possible to obtain 3D postures without a complex setup. This has fuelled a large number of research projects applying Kinect in smart environments. The common bottleneck of this research is the large number of errors in the detected joint positions, which can result in inaccurate analysis and false alarms. In this paper, we propose a framework that accurately classifies the nature of the 3D postures obtained by Kinect using a max-margin classifier. Different from previous work in the area, we integrate information about the reliability of the tracked joints in order to enhance the accuracy and robustness of our framework. As a result, apart from classifying general activities in different movement contexts, our proposed method can classify the subtle differences between correctly and incorrectly performed movements in the same context. We demonstrate how our framework can be applied to evaluate the user's posture and identify postures that may result in musculoskeletal disorders. Such a system can be used in workplaces such as offices and factories to reduce the risk of injury. Experimental results have shown that our method consistently outperforms existing algorithms in both activity classification and posture healthiness classification.
Due to the low cost and easy deployment of depth camera-based motion sensors, our framework can be applied widely in homes and offices to facilitate smart environments.
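The abstract names two ingredients, a max-margin classifier and per-joint reliability, without giving their details. The sketch below is a minimal illustration under stated assumptions and is not the authors' method. It assumes each joint carries a hypothetical reliability score in [0, 1] (for instance, mapped from the Kinect SDK's tracked / inferred / not-tracked joint state), builds features as root-relative joint positions scaled by that score, and trains a binary linear SVM with Pegasos-style subgradient descent on the regularised hinge loss. The names `posture_features`, `train_linear_svm`, `predict`, the two-joint skeleton and the toy data are all hypothetical.

```python
"""Hypothetical sketch: reliability-weighted posture features + a linear max-margin classifier.

Not the paper's method; the feature design, reliability scores and data are assumptions.
"""
from typing import List, Sequence, Tuple

# A joint is (x, y, z, reliability). The reliability in [0, 1] is an assumed score,
# e.g. 1.0 = tracked, 0.5 = inferred, 0.0 = not tracked.
Joint = Tuple[float, float, float, float]


def posture_features(joints: Sequence[Joint], root: int = 0) -> List[float]:
    """Express each non-root joint relative to the root joint, scaled by its reliability."""
    rx, ry, rz, _ = joints[root]
    feats: List[float] = []
    for i, (x, y, z, r) in enumerate(joints):
        if i == root:
            continue
        # Unreliable joints are pulled towards zero, so they influence the score less.
        feats.extend([r * (x - rx), r * (y - ry), r * (z - rz)])
    feats.append(1.0)  # constant feature acting as a (regularised) bias term
    return feats


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(w, x))


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, epochs: int = 5000) -> List[float]:
    """Pegasos-style subgradient descent on the L2-regularised hinge loss.

    Samples are visited in a fixed cyclic order (not at random) so results are deterministic.
    """
    w = [0.0] * len(X[0])
    t = 0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            t += 1
            eta = 1.0 / (lam * t)
            violated = yi * dot(w, xi) < 1.0          # margin test with the current w
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrink step from the L2 regulariser
            if violated:                              # hinge-loss subgradient step
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
    return w


def predict(w: Sequence[float], joints: Sequence[Joint]) -> int:
    return 1 if dot(w, posture_features(joints)) >= 0.0 else -1


# Toy data: joint 0 = spine (root), joint 1 = head; +1 = upright, -1 = stooped.
SPINE: Joint = (0.0, 1.0, 2.0, 1.0)
train = [
    ([SPINE, (0.0, 1.6, 2.0, 1.0)], 1),
    ([SPINE, (0.05, 1.55, 2.1, 1.0)], 1),
    ([SPINE, (0.0, 1.2, 1.7, 1.0)], -1),
    ([SPINE, (0.05, 1.25, 1.8, 1.0)], -1),
]
X = [posture_features(j) for j, _ in train]
labels = [lbl for _, lbl in train]
w = train_linear_svm(X, labels)
print(predict(w, [SPINE, (0.0, 1.58, 2.05, 1.0)]))  # upright query → 1
print(predict(w, [SPINE, (0.0, 1.22, 1.75, 1.0)]))  # stooped query → -1
```

Scaling coordinates by reliability is only one simple way to let unreliable joints contribute less. Note that a joint with reliability 0 leaves only the bias feature, so the classifier then falls back on the bias alone. A real system would need a more careful reliability model and more joints than this two-joint toy.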


Supporting Grants

EPSRC First Grant Scheme (Ref: EP/M002632/1): £123,819, Principal Investigator

Received from the Engineering and Physical Sciences Research Council, UK, 2014-2016
