High-Speed Multi-Person Pose Estimation with Deep Feature Transfer

Ying Huang, Hubert P. H. Shum, Edmond S. L. Ho and Nauman Aslam

Computer Vision and Image Understanding (CVIU), 2020

Impact Factor: 3.5† Citation: 16#

Abstract

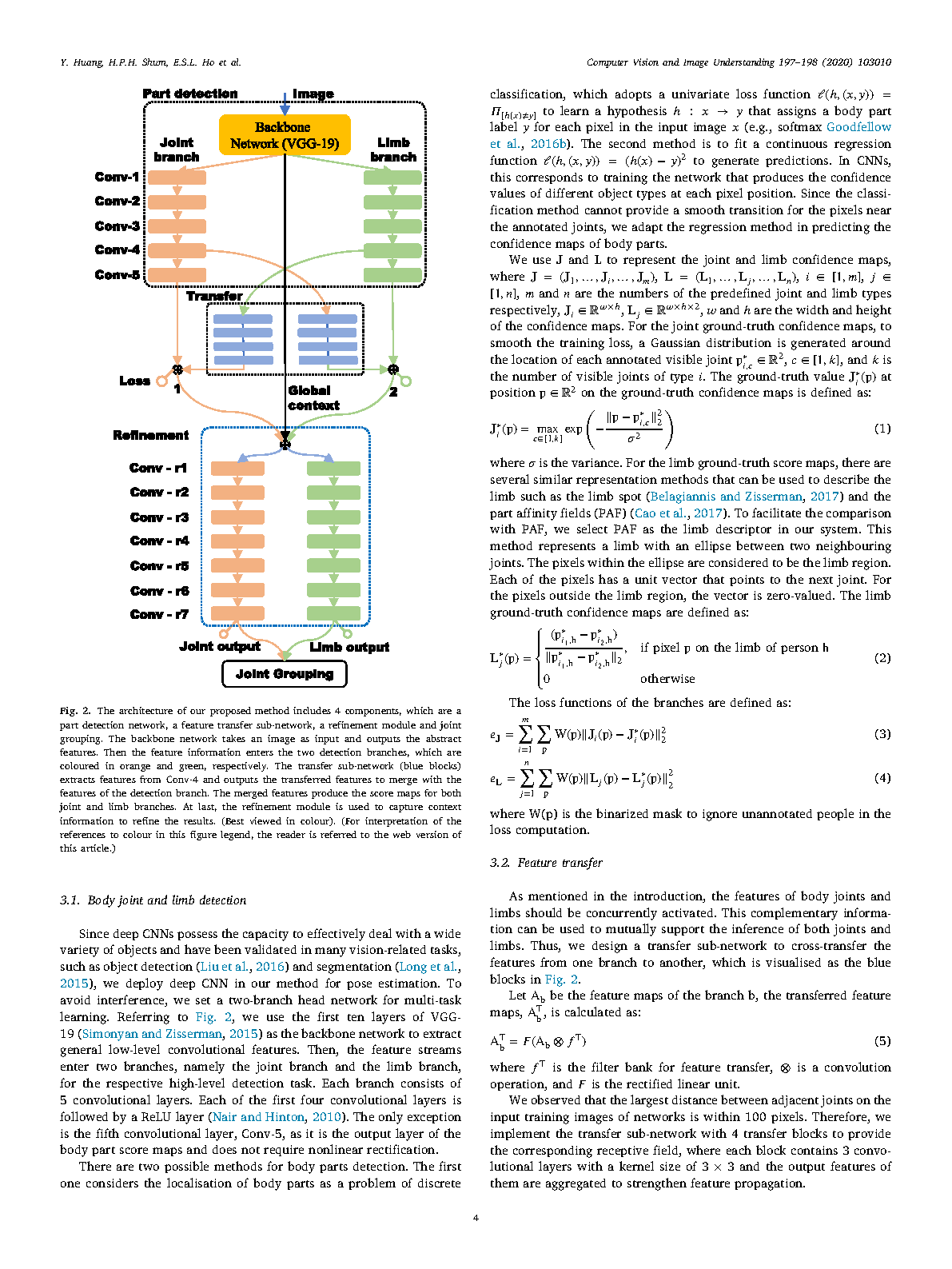

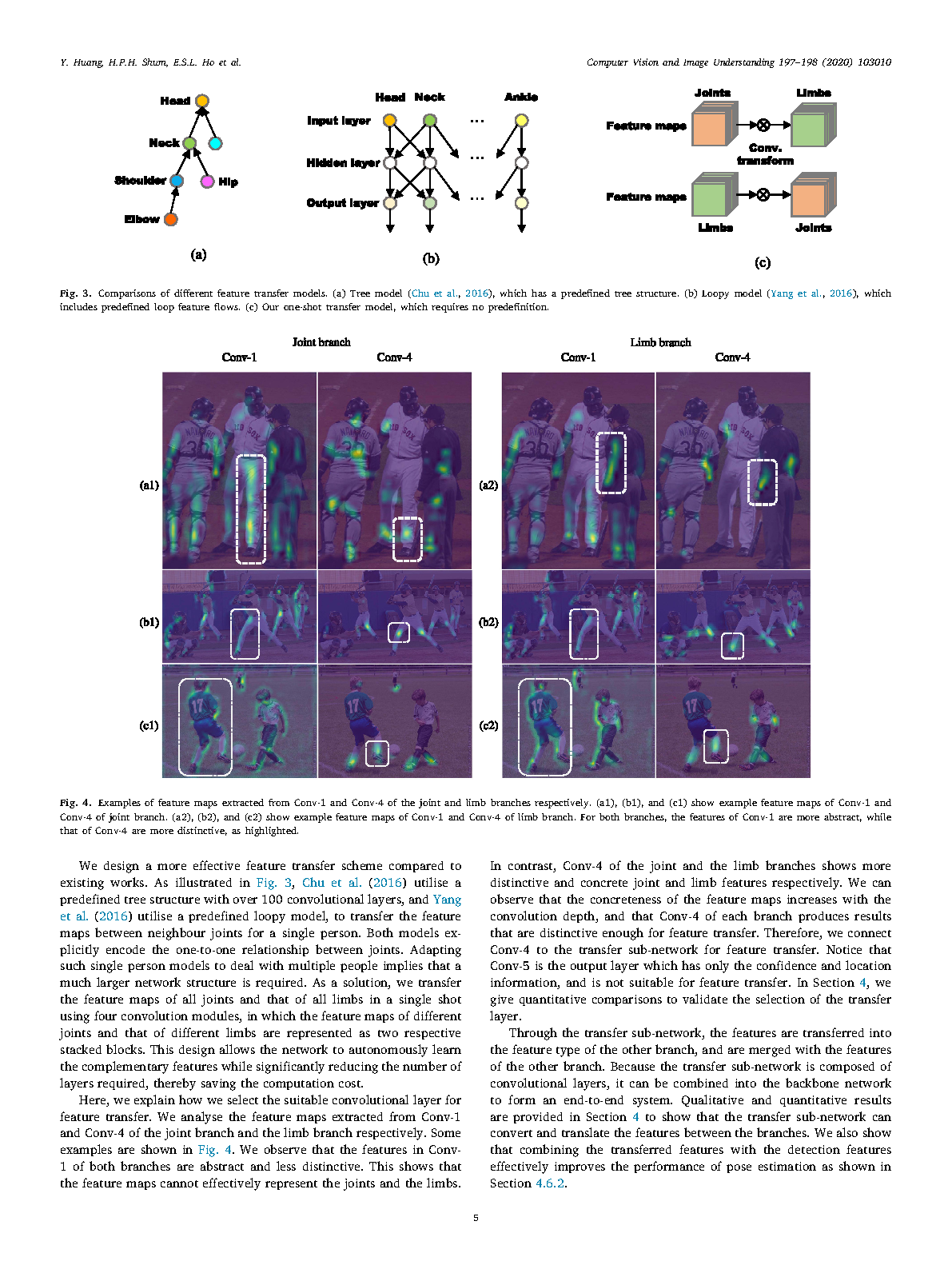

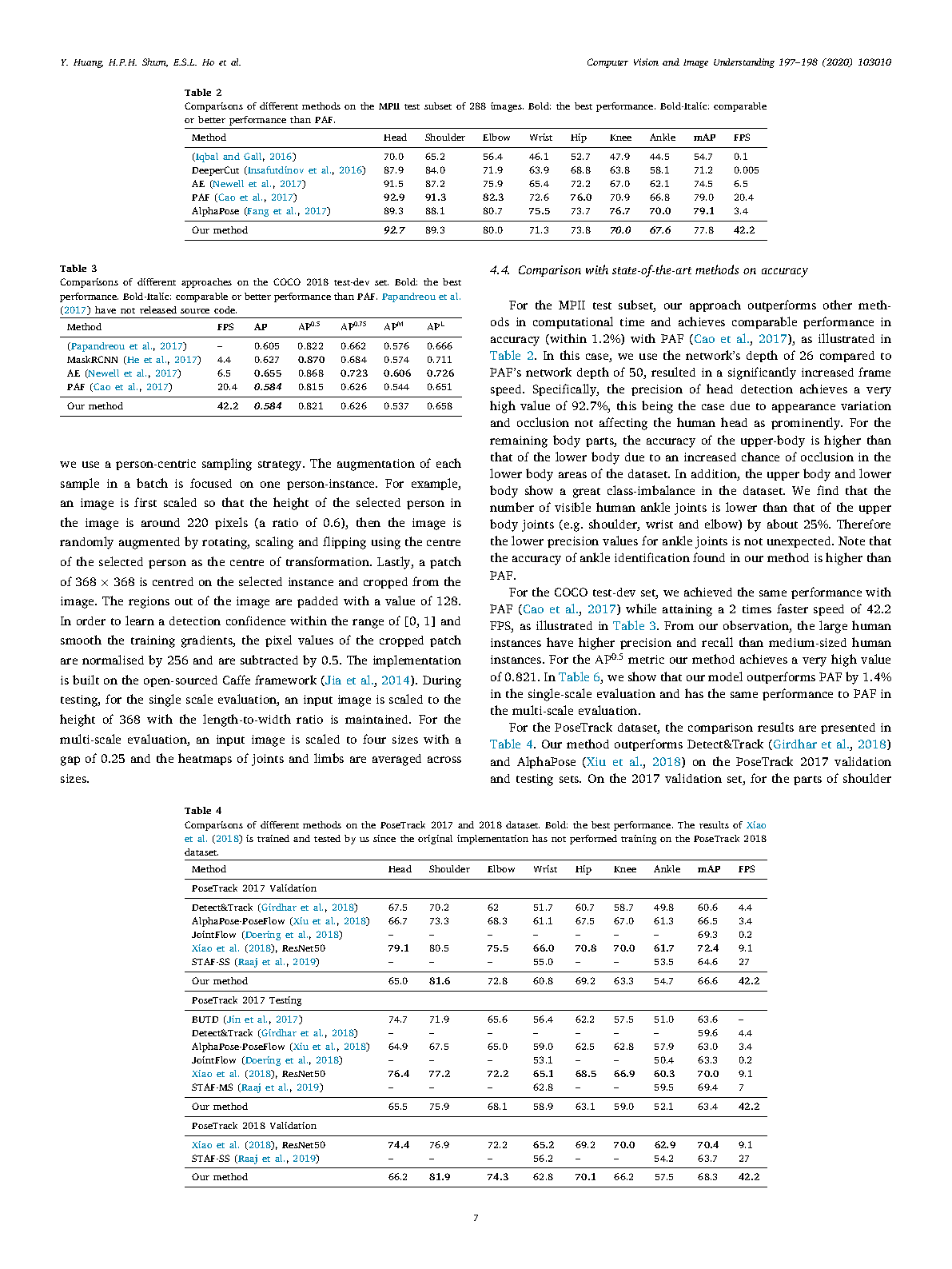

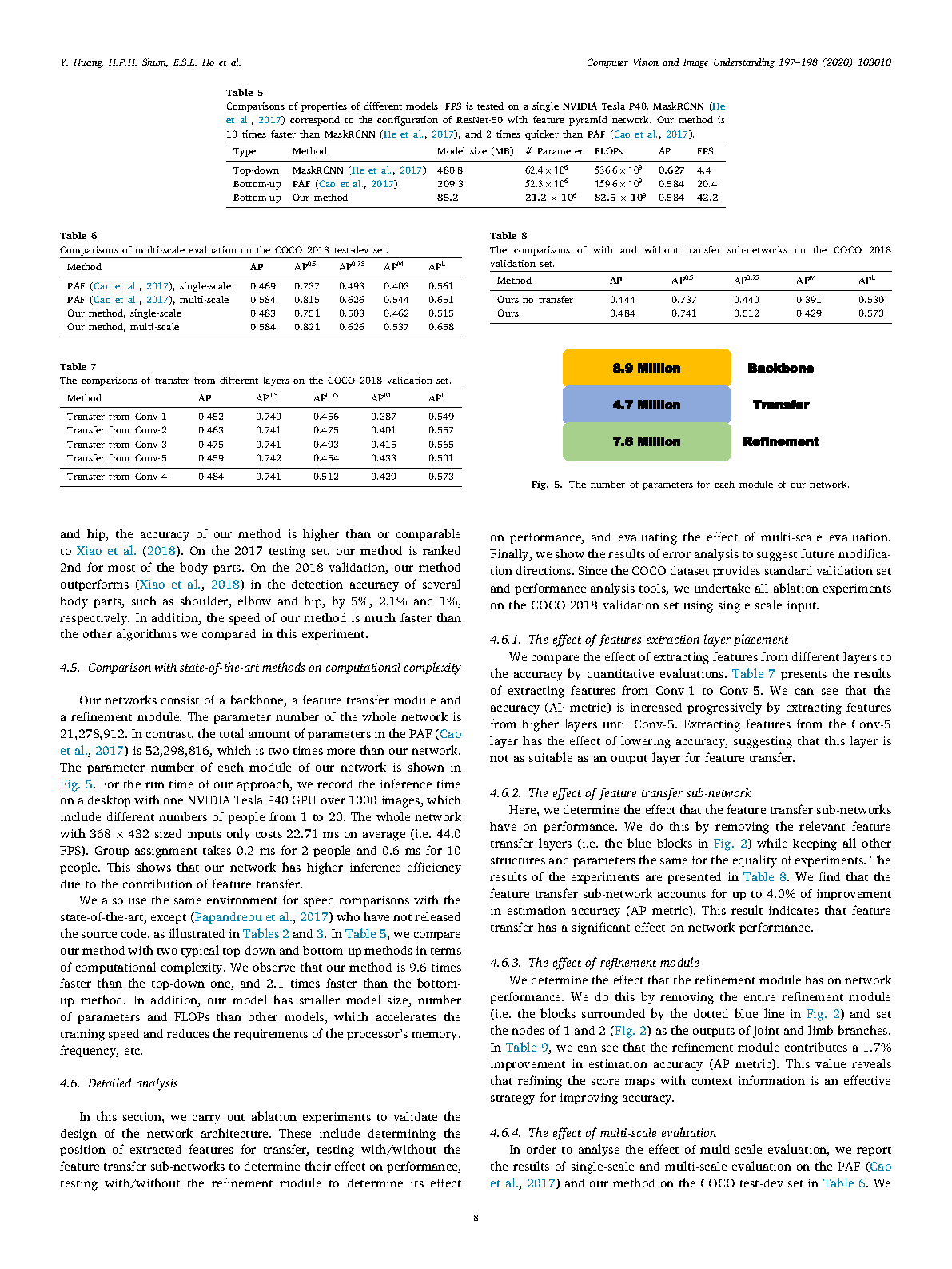

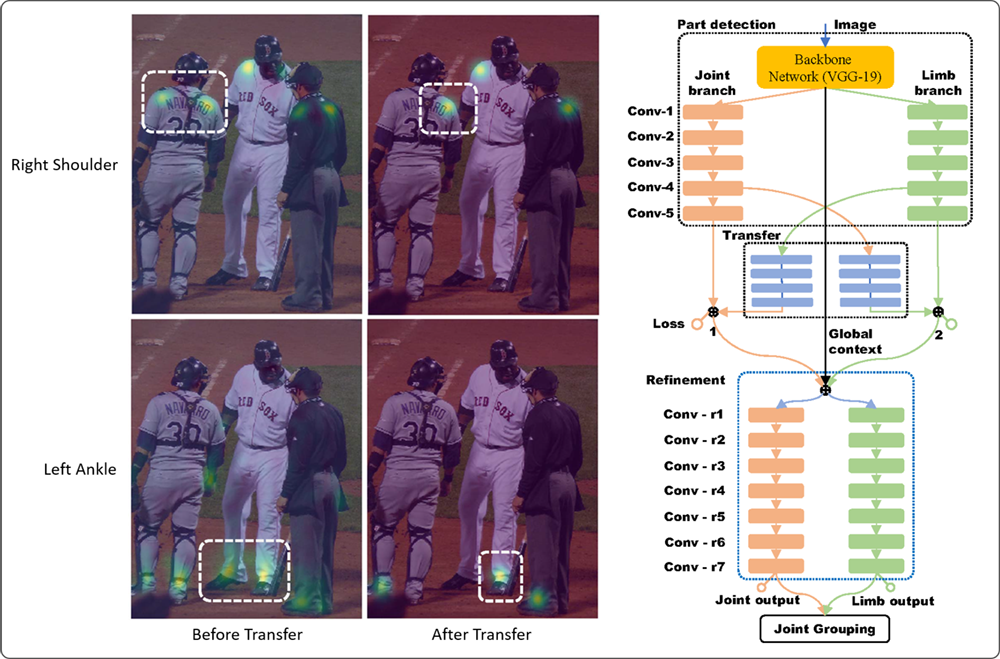

Recent advancements in deep learning have significantly improved the accuracy of multi-person pose estimation from RGB images. However, these deep learning methods typically rely on a large number of deep refinement modules to refine the features of body joints and limbs, which greatly reduces the run-time speed and therefore limits the application domain. In this paper, we propose a feature transfer framework to capture the concurrent correlations between body joint and limb features. The concurrent correlations of these features form a complementary structural relationship, which mutually strengthens the network’s inferences and reduces the need for refinement modules. The transfer sub-network is implemented with multiple convolutional layers and is merged with the body part detection network to form an end-to-end system. The transfer relationship is automatically learned from ground-truth data instead of being manually encoded, resulting in a more general and efficient design. The proposed framework is validated on multiple popular multi-person pose estimation benchmarks: MPII, COCO 2018, and PoseTrack 2017 and 2018. Experimental results show that our method not only significantly increases the inference speed to 73.8 frames per second (FPS), but also attains performance comparable to the state of the art.
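To make the idea of concurrent feature transfer concrete, the following is a minimal sketch and not the paper's implementation. It assumes that each branch (joints and limbs) is refined by adding a 1x1-convolution projection of the other branch's features. The function names (`conv1x1`, `add_maps`, `transfer`), the residual addition, and the hand-set weights are all hypothetical. In the proposed framework the transfer relationship is learned from ground-truth data and uses multiple convolutional layers. Feature maps are plain nested lists indexed as `[channel][row][column]` so the example has no dependencies.

```python
def conv1x1(features, weights):
    """Mix channels at every pixel: out[o][y][x] = sum_i weights[o][i] * features[i][y][x]."""
    height, width = len(features[0]), len(features[0][0])
    return [
        [[sum(w * ch[y][x] for w, ch in zip(row, features)) for x in range(width)]
         for y in range(height)]
        for row in weights  # one weight row per output channel
    ]


def add_maps(a, b):
    """Element-wise sum of two feature maps with identical shape."""
    return [
        [[p + q for p, q in zip(row_a, row_b)] for row_a, row_b in zip(ch_a, ch_b)]
        for ch_a, ch_b in zip(a, b)
    ]


def transfer(joint_feats, limb_feats, w_limb_to_joint, w_joint_to_limb):
    """One concurrent transfer step (assumed form): both branches are updated
    from the *original* features of the other branch, not sequentially."""
    new_joint = add_maps(joint_feats, conv1x1(limb_feats, w_limb_to_joint))
    new_limb = add_maps(limb_feats, conv1x1(joint_feats, w_joint_to_limb))
    return new_joint, new_limb


# Toy input: 2 joint channels and 1 limb channel on a 2x2 grid.
joint = [[[1.0, 0.0], [0.0, 0.0]],
         [[0.0, 0.0], [0.0, 1.0]]]
limb = [[[0.5, 0.5], [0.5, 0.5]]]

# Hand-set weights for illustration only; a real network would learn these.
w_limb_to_joint = [[1.0], [2.0]]   # 1 limb channel  -> 2 joint channels
w_joint_to_limb = [[1.0, -1.0]]    # 2 joint channels -> 1 limb channel

new_joint, new_limb = transfer(joint, limb, w_limb_to_joint, w_joint_to_limb)
print(new_joint)
print(new_limb)
```

Both updates read the original features of the other branch, which is one way to interpret "concurrent" here. A sequential variant, in which the limb branch sees the already-updated joint features, would be a different design choice.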

Cite This Research

Supporting Grants

Erasmus Mundus Action 2 Programme (Ref: 2014-0861/001-001): €3.03 million, Northumbria University Funding Management Leader (PI: Prof. Nauman Aslam)

Received from Erasmus Mundus, 2015-2018

Project Page