Arbitrary View Action Recognition via Transfer Dictionary Learning on Synthetic Training Data

Jingtian Zhang, Lining Zhang, Hubert P. H. Shum and Ling Shao

Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), 2016

H5-Index: 129; Core A* Conference; Citations: 16

Abstract

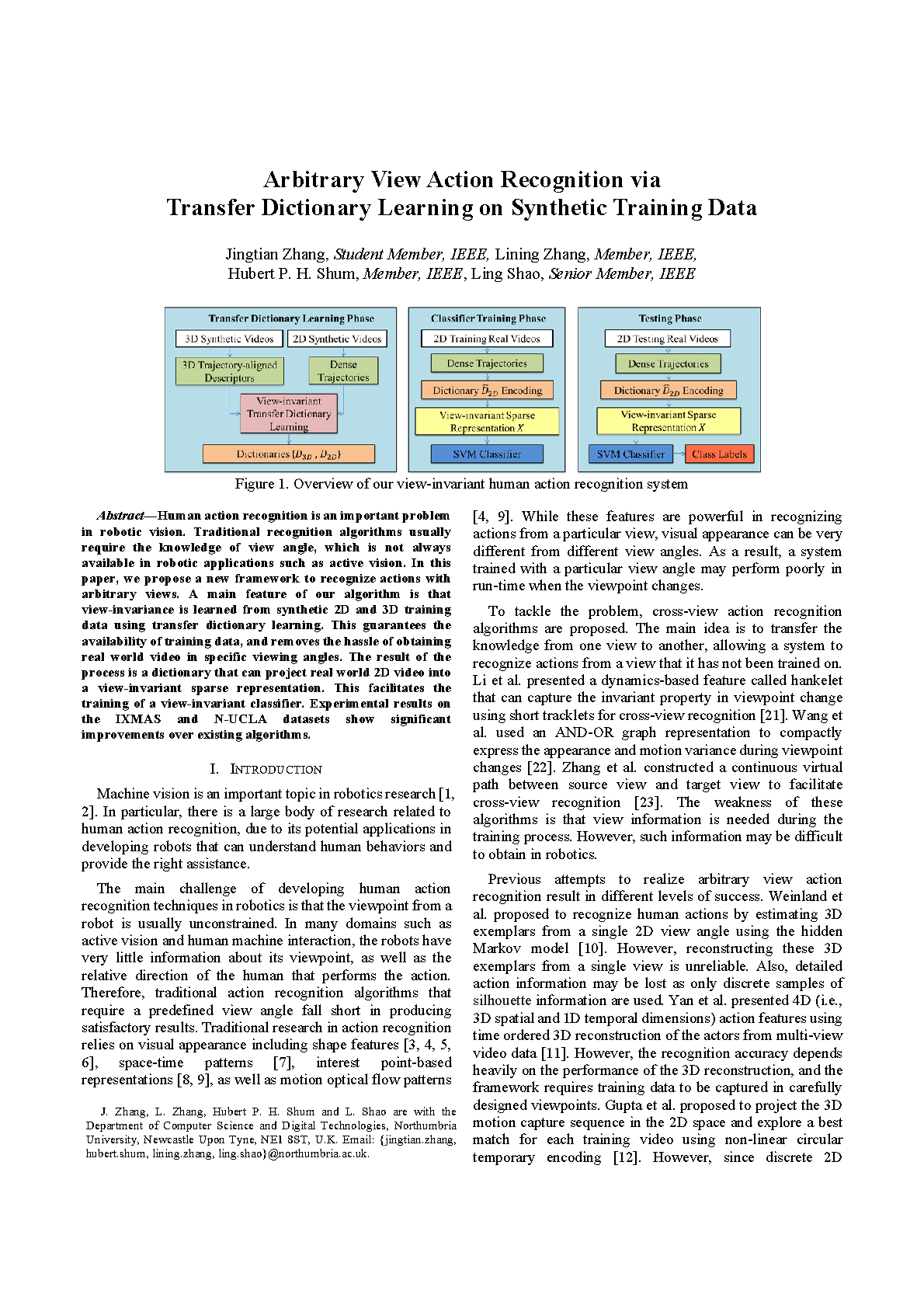

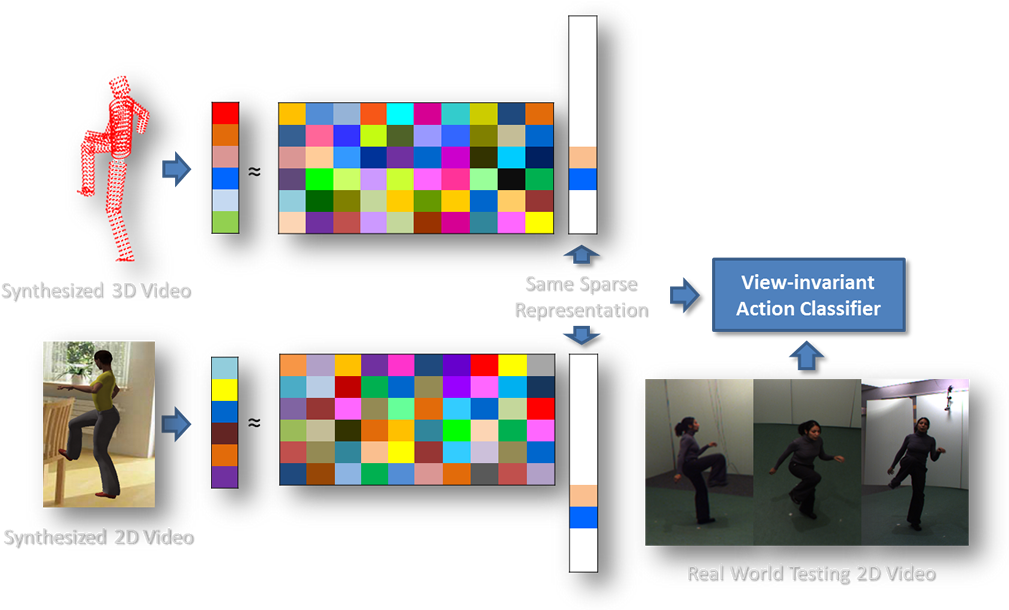

Human action recognition is an important problem in robotic vision. Traditional recognition algorithms usually require knowledge of the view angle, which is not always available in robotic applications such as active vision. In this paper, we propose a new framework to recognize actions from arbitrary views. A main feature of our algorithm is that view invariance is learned from synthetic 2D and 3D training data using transfer dictionary learning. This guarantees the availability of training data and removes the hassle of obtaining real-world video from specific viewing angles. The result of the process is a dictionary that can project real-world 2D video into a view-invariant sparse representation, which facilitates the training of a view-invariant classifier. Experimental results on the IXMAS and N-UCLA datasets show significant improvements over existing algorithms.
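The abstract does not give the learning objective, so the following is only a rough sketch of the general idea behind transfer (coupled) dictionary learning, not the authors' algorithm. It assumes paired feature matrices for the same action samples seen from two views (for example, a synthetic source view and a target view, one column per sample) and learns two view-specific dictionaries that share a single sparse code per sample. It does this by stacking the two views, then alternating ISTA sparse coding with a least-squares dictionary update. All function names and parameters (`lam`, `n_atoms`, `n_outer`) are hypothetical choices made for illustration.

```python
import numpy as np


def soft_threshold(x, t):
    """Elementwise soft-thresholding: the proximal operator of t * ||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def sparse_code(D, X, lam, n_iter=200):
    """Approximately solve min_A 0.5*||X - D A||_F^2 + lam*||A||_1 with ISTA."""
    L = np.linalg.norm(D, 2) ** 2  # spectral norm squared = Lipschitz constant of the gradient
    A = np.zeros((D.shape[1], X.shape[1]))
    for _ in range(n_iter):
        grad = D.T @ (D @ A - X)
        A = soft_threshold(A - grad / L, lam / L)
    return A


def learn_coupled_dictionaries(X_src, X_tgt, n_atoms, lam=0.1, n_outer=20, seed=0):
    """Learn D_src, D_tgt whose atoms share one sparse code per paired sample.

    X_src: (d_src, n) and X_tgt: (d_tgt, n); column j of both matrices is
    assumed to describe the same action instance seen from two views.
    """
    rng = np.random.default_rng(seed)
    X = np.vstack([X_src, X_tgt])  # stacking forces one code to explain both views
    D = rng.standard_normal((X.shape[0], n_atoms))
    D /= np.linalg.norm(D, axis=0, keepdims=True)
    for _ in range(n_outer):
        A = sparse_code(D, X, lam)
        # Least-squares dictionary update for fixed codes.
        D = X @ np.linalg.pinv(A)
        # Re-draw atoms that no sample uses, then renormalise every atom.
        dead = np.linalg.norm(D, axis=0) < 1e-12
        D[:, dead] = rng.standard_normal((D.shape[0], int(dead.sum())))
        D /= np.linalg.norm(D, axis=0, keepdims=True)
    d = X_src.shape[0]
    return D[:d], D[d:]  # split back into view-specific dictionaries
```

In this sketch, a single-view feature `x` could be encoded with `sparse_code(D_src, x, lam)` and the resulting code passed to any classifier. The stacking trick is the simplest way to tie two dictionaries to shared codes. Whether the codes are actually view-invariant depends on the features and the objective, and this toy version does not guarantee it.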


Supporting Grants

Received from the Faculty of Engineering and Environment, Northumbria University, UK, 2015-2018
