Unmanned Aerial Vehicle Visual Detection and Tracking using Deep Neural Networks: A Performance Benchmark

Brian K. S. Isaac-Medina, Matthew Poyser, Daniel Organisciak, Chris G. Willcocks, Toby P. Breckon and Hubert P. H. Shum

Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), 2021

Abstract

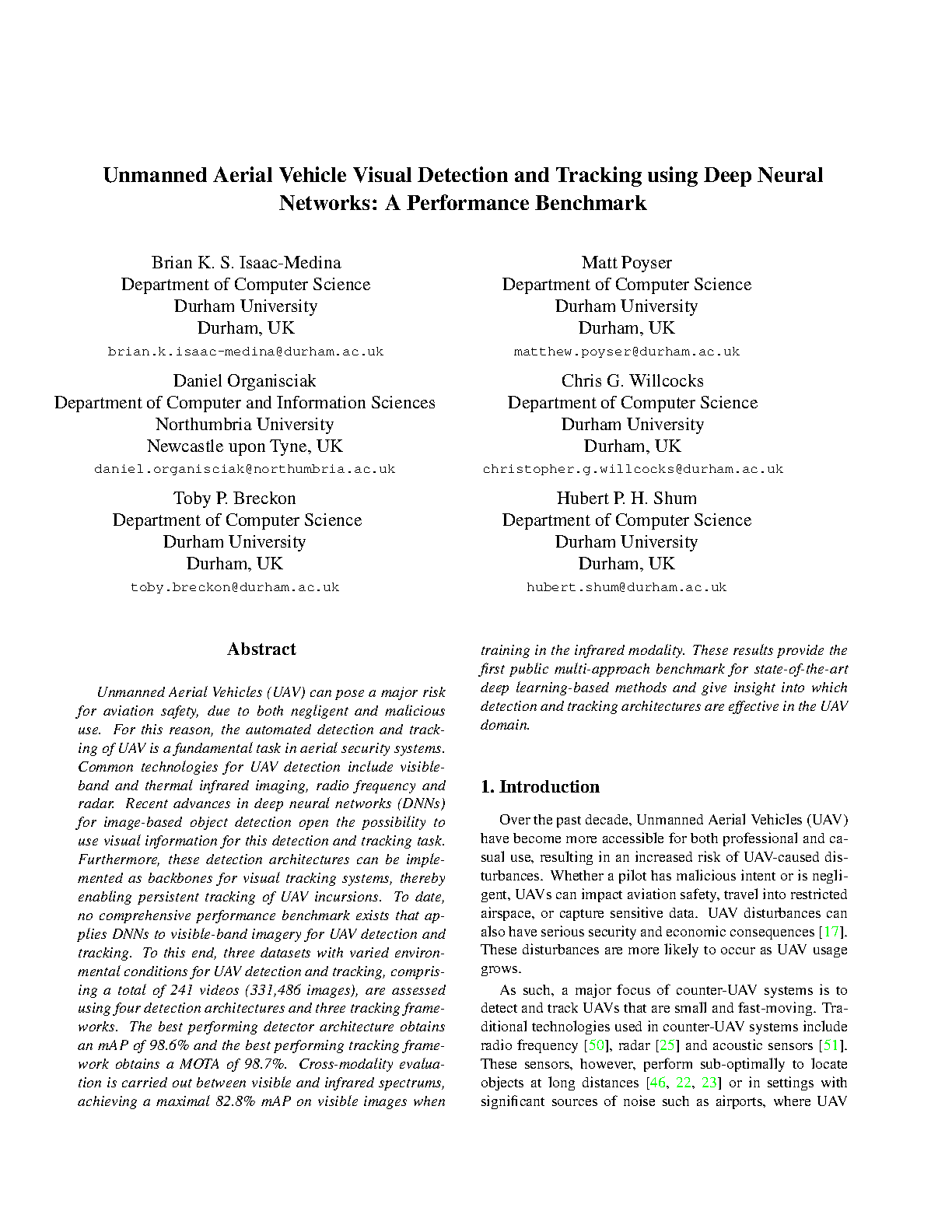

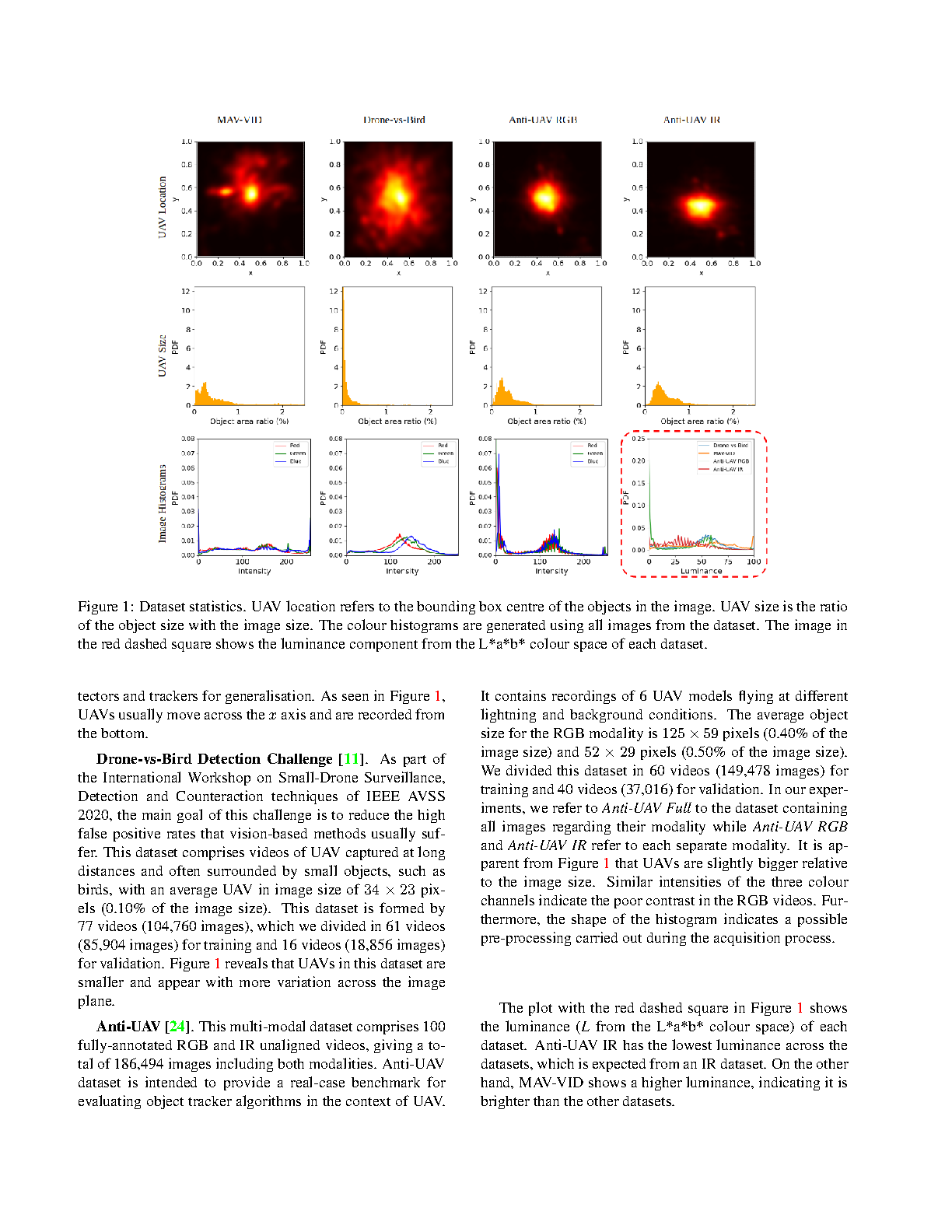

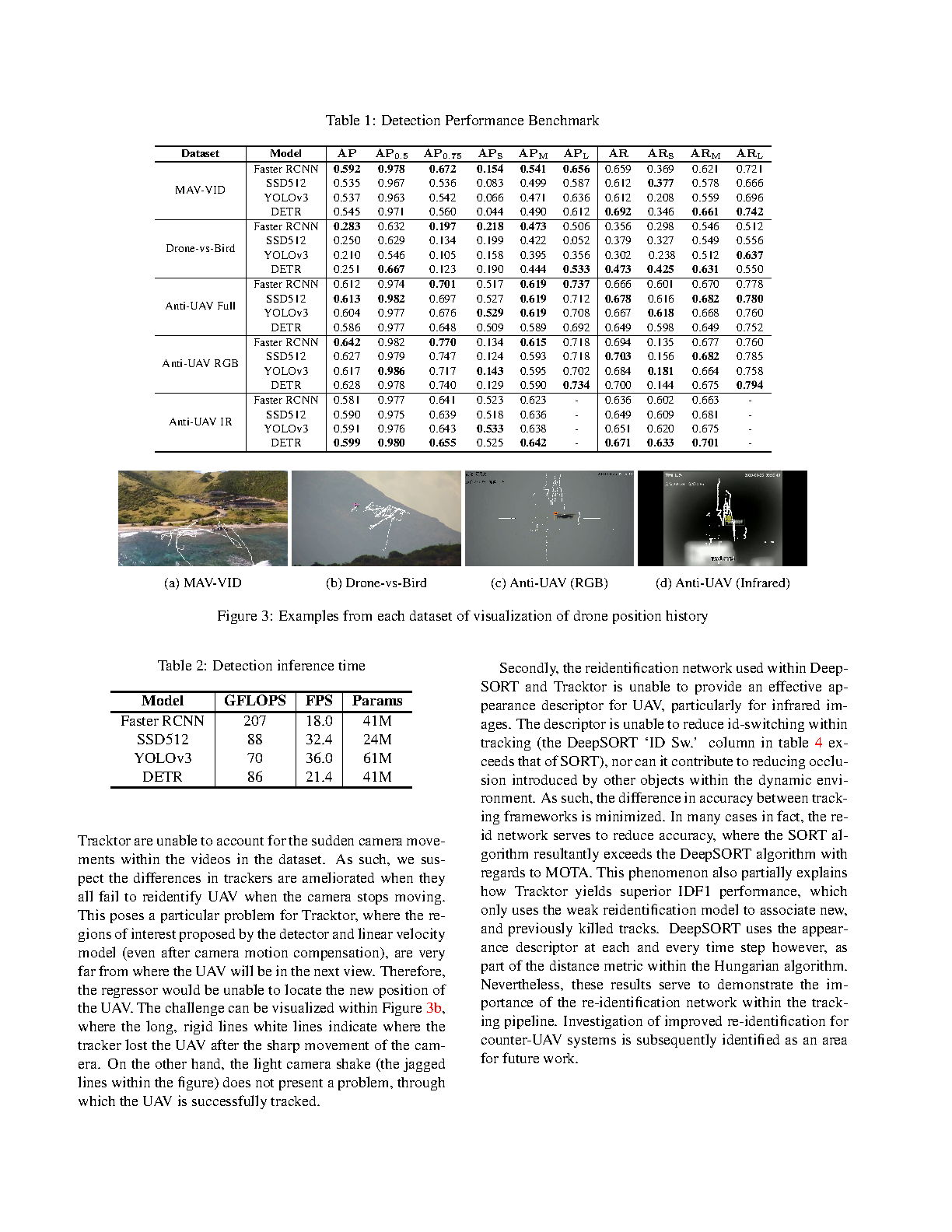

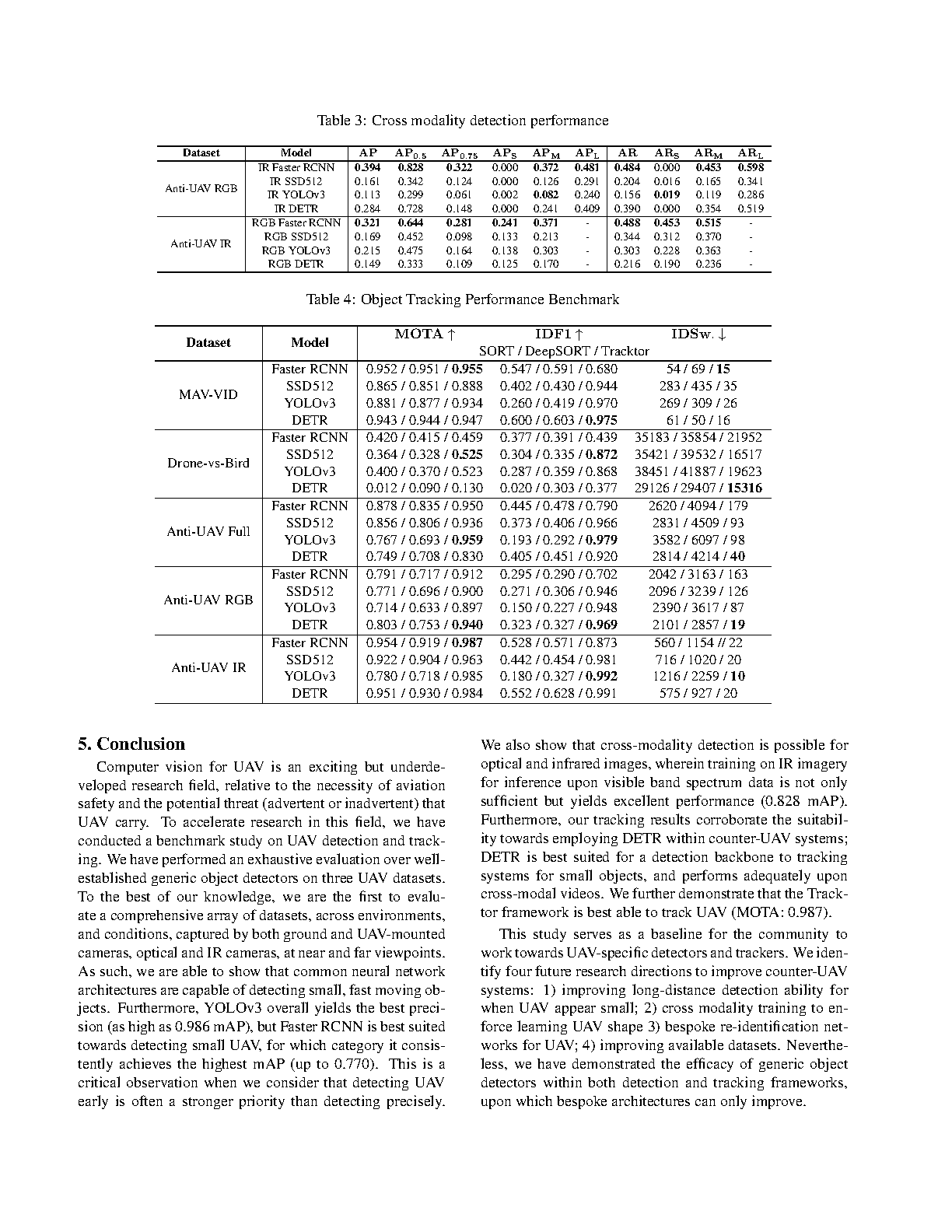

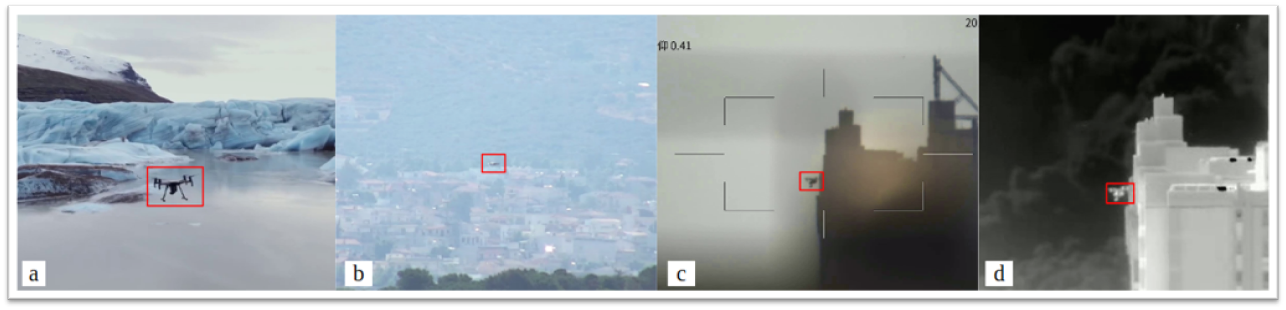

Unmanned Aerial Vehicles (UAVs) can pose a major risk to aviation safety, due to both negligent and malicious use. For this reason, the automated detection and tracking of UAVs is a fundamental task in aerial security systems. Common technologies for UAV detection include visible-band and thermal infrared imaging, radio frequency and radar. Recent advances in deep neural networks (DNNs) for image-based object detection open the possibility of using visual information for this detection and tracking task. Furthermore, these detection architectures can be implemented as backbones for visual tracking systems, thereby enabling persistent tracking of UAV incursions. To date, no comprehensive performance benchmark exists that applies DNNs to visible-band imagery for UAV detection and tracking. To this end, three datasets with varied environmental conditions for UAV detection and tracking, comprising a total of 241 videos (331,486 images), are assessed using four detection architectures and three tracking frameworks. The best-performing detector architecture obtains an mAP of 98.6% and the best-performing tracking framework obtains a MOTA of 98.7%. Cross-modality evaluation is carried out between the visible and infrared spectra, achieving a maximum of 82.8% mAP on visible images when training in the infrared modality. These results provide the first public multi-approach benchmark for state-of-the-art deep learning-based methods and give insight into which detection and tracking architectures are effective in the UAV domain.

Supporting Grants

Security Technology Research Innovation Grants Programme (S-TRIG) (Ref: 007CD): £32,727, Principal Investigator

Received from The Catapult Network (S-TRIG), UK, 2020-2021

Project Page

Received from Faculty of Engineering and Environment, Northumbria University, UK, 2018-2021

Project Page