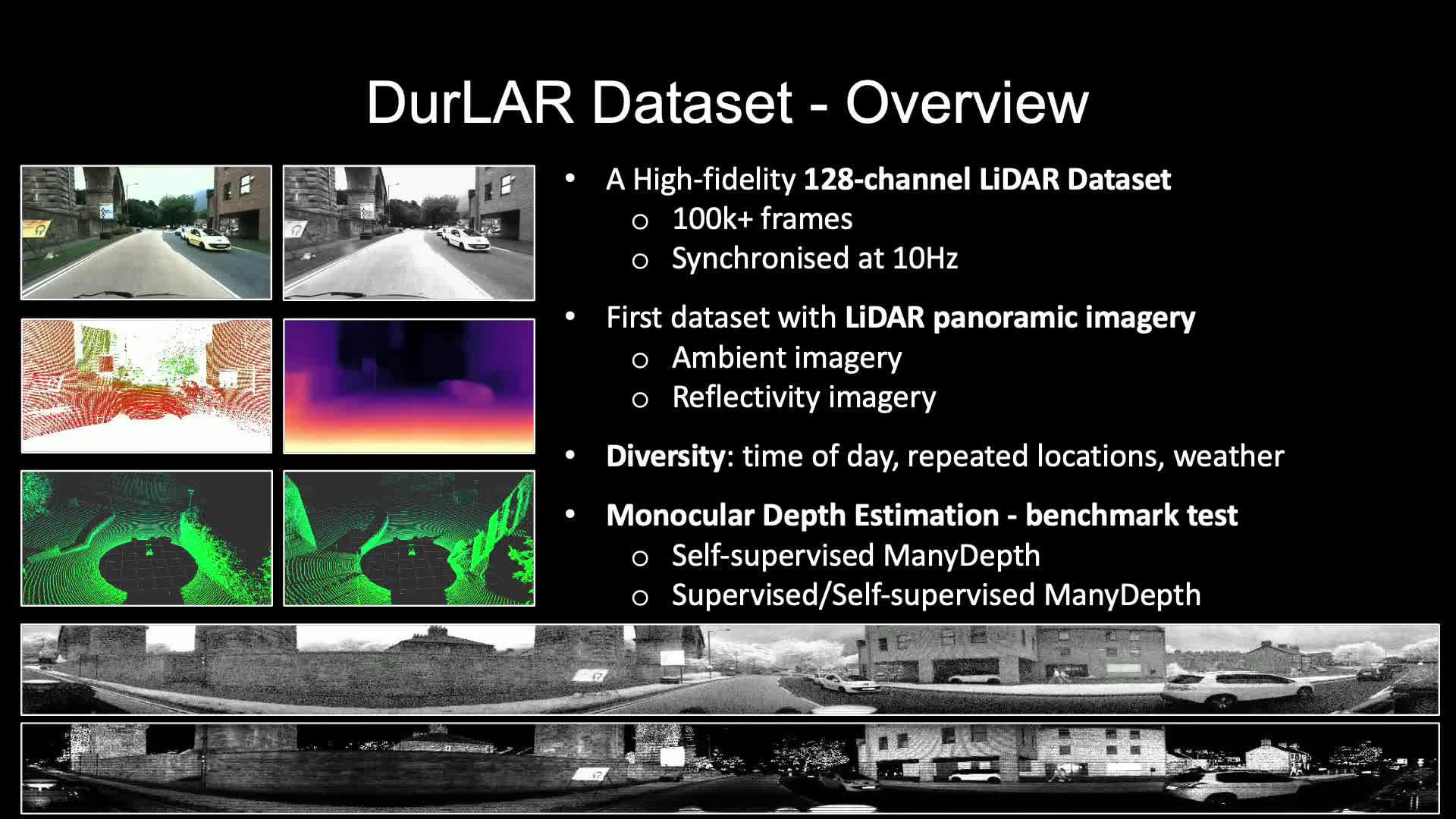

DurLAR: A High-fidelity 128-Channel LiDAR Dataset with Panoramic Ambient and Reflectivity Imagery for Multi-Modal Autonomous Driving Applications

Li Li, Khalid N. Ismail, Hubert P. H. Shum and Toby P. Breckon

Proceedings of the 2021 International Conference on 3D Vision (3DV), 2021

Abstract

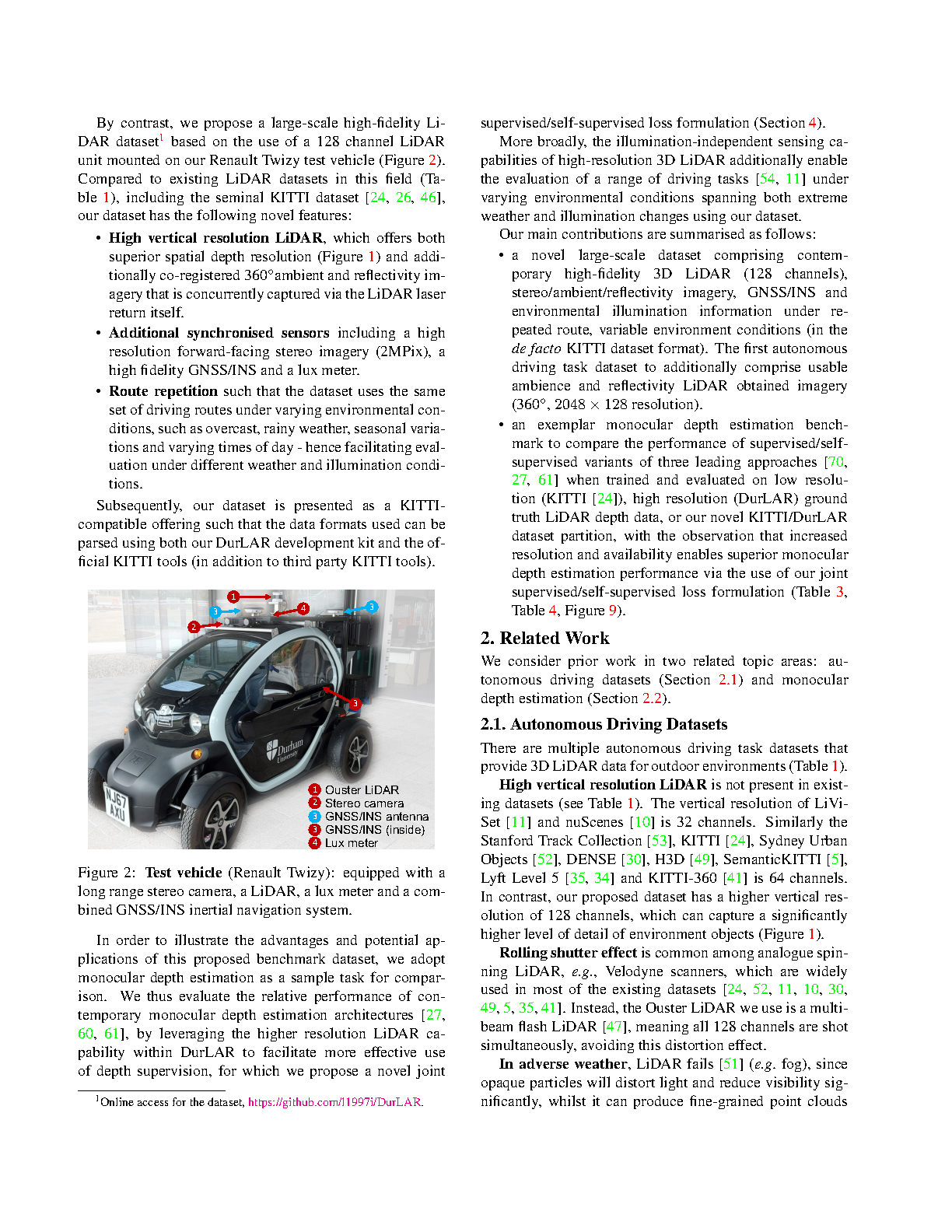

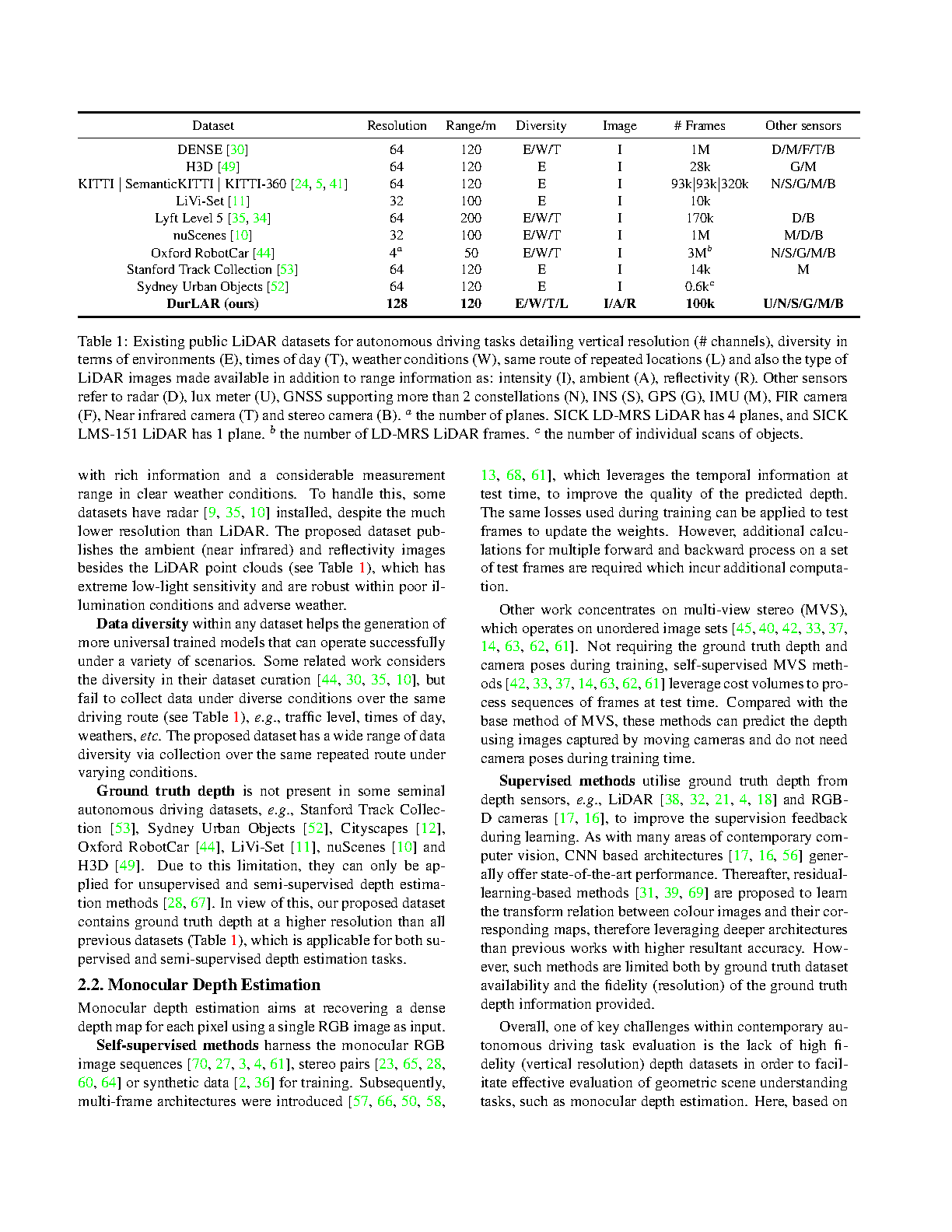

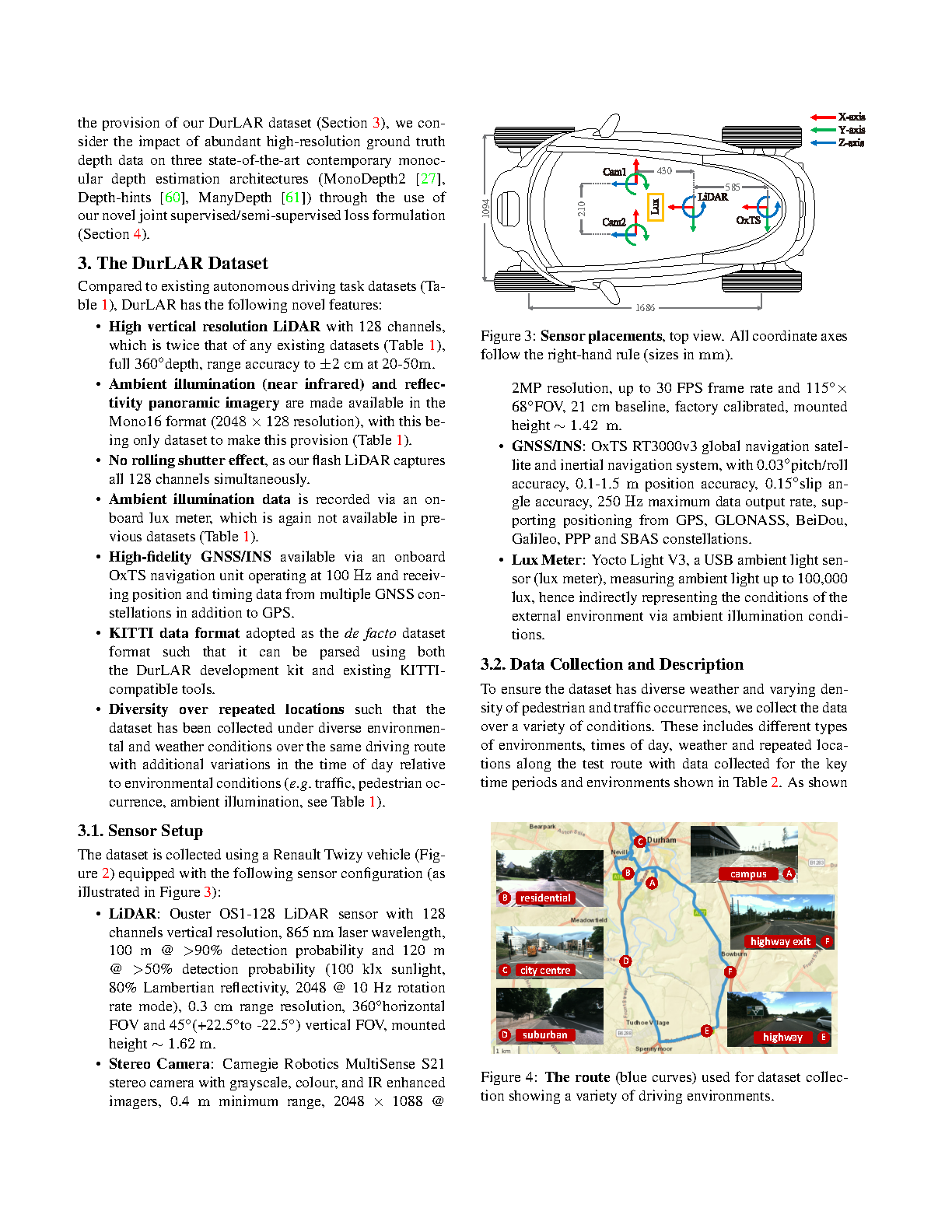

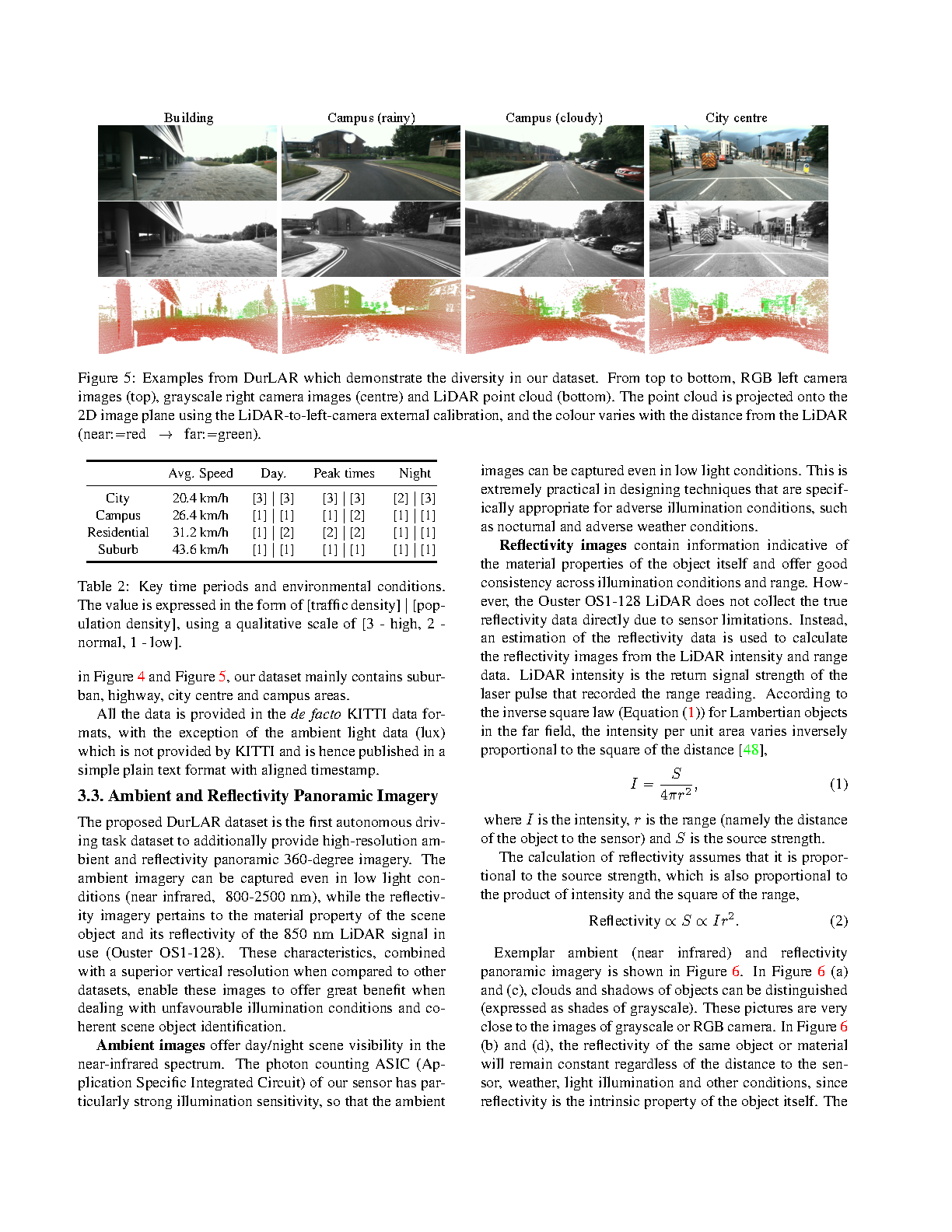

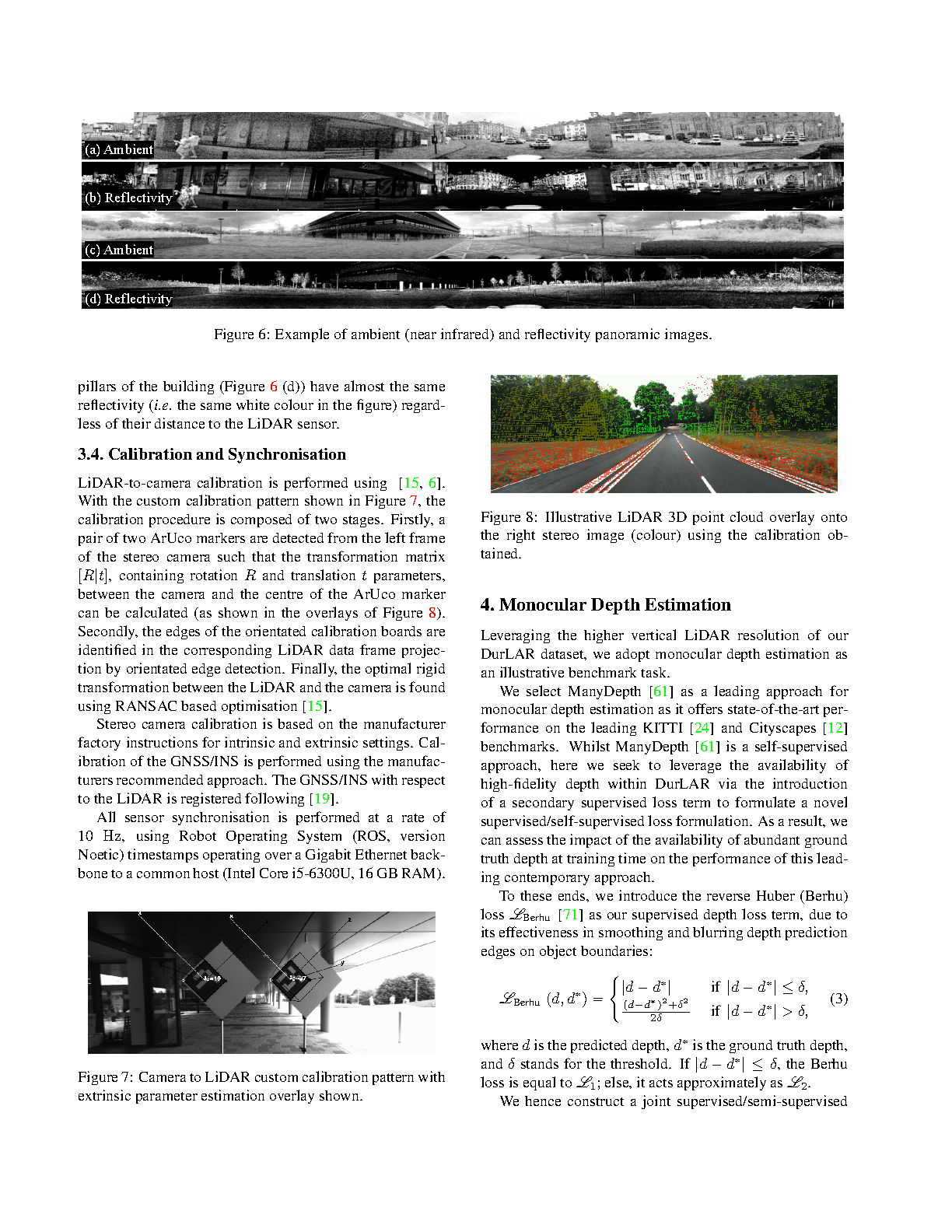

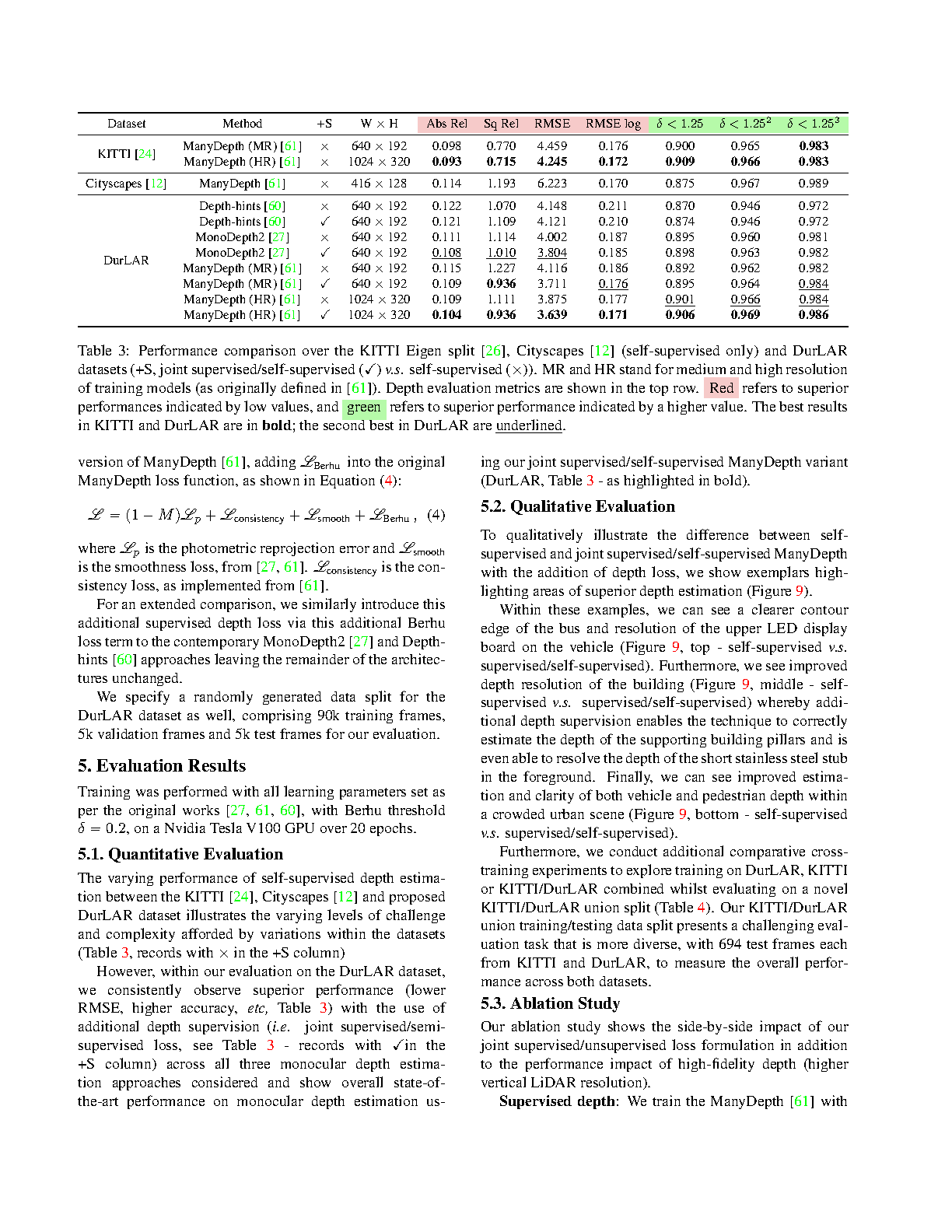

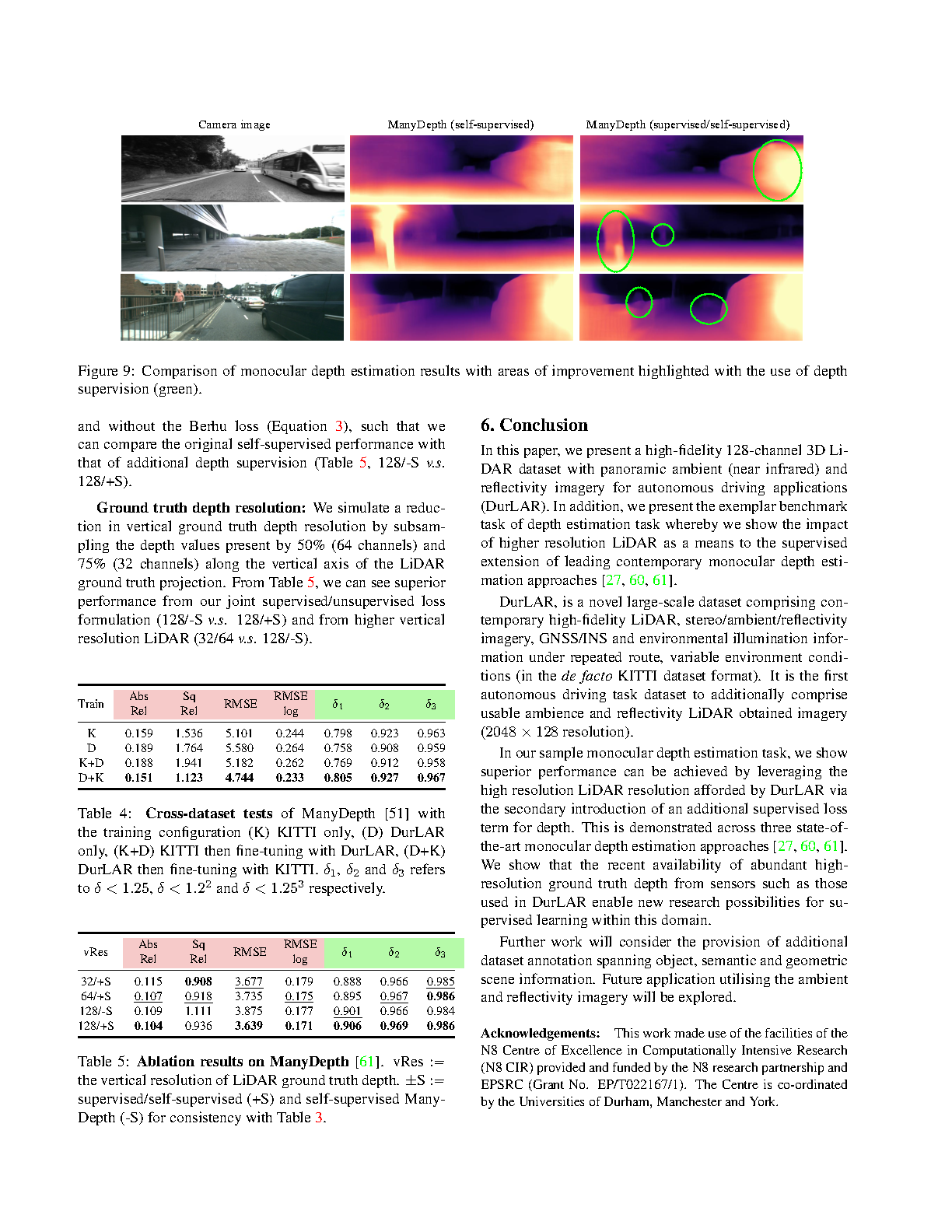

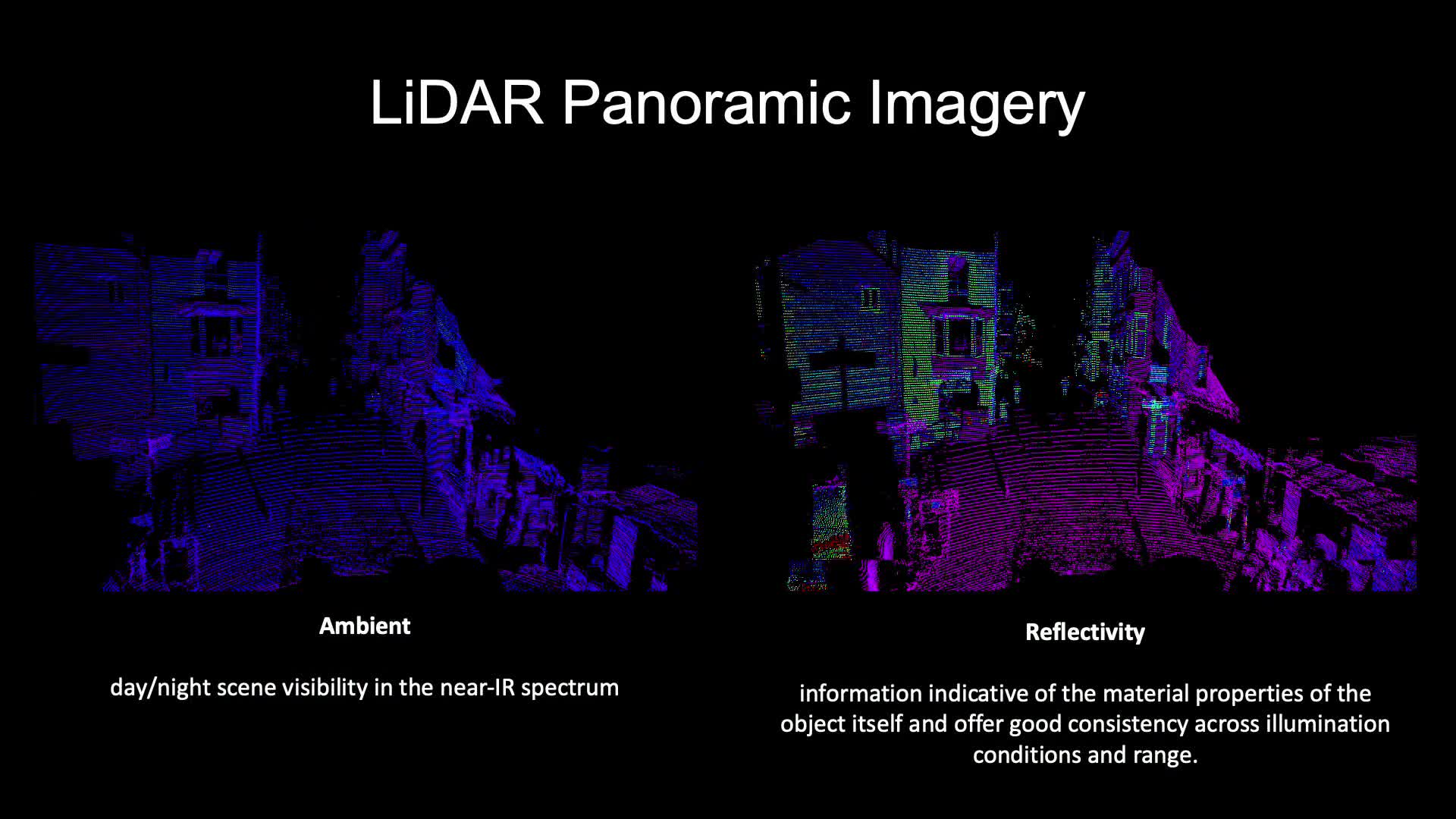

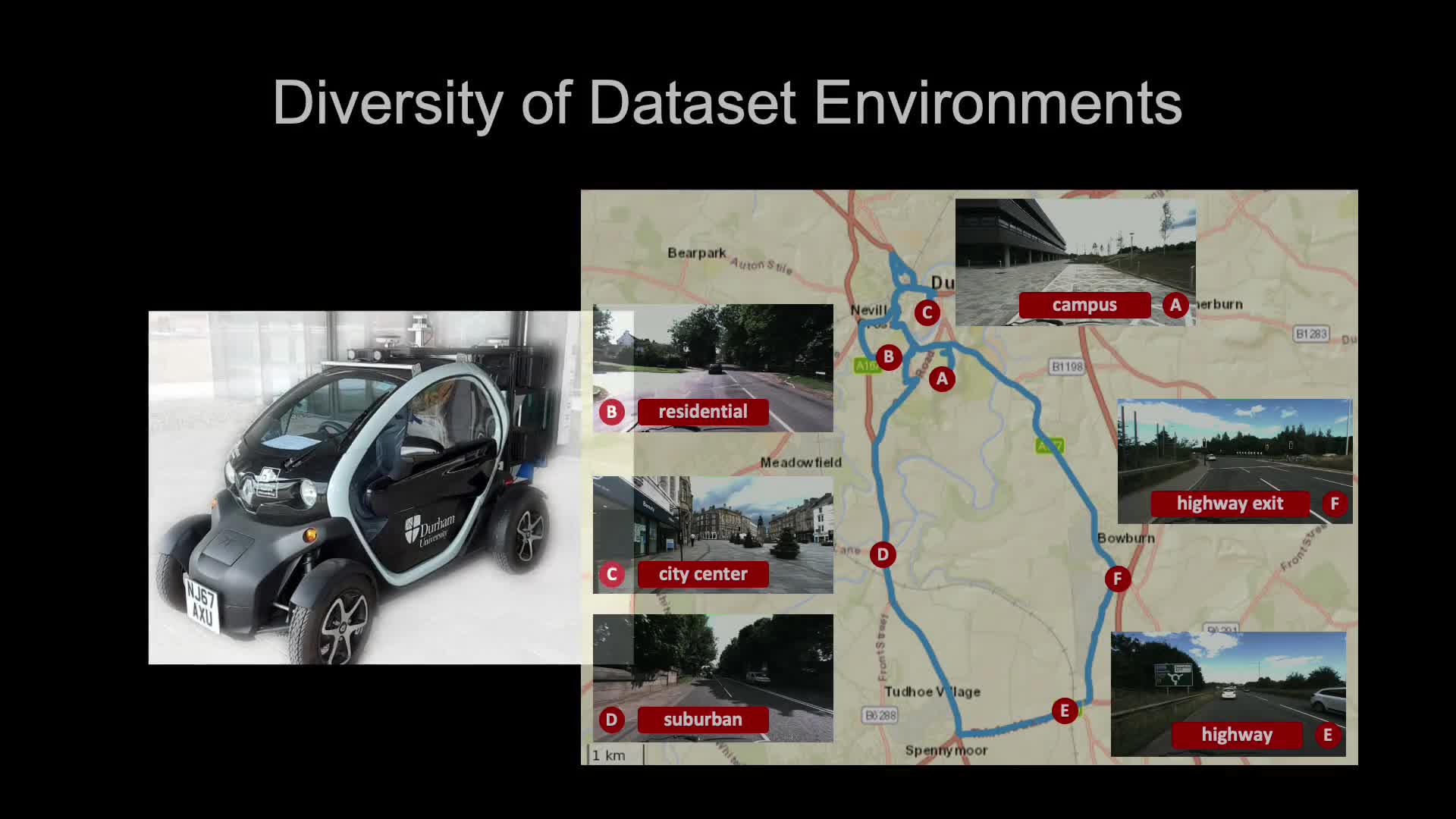

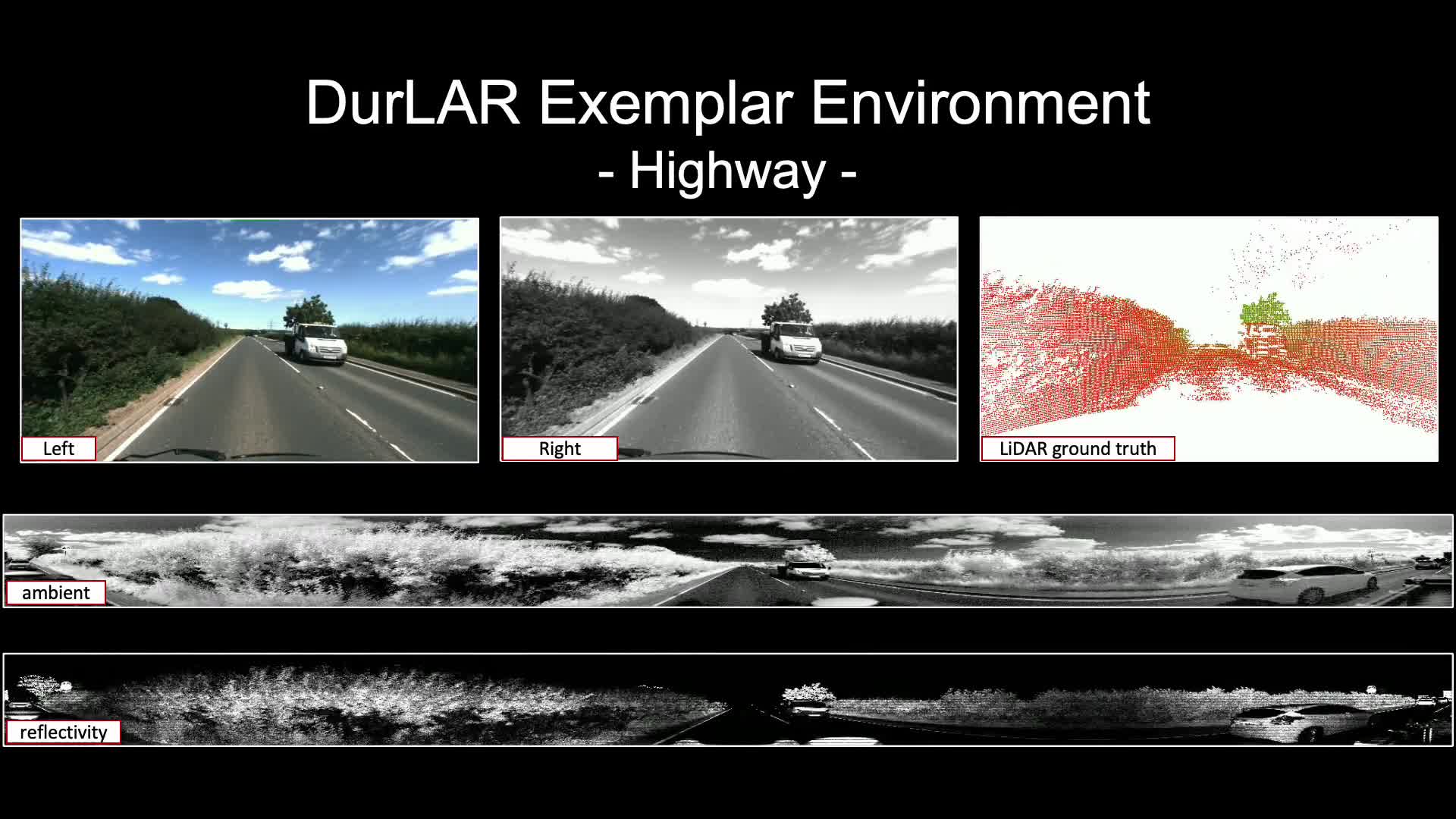

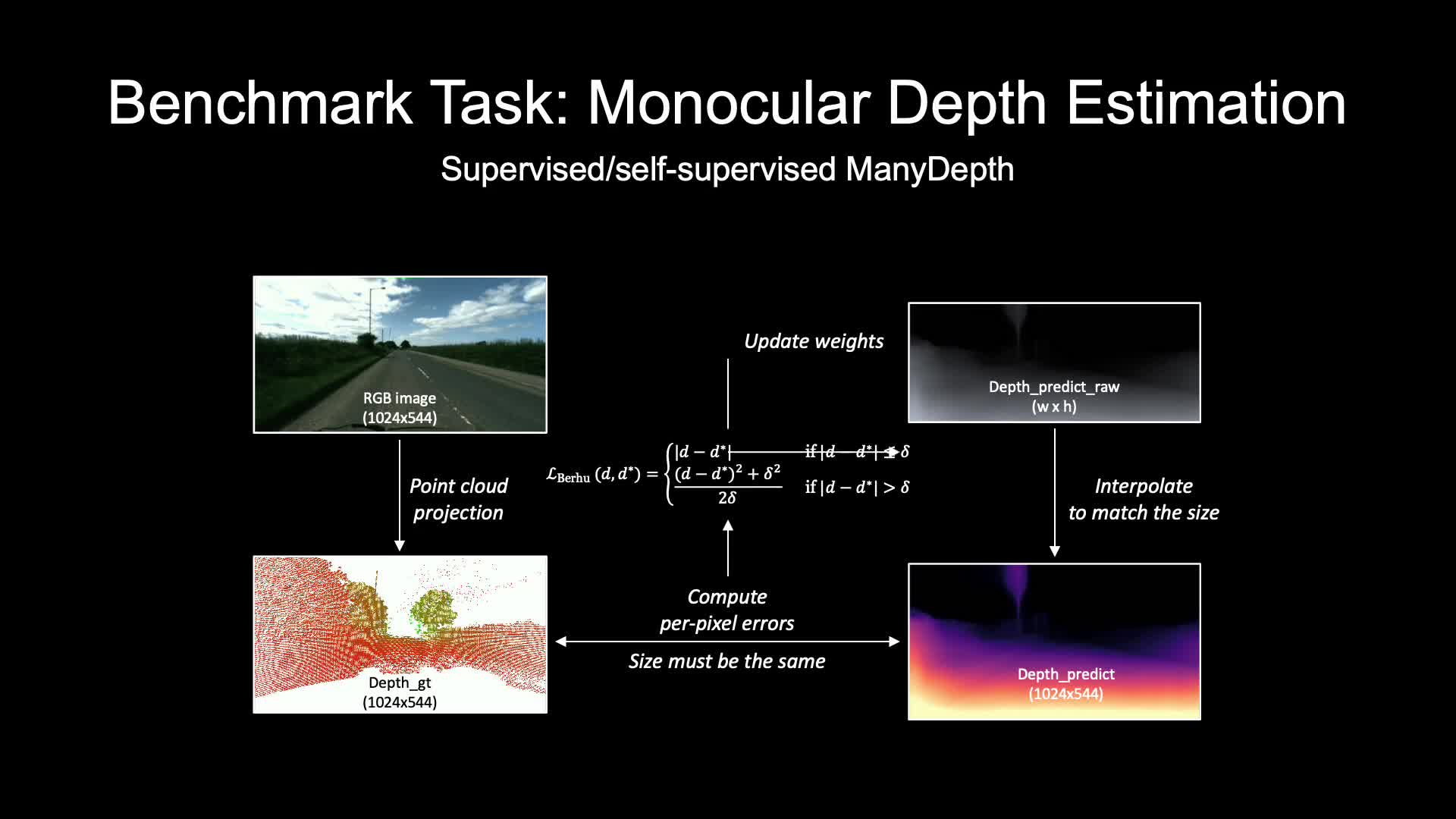

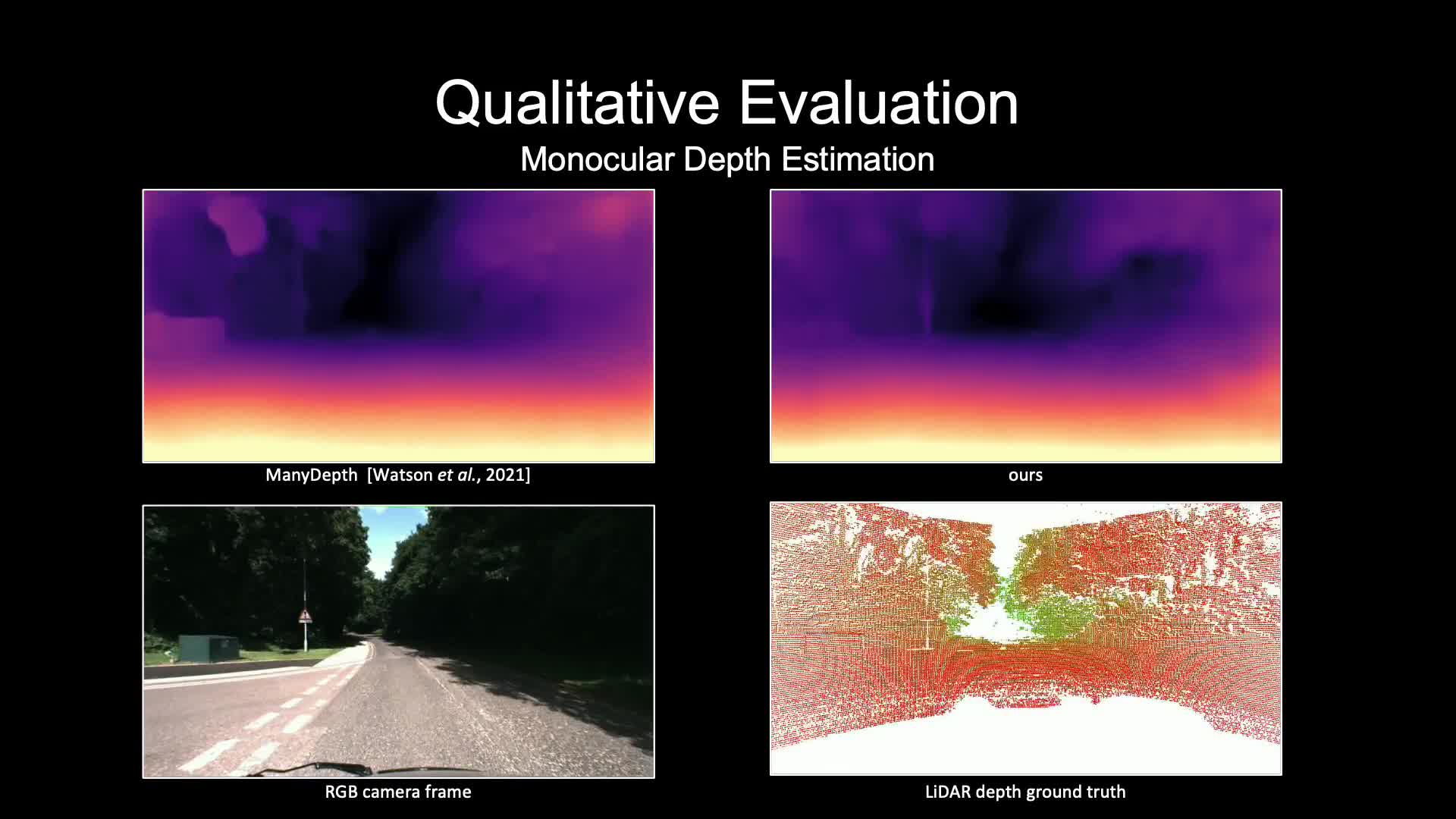

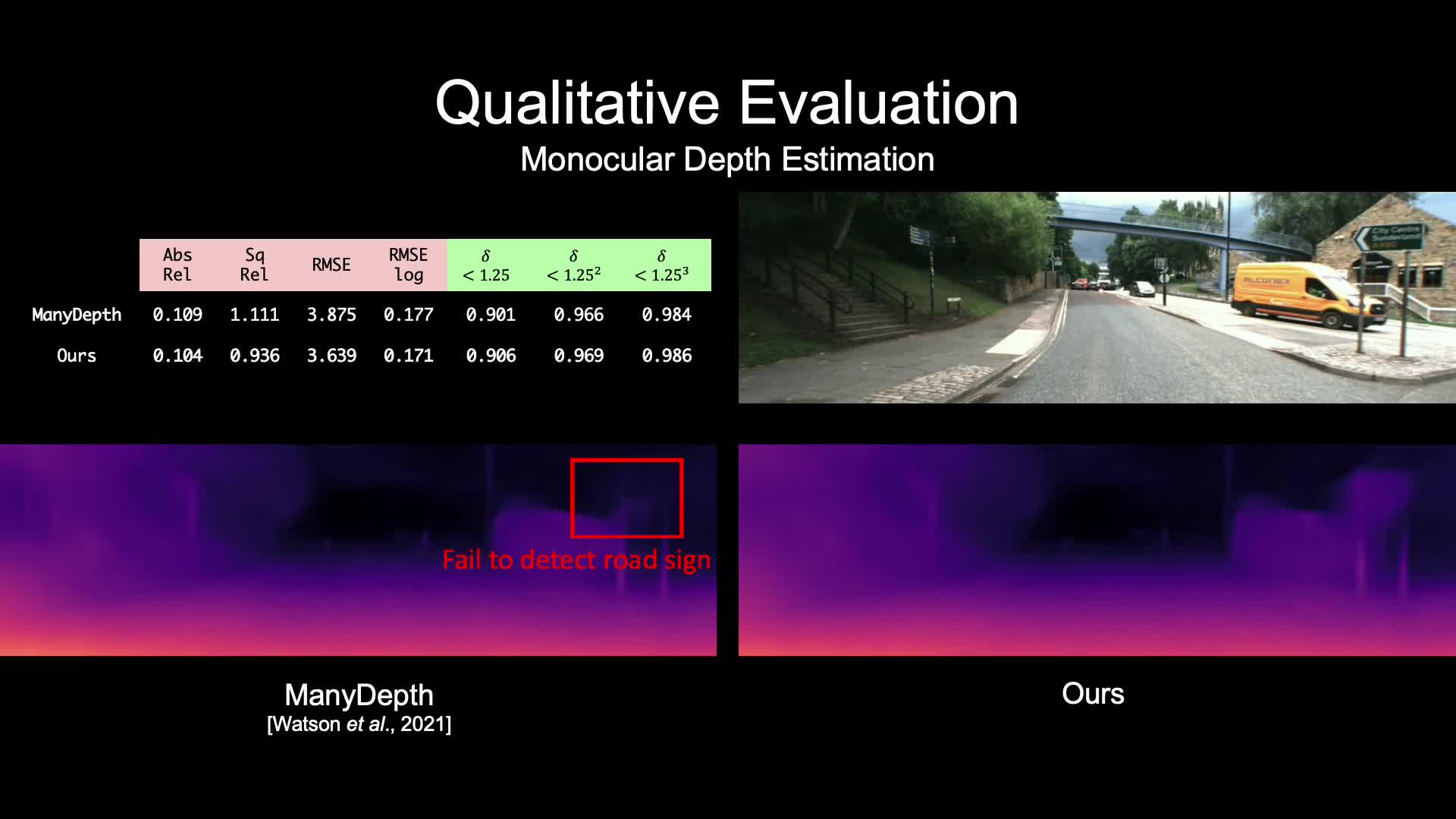

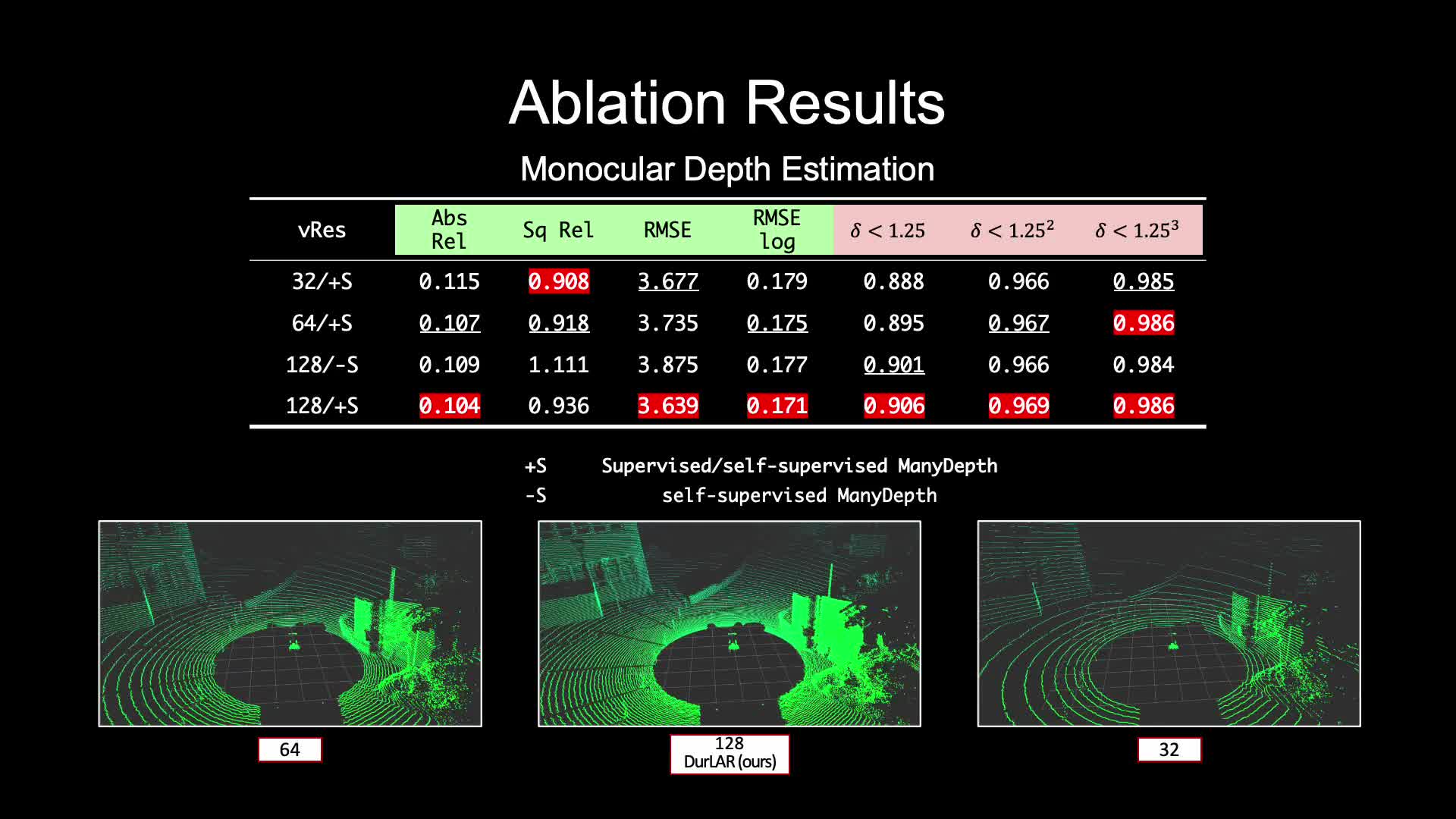

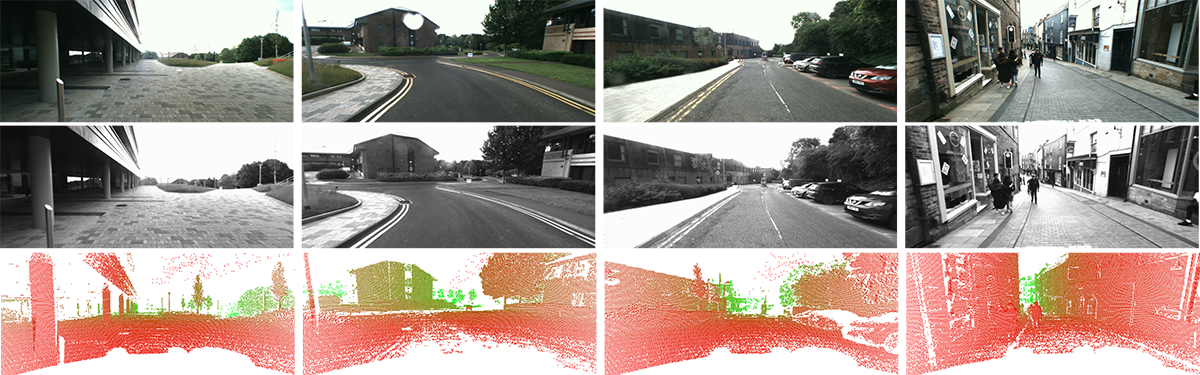

We present DurLAR, a high-fidelity 128-channel 3D LiDAR dataset with panoramic ambient (near infrared) and reflectivity imagery, as well as a sample benchmark task using depth estimation for autonomous driving applications. Our driving platform is equipped with a high-resolution 128-channel LiDAR, a 2MPix stereo camera, a lux meter and a GNSS/INS system. Ambient and reflectivity images are made available along with the LiDAR point clouds to facilitate multi-modal use of concurrent ambient and reflectivity scene information. Leveraging DurLAR, with a resolution exceeding that of prior benchmarks, we consider the task of monocular depth estimation and use this increased availability of higher-resolution, yet sparse, ground truth scene depth information to propose a novel joint supervised/self-supervised loss formulation. We compare performance across our new DurLAR dataset, the established KITTI benchmark and the Cityscapes dataset. Our evaluation shows that our joint use of supervised and self-supervised loss terms, enabled via the superior ground truth resolution and availability within DurLAR, improves the quantitative and qualitative performance of leading contemporary monocular depth estimation approaches (RMSE = 3.639, SqRel = 0.936).
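The abstract reports RMSE and SqRel without defining them. As a minimal sketch, assuming the definitions commonly used in monocular depth evaluation (root mean squared error, and squared error divided by ground truth depth, each averaged over valid pixels), the two metrics could be computed against sparse LiDAR ground truth as follows. The function name `depth_metrics`, the convention that a ground truth value of 0 marks a pixel with no LiDAR return, and the sample values are illustrative assumptions, not taken from the paper.

```python
import math


def depth_metrics(pred, gt):
    """Return (rmse, sq_rel) over pixels that have valid ground truth.

    Assumption: a ground truth depth <= 0 means "no LiDAR return" and the
    pixel is skipped, which is how sparse ground truth is usually handled.
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no valid ground truth depth values")
    n = len(pairs)
    # RMSE: square root of the mean squared depth error.
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # SqRel: mean of the squared error normalised by the true depth.
    sq_rel = sum((p - g) ** 2 / g for p, g in pairs) / n
    return rmse, sq_rel


# Hypothetical flattened depth values in metres; two pixels lack ground truth.
pred = [10.0, 22.0, 5.0, 40.0]
gt = [8.0, 20.0, 0.0, 0.0]
rmse, sq_rel = depth_metrics(pred, gt)
print(f"RMSE = {rmse:.3f}, SqRel = {sq_rel:.3f}")
```

Lower values are better for both metrics. Because only pixels with a LiDAR return contribute, denser ground truth gives the evaluation more pixels to average over.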