Denoising Diffusion Probabilistic Models for Styled Walking Synthesis

Edmund J. C. Findlay, Haozheng Zhang, Ziyi Chang and Hubert P. H. Shum

Proceedings of the 2022 ACM SIGGRAPH Conference on Motion, Interaction and Games (MIG) Posters, 2022

Abstract

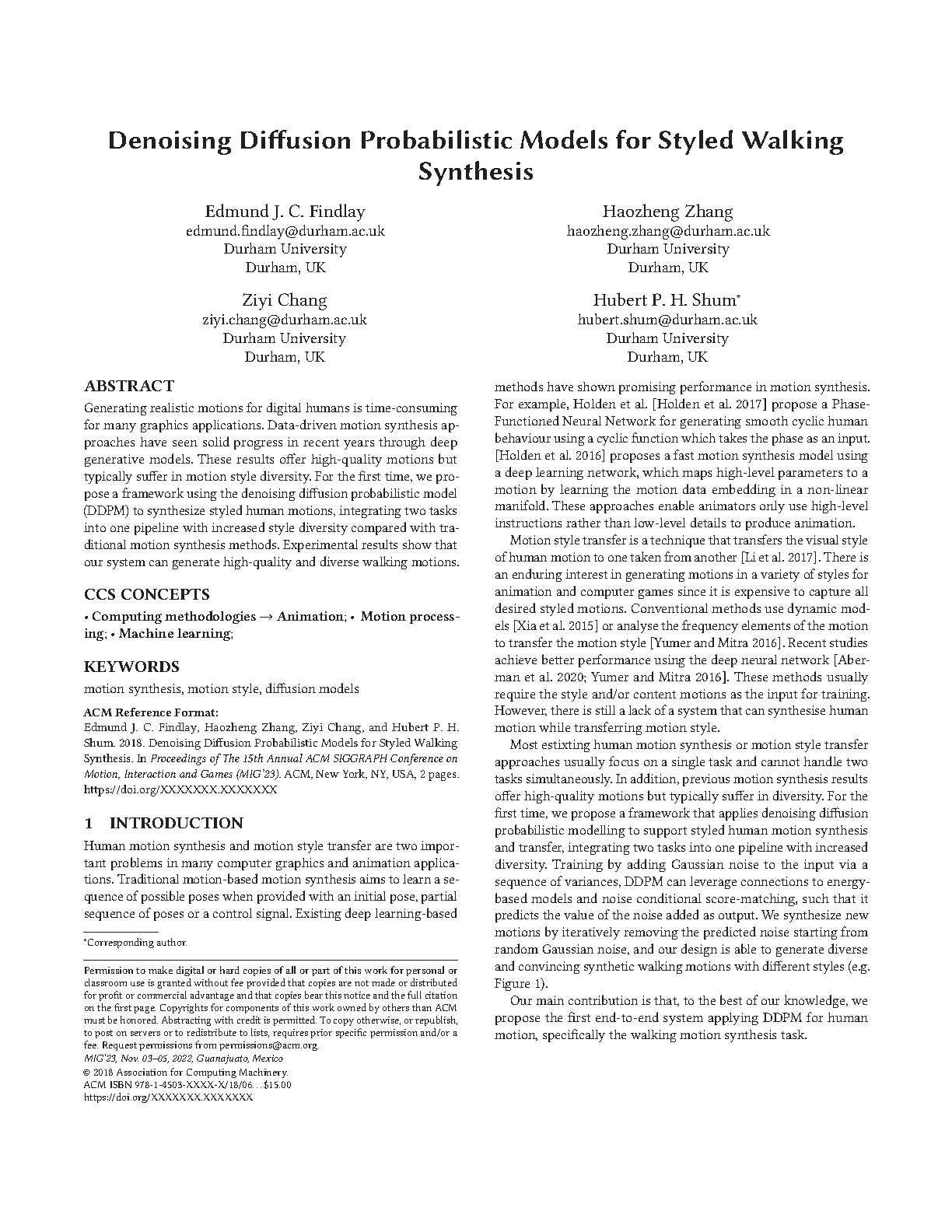

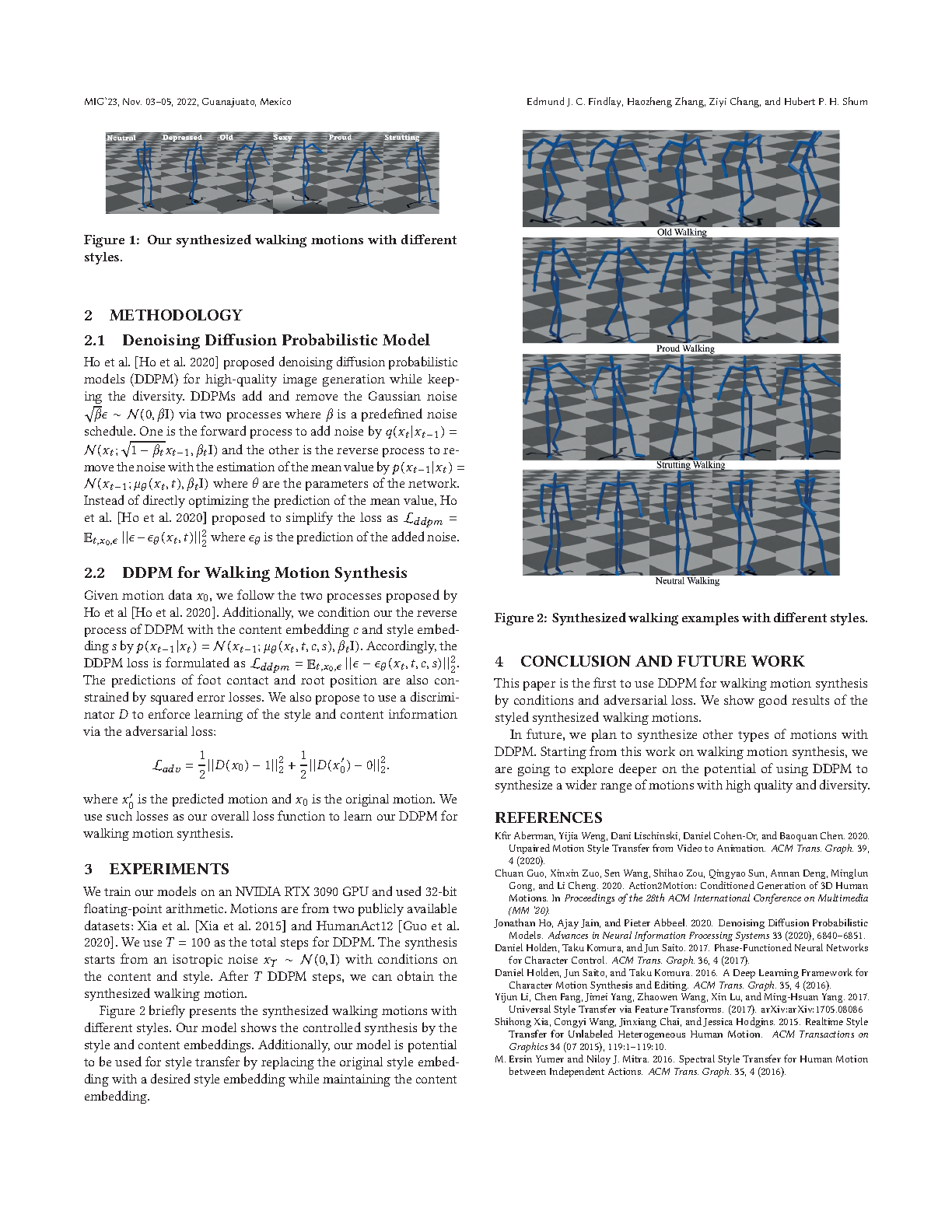

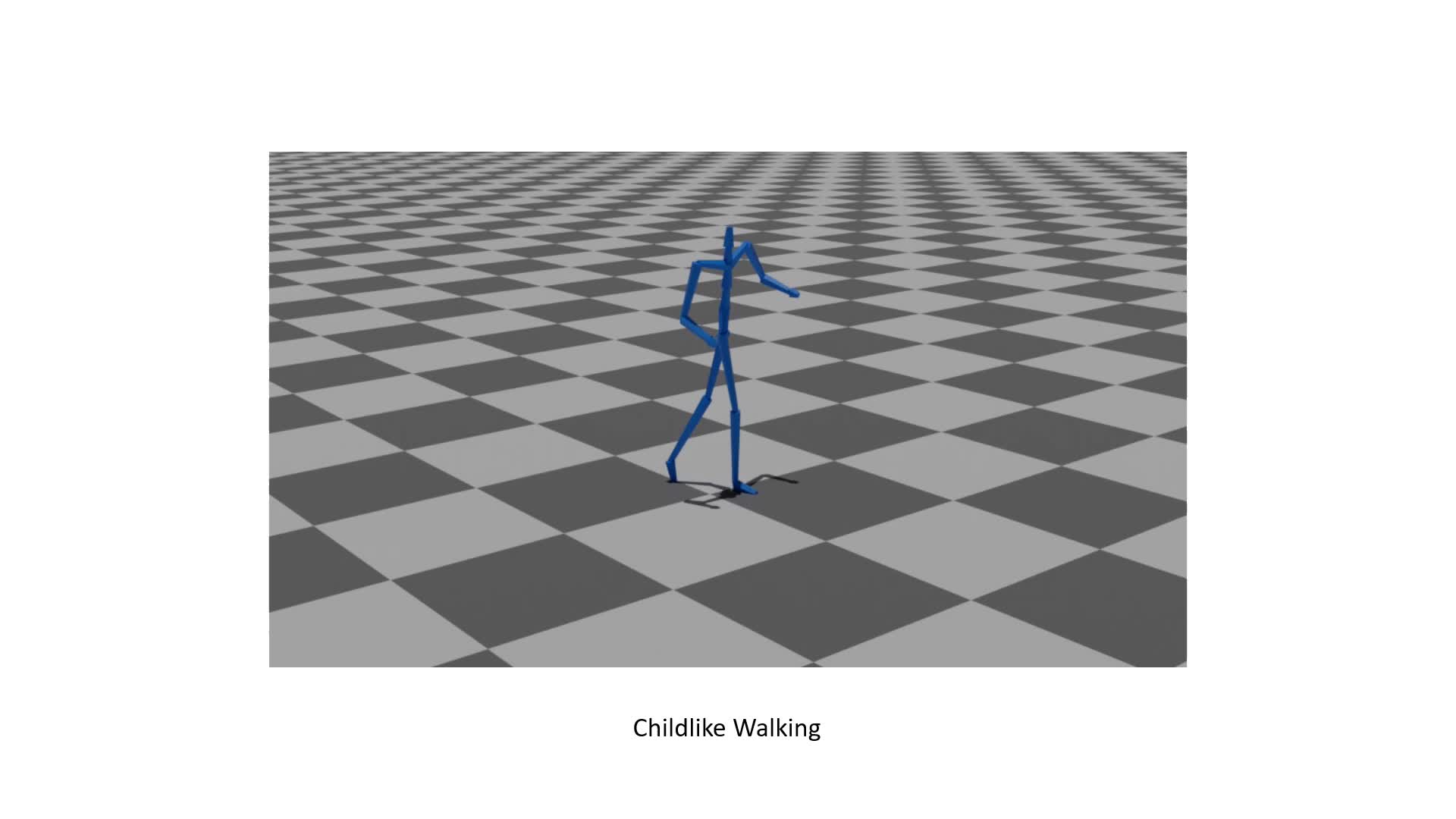

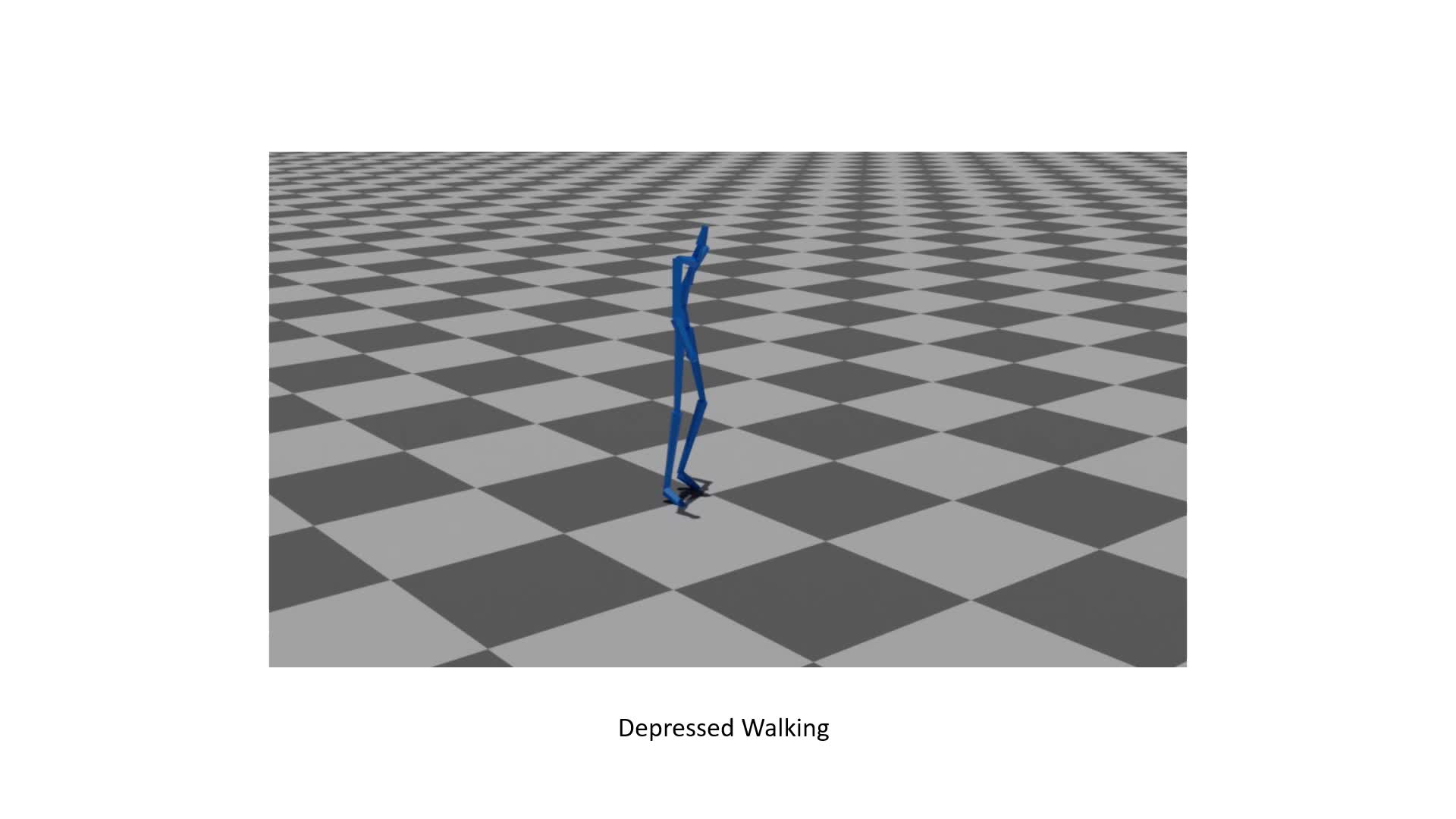

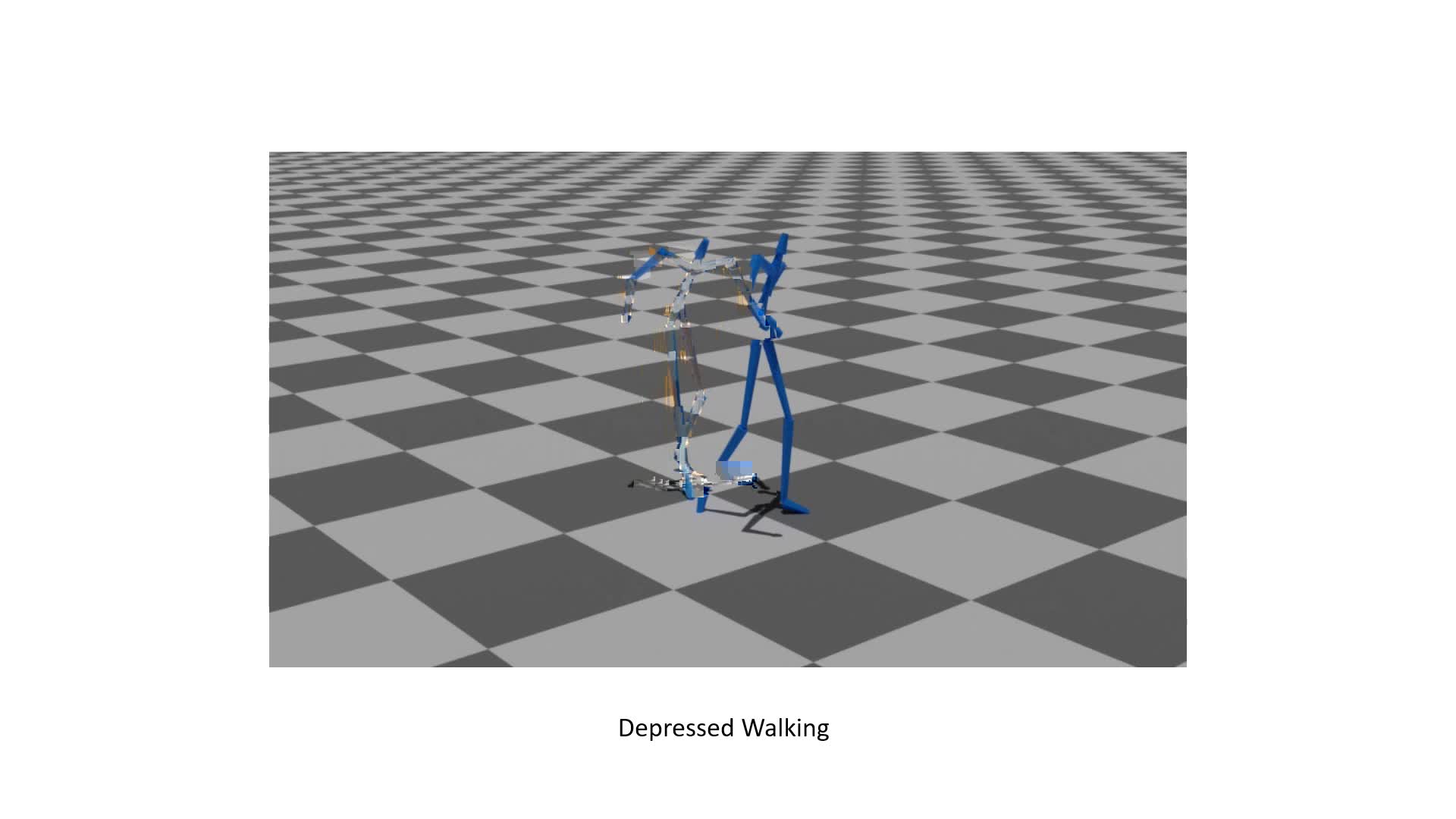

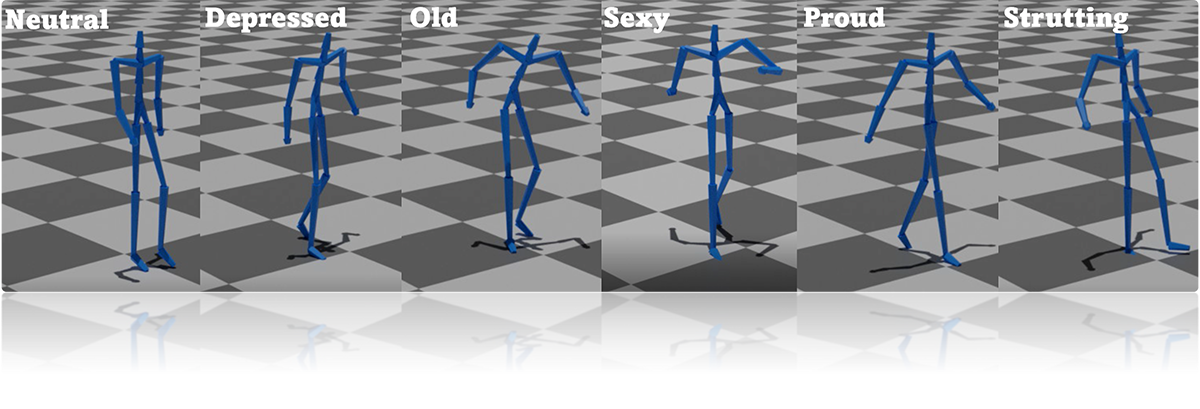

Generating realistic motions for digital humans is a time-consuming task in many graphics applications. Data-driven motion synthesis approaches have made solid progress in recent years through deep generative models. These approaches produce high-quality motions but typically lack diversity in motion style. For the first time, we propose a framework that uses the denoising diffusion probabilistic model (DDPM) to synthesize styled human motions, integrating two tasks into one pipeline with greater style diversity than traditional motion synthesis methods. Experimental results show that our system can generate high-quality and diverse walking motions.

Similar Research

Ziyi Chang, Edmund J. C. Findlay, Haozheng Zhang and Hubert P. H. Shum, "Unifying Human Motion Synthesis and Style Transfer with Denoising Diffusion Probabilistic Models", Proceedings of the 2023 International Conference on Computer Graphics Theory and Applications (GRAPP), 2023

He Wang, Edmond S. L. Ho, Hubert P. H. Shum and Zhanxing Zhu, "Spatio-Temporal Manifold Learning for Human Motions via Long-Horizon Modeling", IEEE Transactions on Visualization and Computer Graphics (TVCG), 2021

Liuyang Zhou, Lifeng Shang, Hubert P. H. Shum and Howard Leung, "Human Motion Variation Synthesis with Multivariate Gaussian Processes", Computer Animation and Virtual Worlds (CAVW) - Proceedings of the 2014 International Conference on Computer Animation and Social Agents (CASA), 2014

Edmond S. L. Ho, Hubert P. H. Shum, He Wang and Li Yi, "Synthesizing Motion with Relative Emotion Strength", Proceedings of the 2017 ACM SIGGRAPH Asia Workshop on Data-Driven Animation Techniques (D2AT), 2017

Qianhui Men, Hubert P. H. Shum, Edmond S. L. Ho and Howard Leung, "GAN-Based Reactive Motion Synthesis with Class-Aware Discriminators for Human-Human Interaction", Computers and Graphics (C&G), 2022

Hubert P. H. Shum, Taku Komura and Pranjul Yadav, "Angular Momentum Guided Motion Concatenation", Computer Animation and Virtual Worlds (CAVW) - Proceedings of the 2009 International Conference on Computer Animation and Social Agents (CASA), 2009